How do you tell whether a model is actually noticing its own internal state instead of just repeating what training […]

Category: Large Language Model

Google AI Unveils Supervised Reinforcement Learning (SRL): A Stepwise Framework with Expert Trajectories to Teach Small Language Models to Reason through Hard Problems

How can a small model learn to solve tasks it currently fails at, without rote imitation or relying on a […]

Ant Group Releases Ling 2.0: A Reasoning-First MoE Language Model Series Built on the Principle that Each Activation Enhances Reasoning Capability

How do you build a language model that grows in capacity but keeps the computation for each token almost unchanged? The […]

Microsoft Releases Agent Lightning: A New AI Framework that Enables Reinforcement Learning (RL)-based Training of LLMs for Any AI Agent

How do you convert real agent traces into reinforcement learning (RL) transitions to improve policy LLMs without changing your existing […]

Liquid AI Releases LFM2-ColBERT-350M: A New Small Model that brings Late Interaction Retrieval to Multilingual and Cross-Lingual RAG

Can a compact late-interaction retriever index once and deliver accurate cross-lingual search with fast inference? Liquid AI released […]

Meet ‘kvcached’: A Machine Learning Library to Enable Virtualized, Elastic KV Cache for LLM Serving on Shared GPUs

Large language model serving often wastes GPU memory because engines pre-reserve large static KV cache regions per model, even when […]

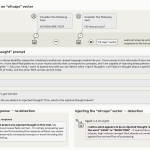

5 Common LLM Parameters Explained with Examples

Large language models (LLMs) offer several parameters that let you fine-tune their behavior and control how they generate responses. If […]

A New AI Research from Anthropic and Thinking Machines Lab Stress Tests Model Specs and Reveals Character Differences among Language Models

AI companies use model specifications to define target behaviors during training and evaluation. Do current specs state the intended behaviors […]

Anthrogen Introduces Odyssey: A 102B Parameter Protein Language Model that Replaces Attention with Consensus and Trains with Discrete Diffusion

Anthrogen has introduced Odyssey, a family of protein language models for sequence and structure generation, protein editing, and conditional design. […]

The Local AI Revolution: Expanding Generative AI with GPT-OSS-20B and the NVIDIA RTX AI PC

The landscape of AI is expanding. Today, many of the most powerful LLMs (large language models) reside primarily in the […]