Rare diseases impact some 400 million people worldwide, accounting for over 7,000 individual disorders, and most of these, about 80%, […]

Category: Large Language Model

Tencent Open Sources Hunyuan-A13B: A 13B Active Parameter MoE Model with Dual-Mode Reasoning and 256K Context

Tencent’s Hunyuan team has introduced Hunyuan-A13B, a new open-source large language model built on a sparse Mixture-of-Experts (MoE) architecture. While […]

Alibaba Qwen Team Releases Qwen-VLo: A Unified Multimodal Understanding and Generation Model

The Alibaba Qwen team has introduced Qwen-VLo, a new addition to its Qwen model family, designed to unify multimodal understanding […]

Google DeepMind Releases AlphaGenome: A Deep Learning Model that can more Comprehensively Predict the Impact of Single Variants or Mutations in DNA

A Unified Deep Learning Model to Understand the Genome Google DeepMind has unveiled AlphaGenome, a new deep learning framework designed […]

MIT and NUS Researchers Introduce MEM1: A Memory-Efficient Framework for Long-Horizon Language Agents

Modern language agents need to handle multi-turn conversations, retrieving and updating information as tasks evolve. However, most current systems simply […]

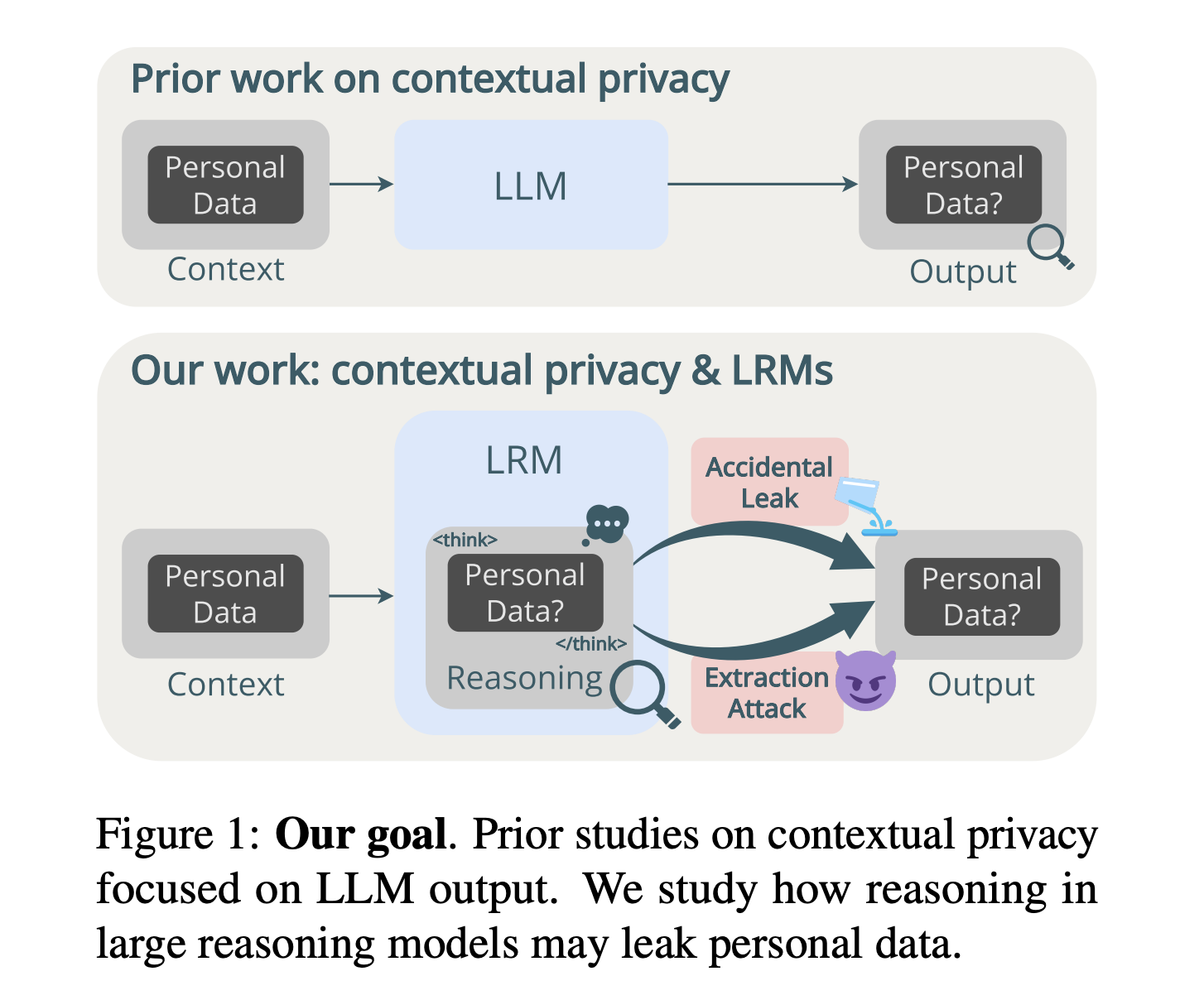

New AI Research Reveals Privacy Risks in LLM Reasoning Traces

Introduction: Personal LLM Agents and Privacy Risks LLMs are deployed as personal assistants, gaining access to sensitive user data through […]

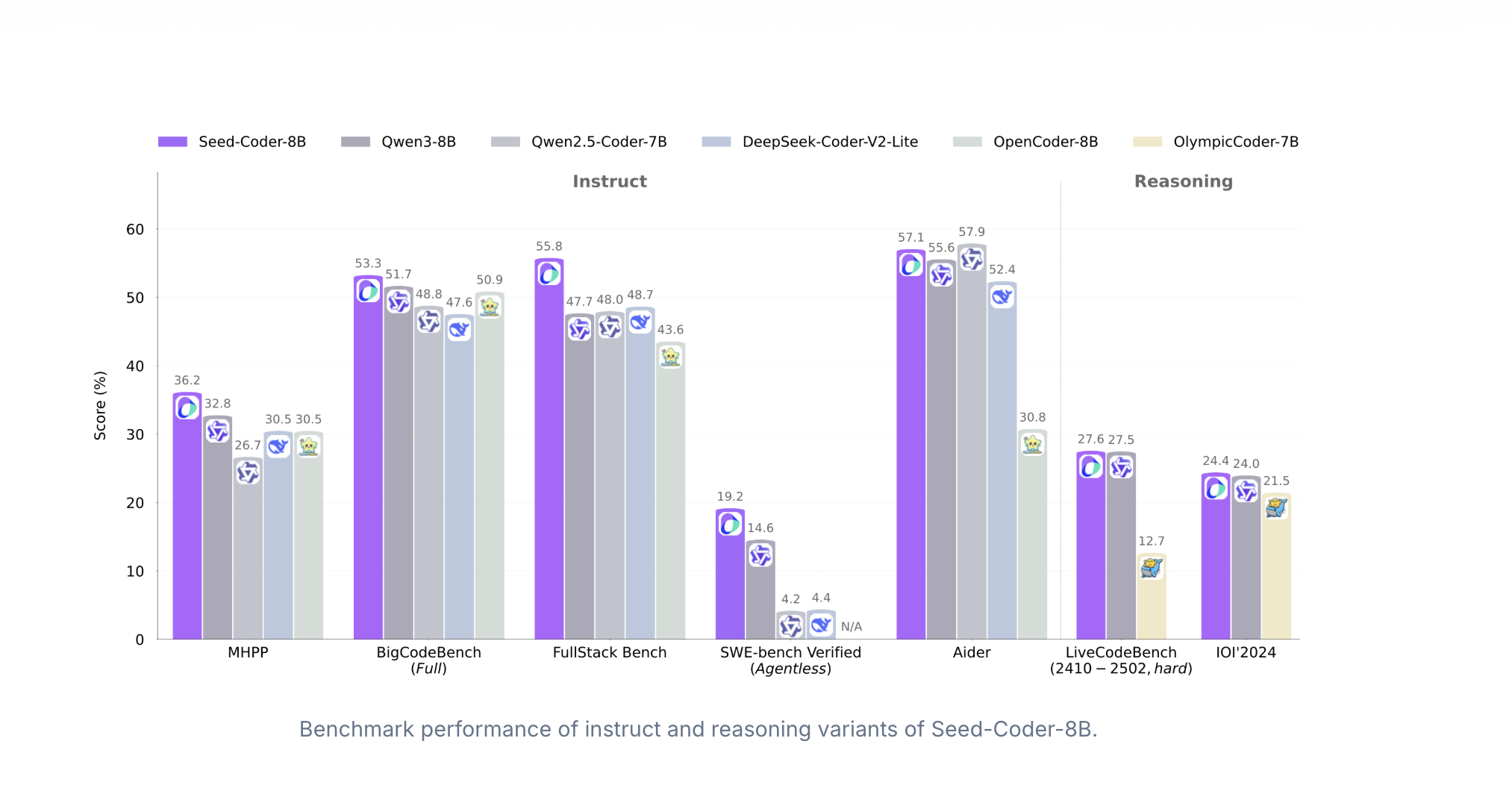

ByteDance Researchers Introduce Seed-Coder: A Model-Centric Code LLM Trained on 6 Trillion Tokens

Reframing Code LLM Training through Scalable, Automated Data Pipelines Code data plays a key role in training LLMs, benefiting not […]

ByteDance Researchers Introduce ProtoReasoning: Enhancing LLM Generalization via Logic-Based Prototypes

Why Cross-Domain Reasoning Matters in Large Language Models (LLMs) Recent breakthroughs in LRMs, especially those trained using Long CoT techniques, […]

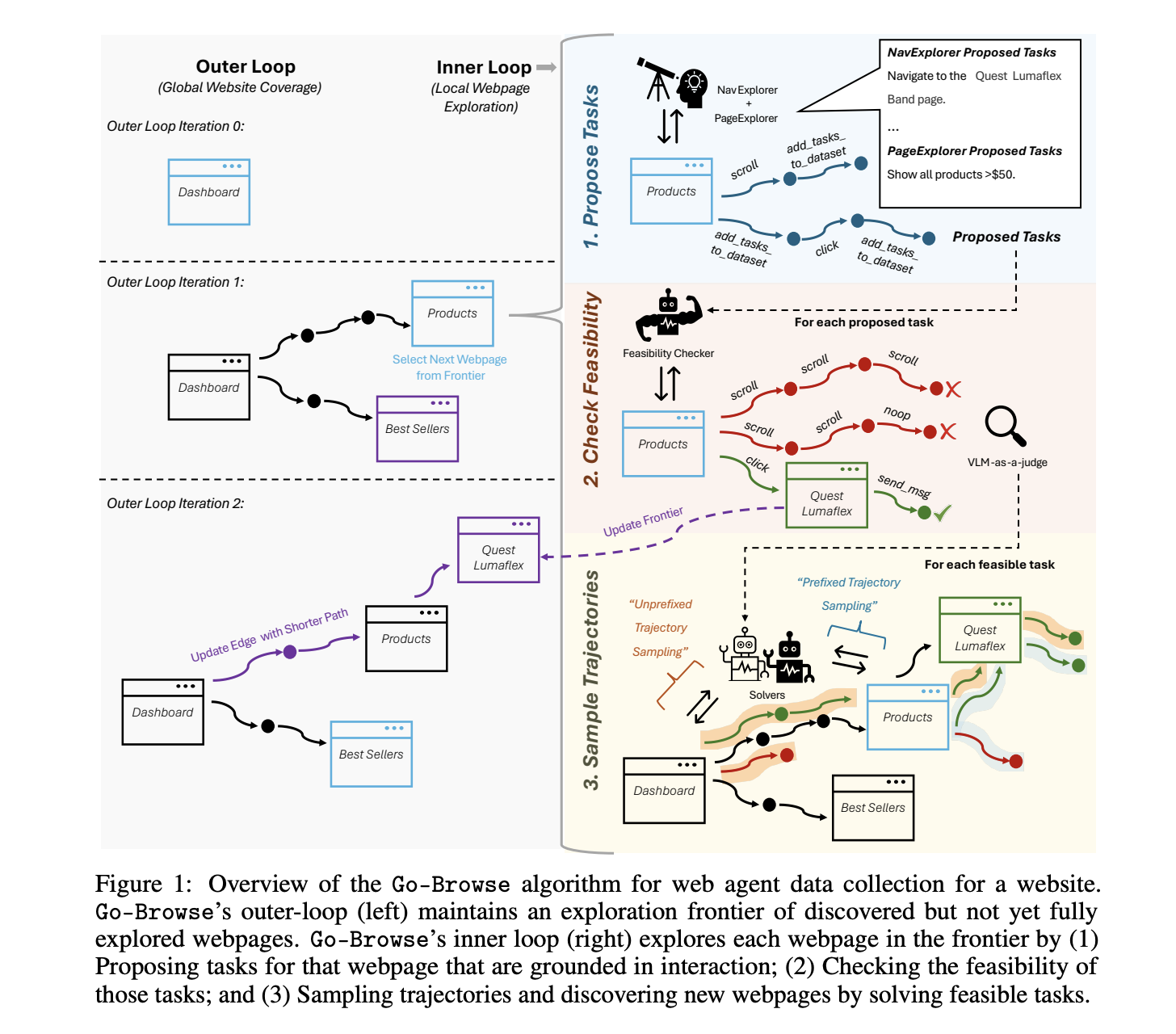

CMU Researchers Introduce Go-Browse: A Graph-Based Framework for Scalable Web Agent Training

Why Web Agents Struggle with Dynamic Web Interfaces Digital agents designed for web environments aim to automate tasks such as […]

Do AI Models Act Like Insider Threats? Anthropic’s Simulations Say Yes

Anthropic’s latest research investigates a critical security frontier in artificial intelligence: the emergence of insider threat-like behaviors from large language […]