LLMs and the Need for Scientific Code Control. LLMs have rapidly evolved into complex natural language processors, enabling the development […]

Category: Applications

Alibaba Qwen Team Releases Qwen-VLo: A Unified Multimodal Understanding and Generation Model

The Alibaba Qwen team has introduced Qwen-VLo, a new addition to its Qwen model family, designed to unify multimodal understanding […]

Unbabel Introduces TOWER+: A Unified Framework for High-Fidelity Translation and Instruction-Following in Multilingual LLMs

Large language models have driven progress in machine translation, leveraging massive training corpora to translate dozens of languages and dialects […]

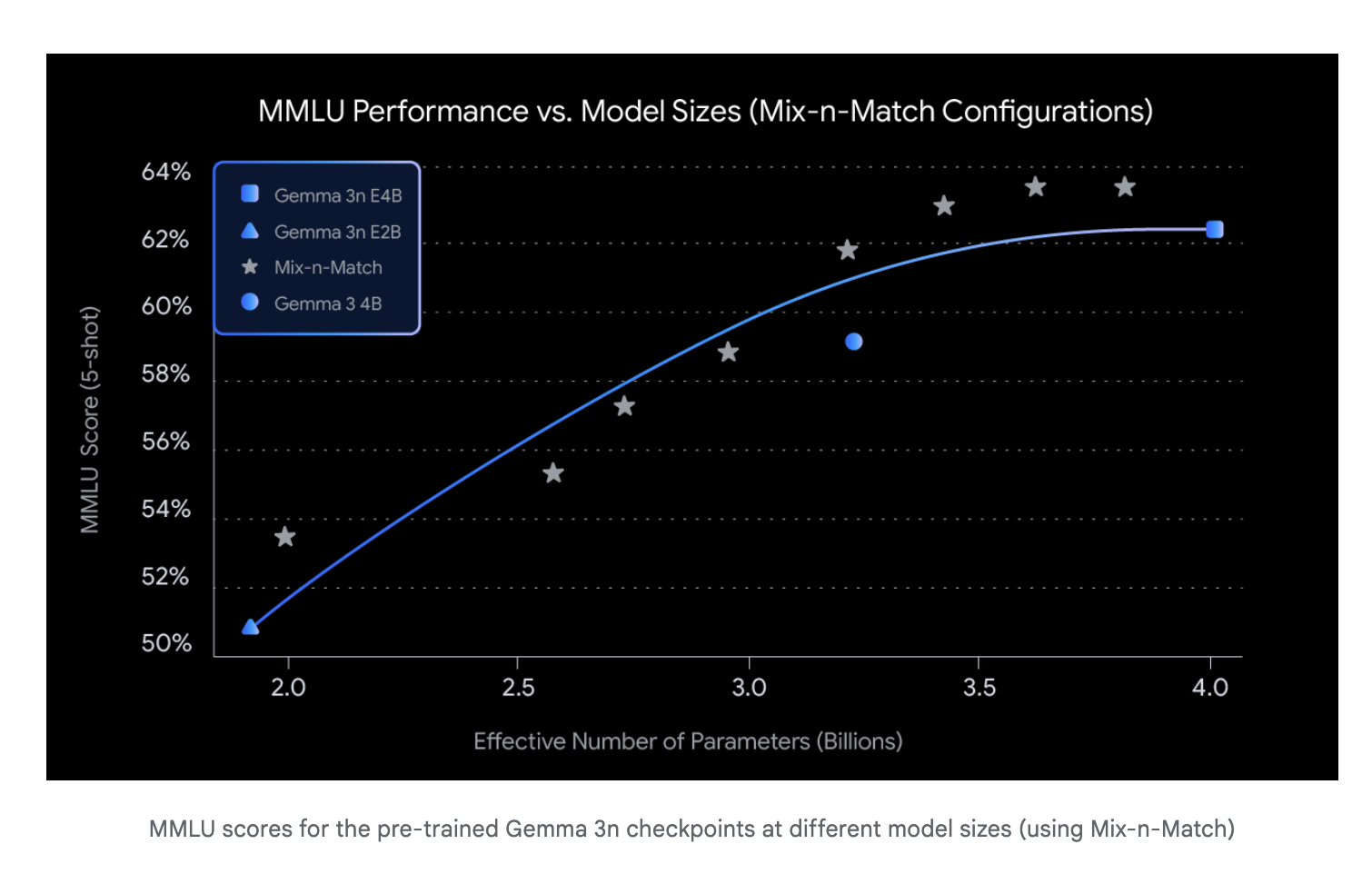

Google AI Releases Gemma 3n: A Compact Multimodal Model Built for Edge Deployment

Google has introduced Gemma 3n, a new addition to its family of open models, designed to bring large multimodal AI […]

Inception Labs Introduces Mercury: A Diffusion-Based Language Model for Ultra-Fast Code Generation

Generative AI and Its Challenges in Autoregressive Code Generation. The field of generative artificial intelligence has significantly impacted software development […]

Google DeepMind Releases AlphaGenome: A Deep Learning Model That Can More Comprehensively Predict the Impact of Single Variants or Mutations in DNA

A Unified Deep Learning Model to Understand the Genome. Google DeepMind has unveiled AlphaGenome, a new deep learning framework designed […]

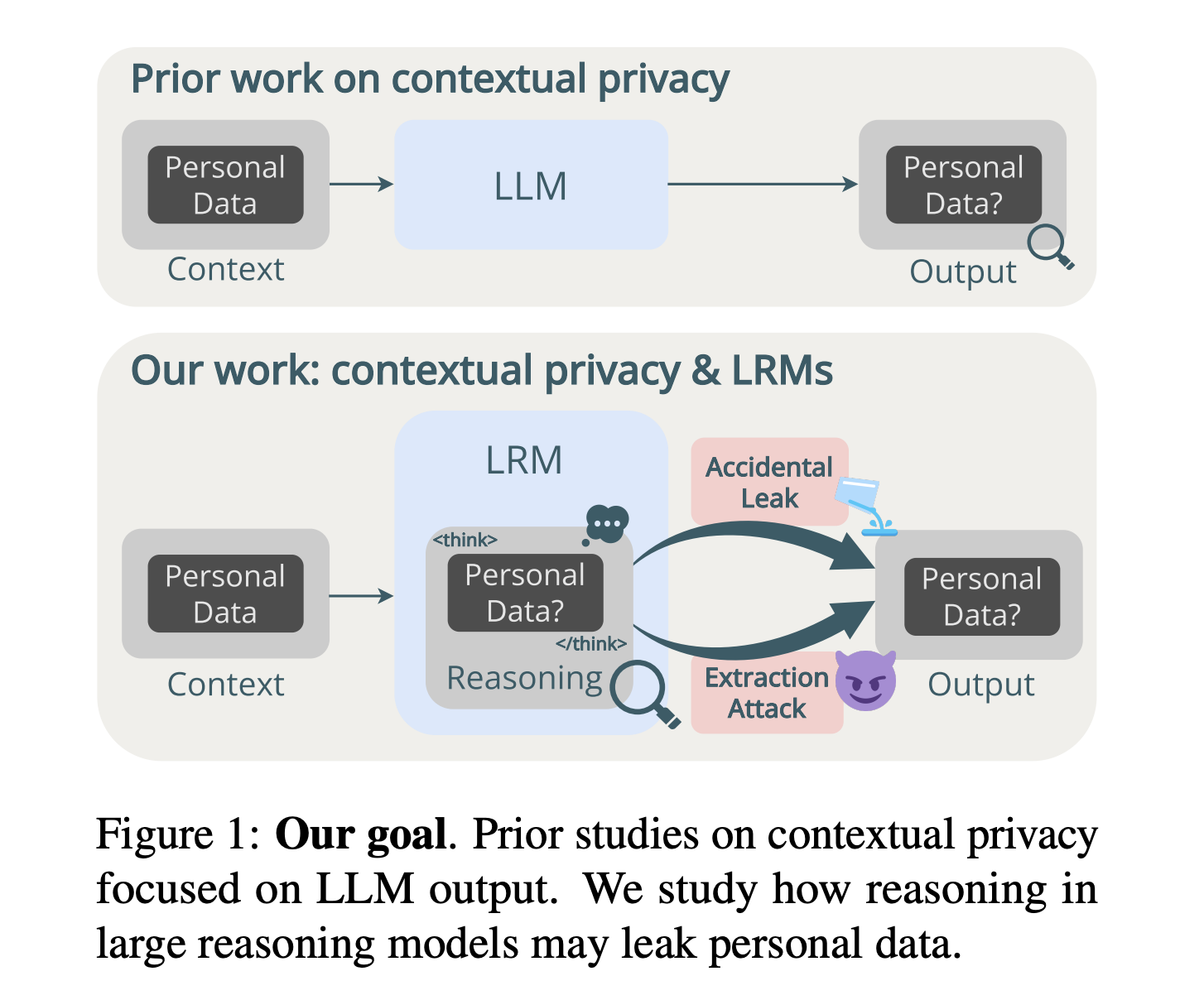

New AI Research Reveals Privacy Risks in LLM Reasoning Traces

Introduction: Personal LLM Agents and Privacy Risks. LLMs are deployed as personal assistants, gaining access to sensitive user data through […]

ETH and Stanford Researchers Introduce MIRIAD: A 5.8M Pair Dataset to Improve LLM Accuracy in Medical AI

Challenges of LLMs in Medical Decision-Making: Addressing Hallucinations via Knowledge Retrieval. LLMs are set to revolutionize healthcare through intelligent decision […]

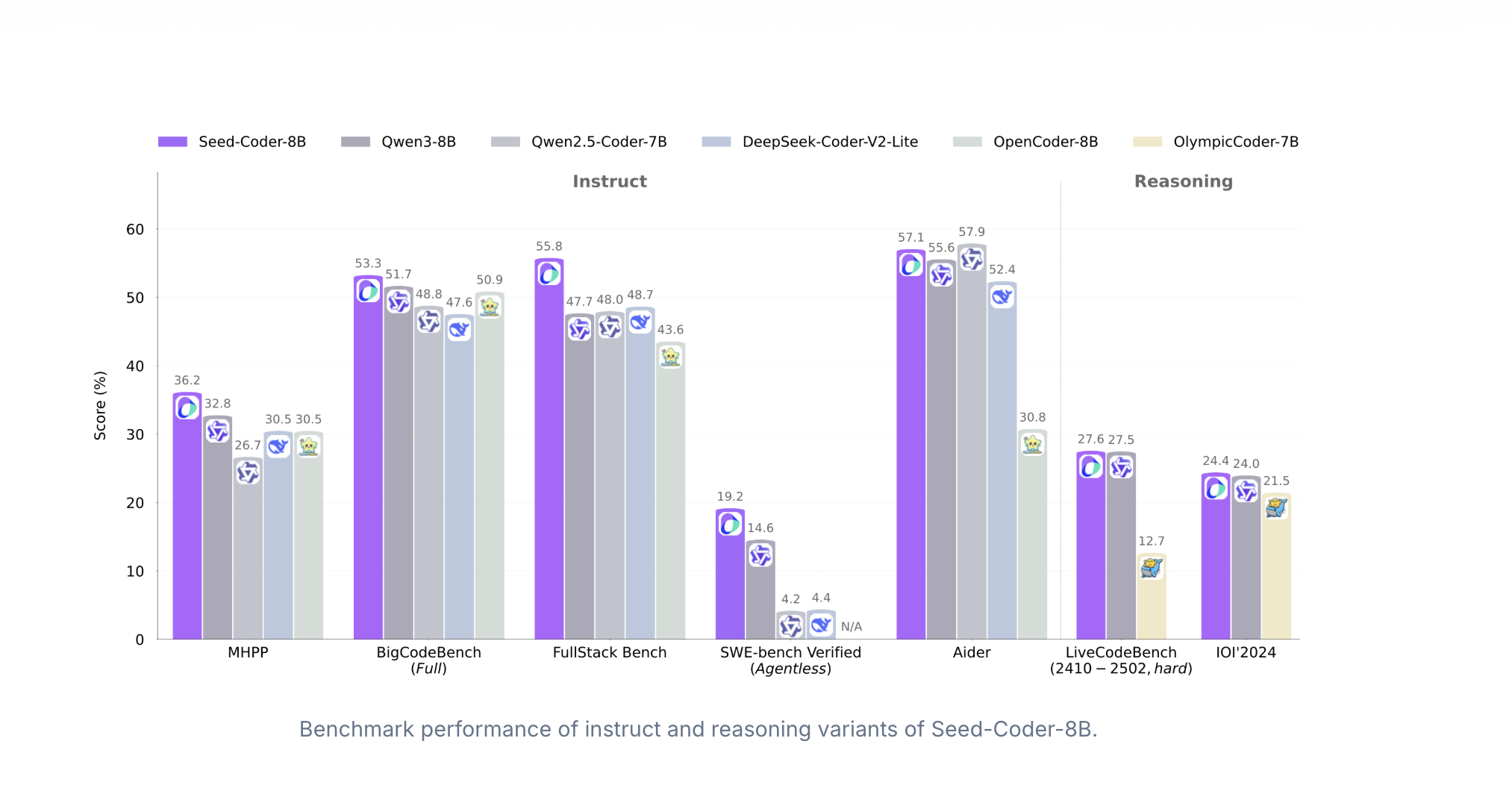

ByteDance Researchers Introduce Seed-Coder: A Model-Centric Code LLM Trained on 6 Trillion Tokens

Reframing Code LLM Training through Scalable, Automated Data Pipelines. Code data plays a key role in training LLMs, benefiting not […]

ByteDance Researchers Introduce ProtoReasoning: Enhancing LLM Generalization via Logic-Based Prototypes

Why Cross-Domain Reasoning Matters in Large Language Models (LLMs). Recent breakthroughs in LRMs, especially those trained using Long CoT techniques, […]