LLMs often show a peculiar behavior where the first token in a sequence draws unusually high attention—known as an “attention […]

Category: Large Language Model

Salesforce AI Released APIGen-MT and xLAM-2-fc-r Model Series: Advancing Multi-Turn Agent Training with Verified Data Pipelines and Scalable LLM Architectures

AI agents quickly become core components in handling complex human interactions, particularly in business environments where conversations span multiple turns […]

Huawei Noah’s Ark Lab Released Dream 7B: A Powerful Open Diffusion Reasoning Model with Advanced Planning and Flexible Inference Capabilities

LLMs have revolutionized artificial intelligence, transforming various applications across industries. Autoregressive (AR) models dominate current text generation, with leading systems […]

This AI Paper from ByteDance Introduces MegaScale-Infer: A Disaggregated Expert Parallelism System for Efficient and Scalable MoE-Based LLM Serving

Large language models are built on transformer architectures and power applications like chat, code generation, and search, but their growing […]

A Code Implementation to Use Ollama through Google Colab and Building a Local RAG Pipeline on Using DeepSeek-R1 1.5B through Ollama, LangChain, FAISS, and ChromaDB for Q&A

In this tutorial, we’ll build a fully functional Retrieval-Augmented Generation (RAG) pipeline using open-source tools that run seamlessly on Google […]

RARE (Retrieval-Augmented Reasoning Modeling): A Scalable AI Framework for Domain-Specific Reasoning in Lightweight Language Models

LLMs have demonstrated strong general-purpose performance across various tasks, including mathematical reasoning and automation. However, they struggle in domain-specific applications […]

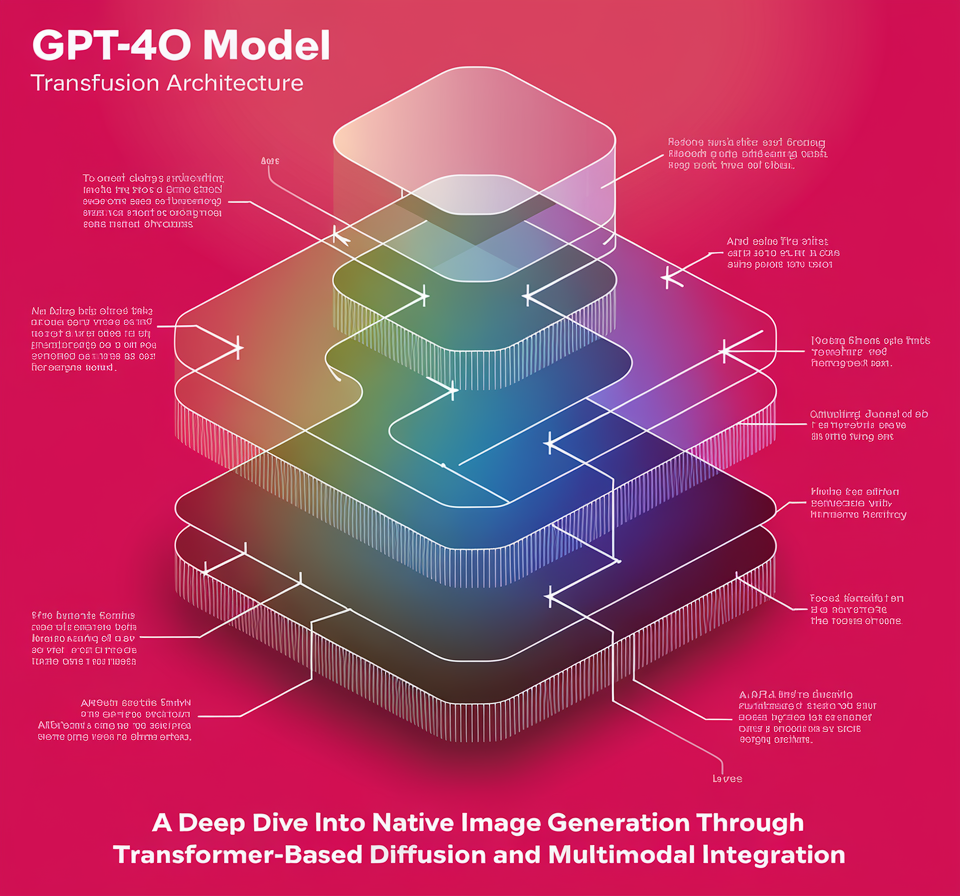

Transformer Meets Diffusion: How the Transfusion Architecture Empowers GPT-4o’s Creativity

OpenAI’s GPT-4o represents a new milestone in multimodal AI: a single model capable of generating fluent text and high-quality images […]

Anthropic’s Evaluation of Chain-of-Thought Faithfulness: Investigating Hidden Reasoning, Reward Hacks, and the Limitations of Verbal AI Transparency in Reasoning Models

A key advancement in AI capabilities is the development and use of chain-of-thought (CoT) reasoning, where models explain their steps […]

Meta AI Just Released Llama 4 Scout and Llama 4 Maverick: The First Set of Llama 4 Models

Today, Meta AI announced the release of its latest generation multimodal models, Llama 4, featuring two variants: Llama 4 Scout […]

Scalable Reinforcement Learning with Verifiable Rewards: Generative Reward Modeling for Unstructured, Multi-Domain Tasks

Reinforcement Learning with Verifiable Rewards (RLVR) has proven effective in enhancing LLMs’ reasoning and coding abilities, particularly in domains where […]