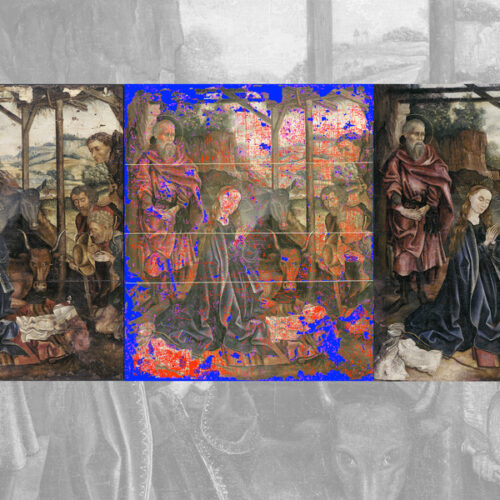

MIT graduate student Alex Kachkine once spent nine months meticulously restoring a damaged baroque Italian painting, which left him plenty […]

Category: Machine Learning

This AI Paper from Google Introduces a Causal Framework to Interpret Subgroup Fairness in Machine Learning Evaluations More Reliably

Understanding Subgroup Fairness in Machine Learning (ML). Evaluating fairness in machine learning often involves examining how models perform across different […]

MiniMax AI Releases MiniMax-M1: A 456B-Parameter Hybrid Model for Long-Context and Reinforcement Learning (RL) Tasks

The Challenge of Long-Context Reasoning in AI Models. Large reasoning models are not only designed to understand language but are […]

ReVisual-R1: An Open-Source 7B Multimodal Large Language Model (MLLM) that Achieves Long, Accurate and Thoughtful Reasoning

The Challenge of Multimodal Reasoning. Recent breakthroughs in text-based language models, such as DeepSeek-R1, have demonstrated that reinforcement learning (RL) can aid […]

HtFLlib: A Unified Benchmarking Library for Evaluating Heterogeneous Federated Learning Methods Across Modalities

AI institutions develop heterogeneous models for specific tasks but face data scarcity challenges during training. Traditional Federated Learning (FL) supports […]

Scientists once hoarded pre-nuclear steel; now we’re hoarding pre-AI content

A time capsule of human expression. Graham-Cumming is no stranger to tech preservation efforts. He’s a British software engineer and […]

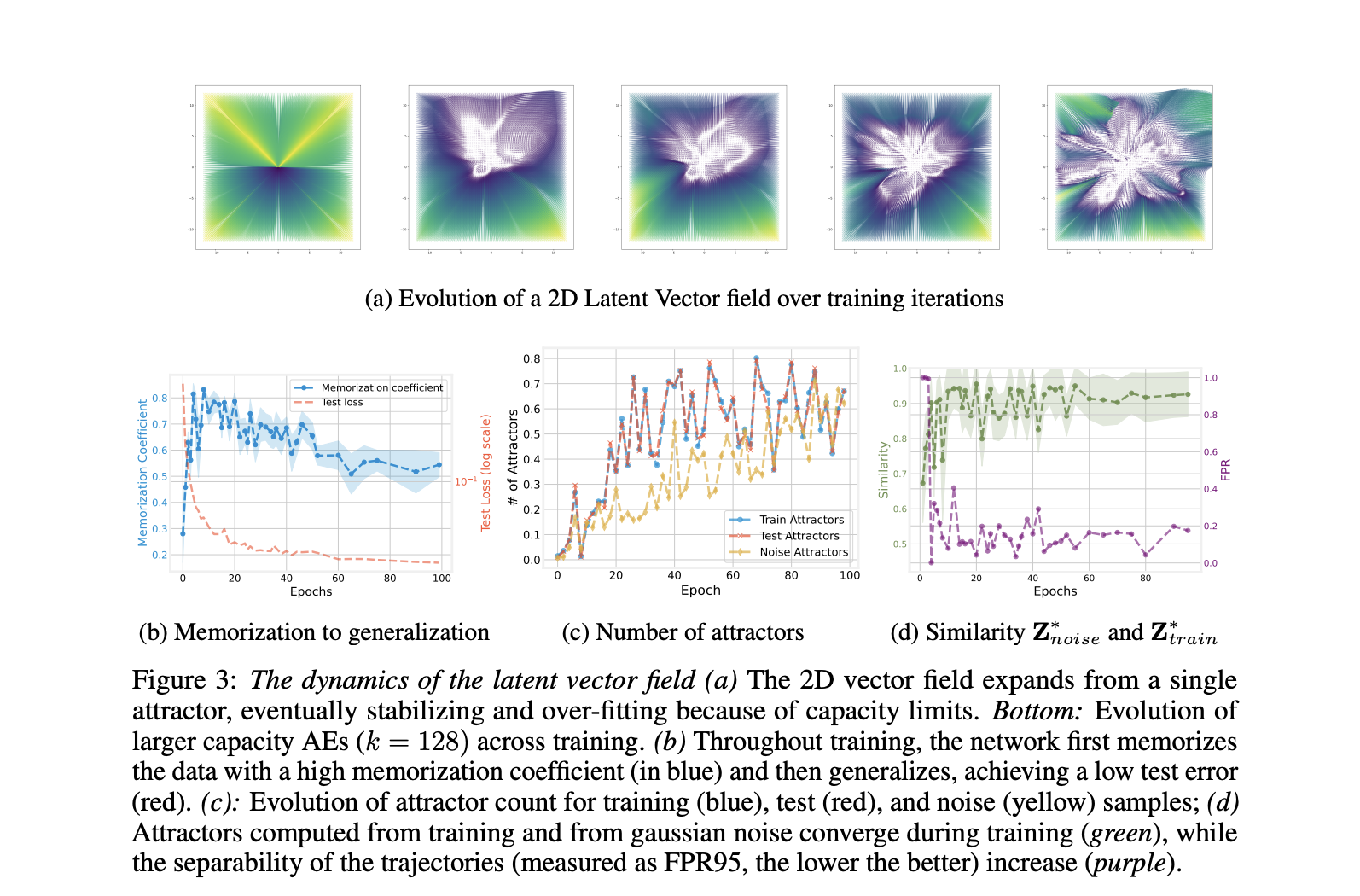

How Latent Vector Fields Reveal the Inner Workings of Neural Autoencoders

Autoencoders and the Latent Space. Neural networks are designed to learn compressed representations of high-dimensional data, and autoencoders (AEs) are […]

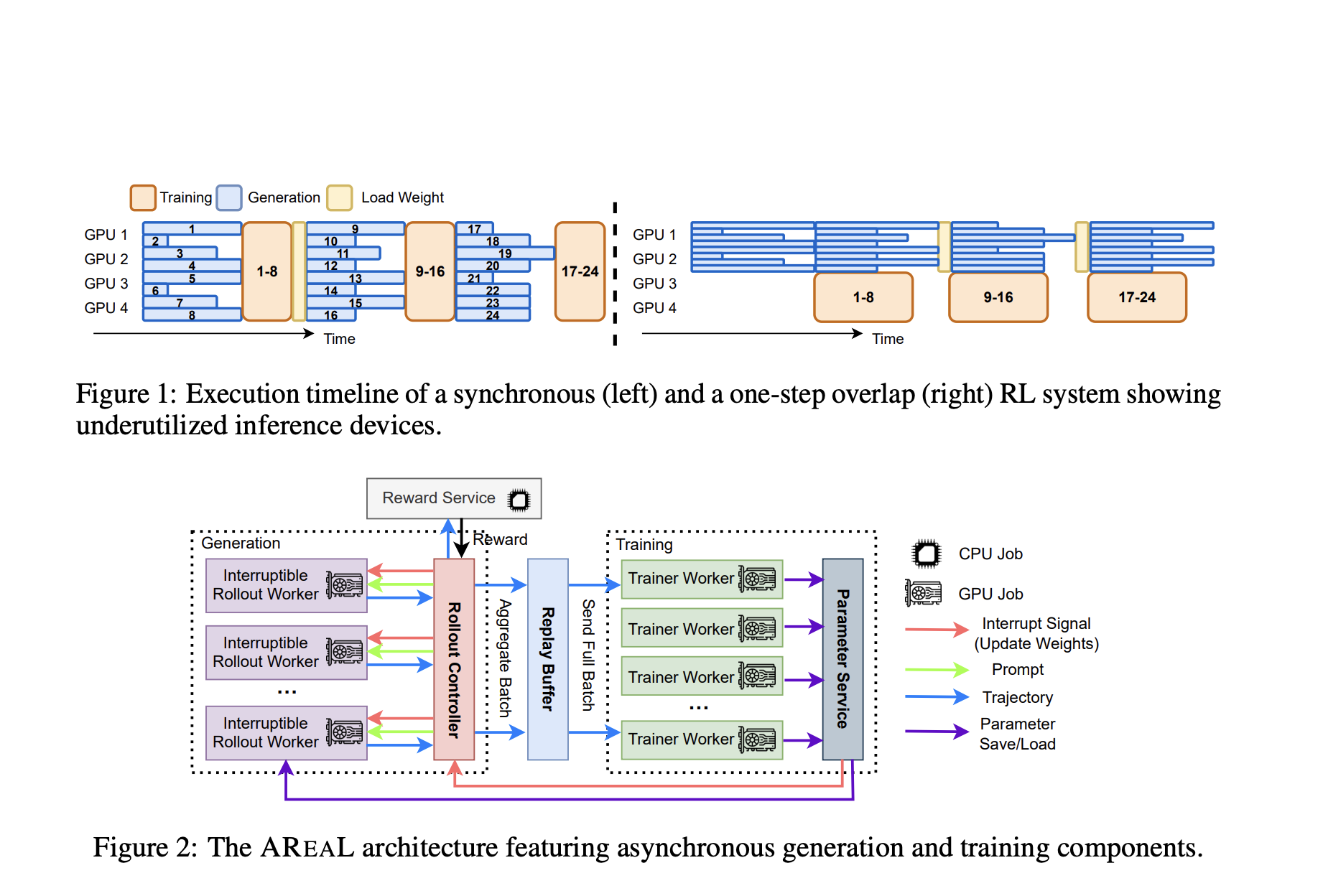

AREAL: Accelerating Large Reasoning Model Training with Fully Asynchronous Reinforcement Learning

Introduction: The Need for Efficient RL in LRMs. Reinforcement Learning (RL) is increasingly used to enhance LLMs, especially for reasoning […]

From Fine-Tuning to Prompt Engineering: Theory and Practice for Efficient Transformer Adaptation

The Challenge of Fine-Tuning Large Transformer Models. Self-attention enables transformer models to capture long-range dependencies in text, which is crucial […]

OpenAI weighs “nuclear option” of antitrust complaint against Microsoft

OpenAI executives have discussed filing an antitrust complaint with US regulators against Microsoft, the company’s largest investor, The Wall Street […]