AI-powered video generation is improving at a breathtaking pace. In a short time, we’ve gone from blurry, incoherent clips to […]

Category: Computer Vision

How Radial Attention Cuts Costs in Video Diffusion by 4.4× Without Sacrificing Quality

Introduction to Video Diffusion Models and Computational Challenges: Diffusion models have made impressive progress in generating high-quality, coherent videos, building […]

ByteDance Researchers Introduce VGR: A Novel Reasoning Multimodal Large Language Model (MLLM) with Enhanced Fine-Grained Visual Perception Capabilities

Why Multimodal Reasoning Matters for Vision-Language Tasks: Multimodal reasoning enables models to make informed decisions and answer questions by combining […]

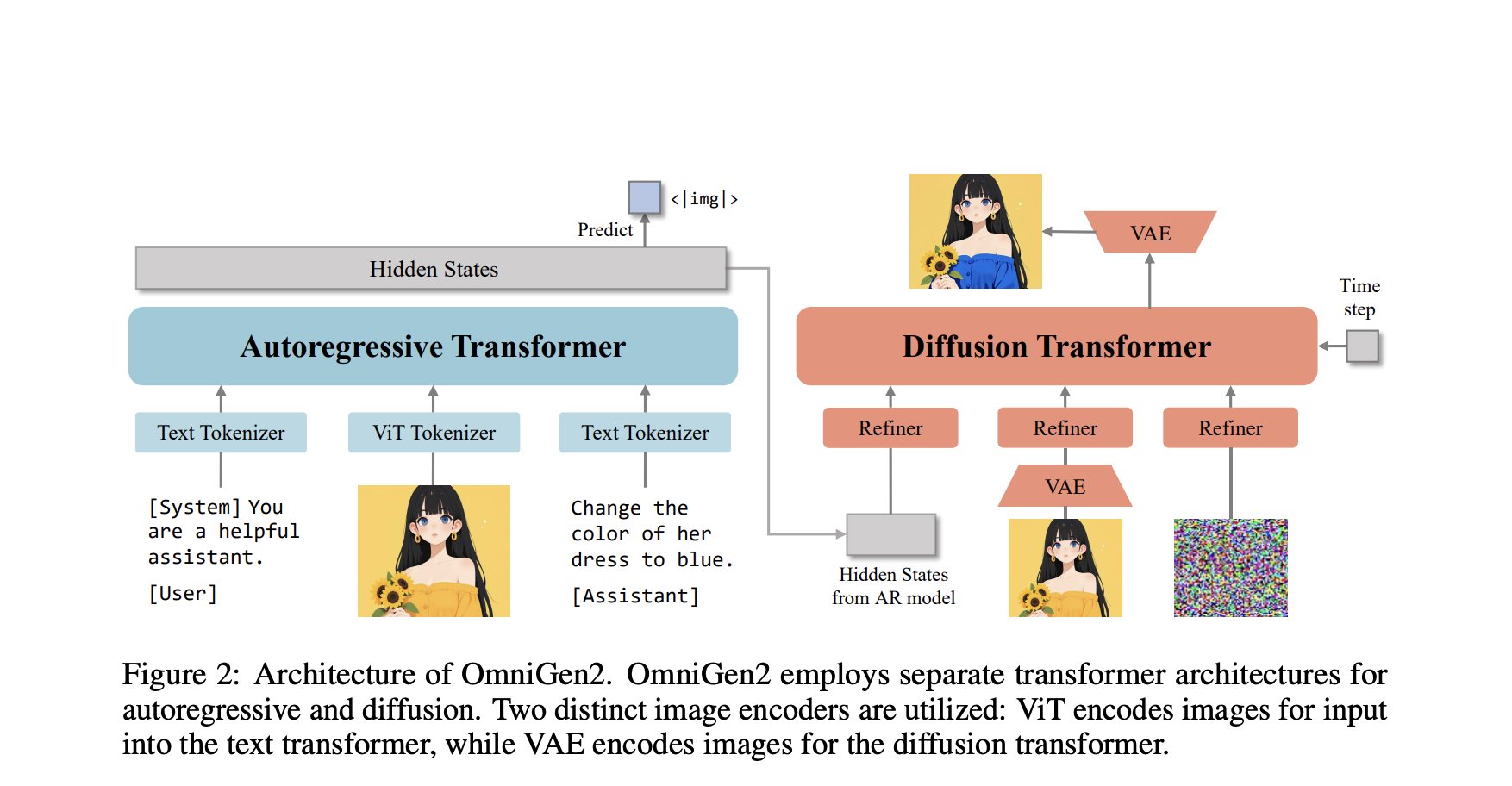

BAAI Launches OmniGen2: A Unified Diffusion and Transformer Model for Multimodal AI

Beijing Academy of Artificial Intelligence (BAAI) introduces OmniGen2, a next-generation, open-source multimodal generative model. Expanding on its predecessor OmniGen, the […]

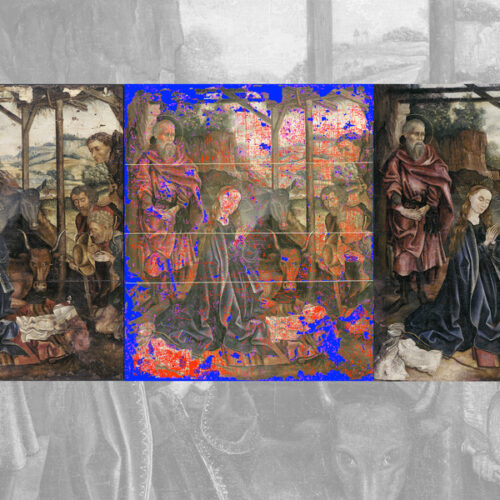

MIT student prints AI polymer masks to restore paintings in hours

MIT graduate student Alex Kachkine once spent nine months meticulously restoring a damaged baroque Italian painting, which left him plenty […]

EPFL Researchers Unveil FG2 at CVPR: A New AI Model That Slashes Localization Errors by 28% for Autonomous Vehicles in GPS-Denied Environments

Navigating the dense urban canyons of cities like San Francisco or New York can be a nightmare for GPS systems. […]

Highlighted at CVPR 2025: Google DeepMind’s ‘Motion Prompting’ Paper Unlocks Granular Video Control

Key Takeaways: Researchers from Google DeepMind, the University of Michigan, and Brown University have developed “Motion Prompting,” a new method […]

This AI Paper Introduces VLM-R³: A Multimodal Framework for Region Recognition, Reasoning, and Refinement in Visual-Linguistic Tasks

Multimodal reasoning ability helps machines perform tasks such as solving math problems embedded in diagrams, reading signs from photographs, or […]

VeBrain: A Unified Multimodal AI Framework for Visual Reasoning and Real-World Robotic Control

Bridging Perception and Action in Robotics: Multimodal Large Language Models (MLLMs) hold promise for enabling machines, such as robotic arms […]

Yandex Releases Alchemist: A Compact Supervised Fine-Tuning Dataset for Enhancing Text-to-Image (T2I) Model Quality

Despite the substantial progress in text-to-image (T2I) generation brought about by models such as DALL-E 3, Imagen 3, and Stable […]