Google AI has released TranslateGemma, a suite of open machine translation models built on Gemma 3 and targeted at 55 […]

Category: Language Model

NVIDIA AI Open-Sourced KVzap: A SOTA KV Cache Pruning Method that Delivers near-Lossless 2x-4x Compression

As context lengths move into tens and hundreds of thousands of tokens, the key-value (KV) cache in transformer decoders becomes […]

Google AI Releases MedGemma-1.5: The Latest Update to their Open Medical AI Models for Developers

Google Research has expanded its Health AI Developer Foundations program (HAI-DEF) with the release of MedGemma-1.5. The model is released […]

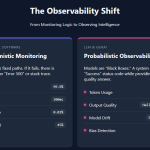

Understanding the Layers of AI Observability in the Age of LLMs

Artificial intelligence (AI) observability refers to the ability to understand, monitor, and evaluate AI systems by tracking their unique metrics, such […]

How This Agentic Memory Research Unifies Long Term and Short Term Memory for LLM Agents

How do you design an LLM agent that decides for itself what to store in long-term memory, what to […]

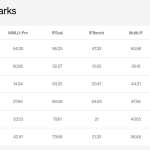

TII Abu Dhabi Released Falcon-H1R-7B: A New Reasoning Model Outperforming Others in Math and Coding with Only 7B Params and a 256k Context Window

Technology Innovation Institute (TII), Abu Dhabi, has released Falcon-H1R-7B, a 7B-parameter, reasoning-specialized model that matches or exceeds many […]

NVIDIA AI Released Nemotron Speech ASR: A New Open Source Transcription Model Designed from the Ground Up for Low-Latency Use Cases like Voice Agents

NVIDIA has released a new streaming English transcription model, Nemotron Speech ASR, built specifically for low-latency voice agents […]

Liquid AI Releases LFM2.5: A Compact AI Model Family for Real On-Device Agents

Liquid AI has introduced LFM2.5, a new generation of small foundation models built on the LFM2 architecture and focused on […]

LLM-Pruning Collection: A JAX-Based Repo for Structured and Unstructured LLM Compression

Zlab Princeton researchers have released LLM-Pruning Collection, a JAX-based repository that consolidates major pruning algorithms for large language models […]

Tencent Researchers Release Tencent HY-MT1.5: New Translation Models Featuring 1.8B and 7B Variants Designed for Seamless On-Device and Cloud Deployment

Tencent Hunyuan researchers have released HY-MT1.5, a multilingual machine translation family that targets both mobile devices and cloud systems with […]