In this tutorial, we will explore how to implement the LLM Arena-as-a-Judge approach to evaluate large language model outputs. Instead of assigning isolated numerical scores to each response, this method performs head-to-head comparisons between outputs to determine which one is better, based on criteria you define, such as helpfulness, clarity, or tone.
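To make the head-to-head idea concrete, here is a minimal sketch of a pairwise comparison loop. It is not DeepEval's implementation: `arena_compare`, the `Judge` type, and the toy `shorter_is_better` judge are hypothetical names used only for illustration, and the toy judge stands in for a real LLM call.

```python
from typing import Callable, Dict

# Hypothetical judge signature: (task, answer_a, answer_b) -> "A" or "B".
Judge = Callable[[str, str, str], str]


def arena_compare(task: str, contestants: Dict[str, str], judge: Judge) -> str:
    """Return the name of the contestant the judge prefers (two contestants only)."""
    if len(contestants) != 2:
        raise ValueError("this sketch compares exactly two contestants")
    (name_a, out_a), (name_b, out_b) = contestants.items()
    verdict = judge(task, out_a, out_b)
    if verdict not in ("A", "B"):
        raise ValueError(f"unexpected verdict: {verdict!r}")
    return name_a if verdict == "A" else name_b


def shorter_is_better(task: str, answer_a: str, answer_b: str) -> str:
    """Toy stand-in for an LLM judge: prefers the more concise answer."""
    return "A" if len(answer_a) <= len(answer_b) else "B"
```

The point of the sketch is the shape of the output: the judge returns a preference between two candidates rather than a separate score for each one.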

We’ll use OpenAI’s GPT-4.1 and Gemini 2.5 Pro to generate responses, and leverage GPT-5 as the judge to evaluate their outputs. For demonstration, we’ll work with a simple email support scenario, where the context is as follows:

Dear Support, I ordered a wireless mouse last week, but I received a keyboard instead. Can you please resolve this as soon as possible? Thank you, John

Installing the dependencies

    pip install deepeval google-genai openai

In this tutorial, you’ll need API keys from both OpenAI and Google.

- Google API Key: Visit https://aistudio.google.com/apikey to generate your key.

- OpenAI API Key: Go to https://platform.openai.com/settings/organization/api-keys and create a new key. If you’re a new user, you may need to add billing information and make a minimum payment of $5 to activate API access.

Since we’re using DeepEval for evaluation, and its judge model (GPT-5) runs through OpenAI, the OpenAI API key is required.

    import os
    from getpass import getpass

    os.environ["OPENAI_API_KEY"] = getpass('Enter OpenAI API Key: ')
    os.environ['GOOGLE_API_KEY'] = getpass('Enter Google API Key: ')

Defining the context

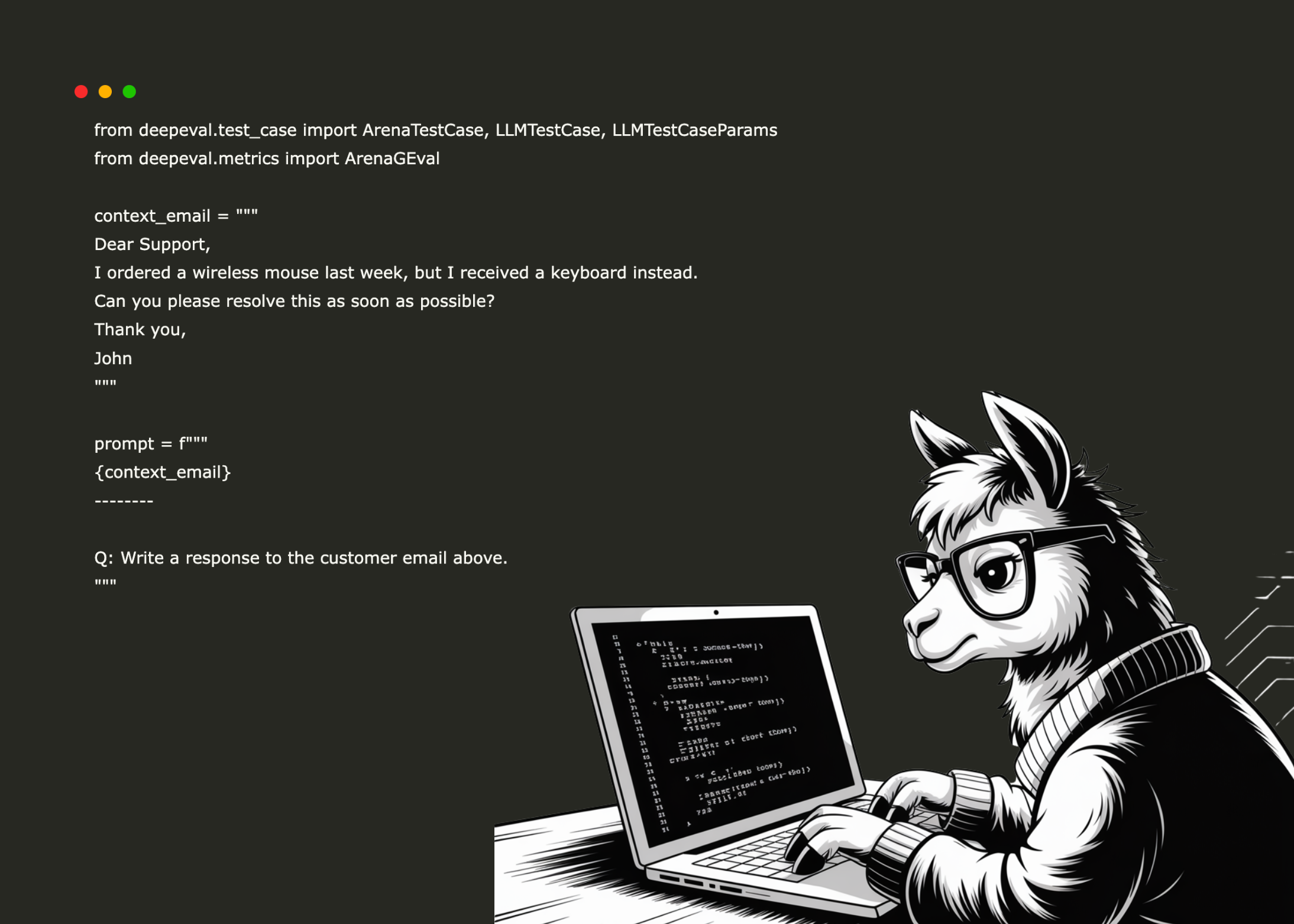

Next, we’ll define the context for our test case. In this example, we’re working with a customer support scenario where a user reports receiving the wrong product. We’ll create a context_email containing the original message from the customer and then build a prompt to generate a response based on that context.
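If you plan to evaluate more than one email, the context-plus-question layout described above can be wrapped in a small helper. This is only a sketch: `build_prompt` is a hypothetical name, and the separator and "Q:" prefix simply mirror the layout used in this tutorial.

```python
def build_prompt(context: str, question: str) -> str:
    """Place the context first, then a separator line, then the instruction."""
    # Leading/trailing whitespace is stripped so every email yields the same layout.
    return f"\n{context.strip()}\n--------\n\nQ: {question.strip()}\n"
```

Calling `build_prompt(context_email, "Write a response to the customer email above.")` would produce a prompt in the same shape for any customer message.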

    from deepeval.test_case import ArenaTestCase, LLMTestCase, LLMTestCaseParams
    from deepeval.metrics import ArenaGEval

    context_email = """
    Dear Support,
    I ordered a wireless mouse last week, but I received a keyboard instead.
    Can you please resolve this as soon as possible?
    Thank you,
    John
    """

    prompt = f"""
    {context_email}
    --------

    Q: Write a response to the customer email above.
    """

OpenAI Model Response

    from openai import OpenAI

    client = OpenAI()

    def get_openai_response(prompt: str, model: str = "gpt-4.1") -> str:
        response = client.chat.completions.create(
            model=model,
            messages=[
                {"role": "user", "content": prompt}
            ]
        )
        return response.choices[0].message.content

    openAI_response = get_openai_response(prompt=prompt)

Gemini Model Response

    from google import genai

    # Use a distinct name so this client does not overwrite the OpenAI `client` defined earlier.
    gemini_client = genai.Client()

    def get_gemini_response(prompt, model="gemini-2.5-pro"):
        response = gemini_client.models.generate_content(
            model=model,
            contents=prompt
        )
        return response.text

    geminiResponse = get_gemini_response(prompt=prompt)

Defining the Arena Test Case

Here, we set up the ArenaTestCase to compare the outputs of two models, GPT-4.1 (labelled "GPT-4" in the test case) and Gemini 2.5 Pro (labelled "Gemini"), for the same input prompt. Both models receive the same context_email, and their generated responses are stored in openAI_response and geminiResponse for evaluation.
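Before handing the stored responses to the test case, it can help to confirm that each contestant actually produced text, since a model call can come back empty. The check below is a hedged sketch: `require_outputs` is a hypothetical helper, not part of DeepEval.

```python
from typing import Dict, Optional


def require_outputs(outputs: Dict[str, Optional[str]]) -> Dict[str, str]:
    """Return stripped outputs, raising if any contestant has no text."""
    cleaned: Dict[str, str] = {}
    for name, text in outputs.items():
        if text is None or not text.strip():
            raise ValueError(f"contestant {name!r} produced no text")
        cleaned[name] = text.strip()
    return cleaned
```

For this tutorial, it could be called as `require_outputs({"GPT-4": openAI_response, "Gemini": geminiResponse})` so that an empty reply fails early rather than skewing the comparison.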

    a_test_case = ArenaTestCase(
        contestants={
            "GPT-4": LLMTestCase(
                input="Write a response to the customer email above.",
                context=[context_email],
                actual_output=openAI_response,
            ),
            "Gemini": LLMTestCase(
                input="Write a response to the customer email above.",
                context=[context_email],
                actual_output=geminiResponse,
            ),
        },
    )

Setting Up the Evaluation Metric

Here, we define the ArenaGEval metric named Support Email Quality. The evaluation focuses on empathy, professionalism, and clarity, aiming to identify the response that is understanding, polite, and concise. The evaluation considers the context, input, and model outputs, using GPT-5 as the evaluator with verbose logging enabled for better insights.

    metric = ArenaGEval(
        name="Support Email Quality",
        criteria=(
            "Select the response that best balances empathy, professionalism, and clarity. "
            "It should sound understanding, polite, and be succinct."
        ),
        evaluation_params=[
            LLMTestCaseParams.CONTEXT,
            LLMTestCaseParams.INPUT,
            LLMTestCaseParams.ACTUAL_OUTPUT,
        ],
        model="gpt-5",
        verbose_mode=True
    )

Running the Evaluation

    metric.measure(a_test_case)

Output:

    **************************************************
    Support Email Quality [Arena GEval] Verbose Logs
    **************************************************

    Criteria:
    Select the response that best balances empathy, professionalism, and clarity. It should sound understanding, polite, and be succinct.

    Evaluation Steps:
    [
        "From the Context and Input, identify the user's intent, needs, tone, and any constraints or specifics to be addressed.",
        "Verify the Actual Output directly responds to the Input, uses relevant details from the Context, and remains consistent with any constraints.",
        "Evaluate empathy: check whether the Actual Output acknowledges the user's situation/feelings from the Context/Input in a polite, understanding way.",
        "Evaluate professionalism and clarity: ensure respectful, blame-free tone and concise, easy-to-understand wording; choose the response that best balances empathy, professionalism, and succinct clarity."
    ]

    Winner: GPT-4

    Reason: GPT-4 delivers a single, concise, and professional email that directly addresses the context (acknowledges receiving a keyboard instead of the ordered wireless mouse), apologizes, and clearly outlines next steps (send the correct mouse and provide return instructions) with a polite verification step (requesting a photo). This best matches the request to write a response and balances empathy and clarity. In contrast, Gemini includes multiple options with meta commentary, which dilutes focus and fails to provide one clear reply; while empathetic and detailed (e.g., acknowledging frustration and offering prepaid labels), the multi-option format and an over-assertive claim of already locating the order reduce professionalism and succinct clarity compared to GPT-4.

    ======================================================================

The evaluation results show that GPT-4.1 (the contestant labelled GPT-4) outperformed Gemini 2.5 Pro in generating a support email that balanced empathy, professionalism, and clarity. Its response stood out because it was concise, polite, and action-oriented: it apologized for the error, confirmed the issue, and clearly explained the next steps, such as sending the correct item and providing return instructions. The tone was respectful and understanding, matching the user’s need for a clear and empathetic reply. In contrast, Gemini’s response, while empathetic and detailed, included multiple response options and unnecessary commentary, which reduced its clarity and professionalism. For this test case, the judge favored GPT-4.1’s more focused, customer-centric reply; a single comparison shows how the method works rather than settling which model is better overall.
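If you repeat the arena evaluation over several customer emails, the per-case winners can be summarized as win rates. The helper below is a sketch under that assumption: `win_rates` is a hypothetical name, and it expects a list of winner labels that you have collected yourself from each run.

```python
from collections import Counter
from typing import Dict, Iterable


def win_rates(winners: Iterable[str]) -> Dict[str, float]:
    """Map each contestant label to the fraction of comparisons it won."""
    counts = Counter(winners)
    total = sum(counts.values())
    if total == 0:
        return {}  # no comparisons recorded yet
    return {name: n / total for name, n in counts.items()}
```

Reporting a win rate over many cases gives a steadier picture than a single head-to-head result.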


Arham Islam

I am a Civil Engineering Graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various areas.