How do you build a single vision language action model that can control many different dual arm robots in the […]

Category: Vision Language Model

Liquid AI Releases LFM2.5: A Compact AI Model Family For Real On Device Agents

Liquid AI has introduced LFM2.5, a new generation of small foundation models built on the LFM2 architecture and focused at […]

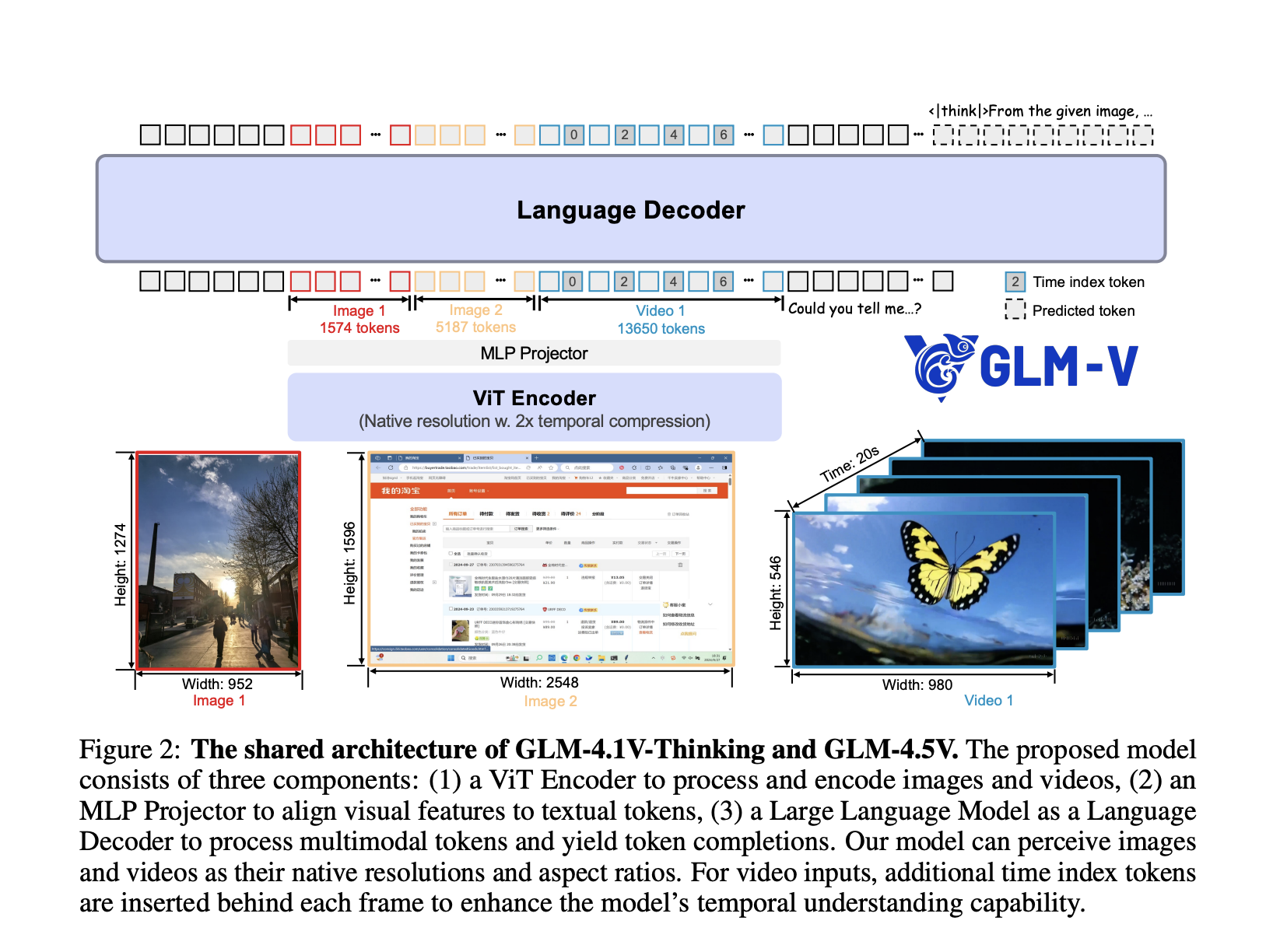

Zhipu AI Releases GLM-4.6V: A 128K Context Vision Language Model with Native Tool Calling

Zhipu AI has open sourced the GLM-4.6V series as a pair of vision language models that treat images, video and […]

Jina AI Releases Jina-VLM: A 2.4B Multilingual Vision Language Model Focused on Token Efficient Visual QA

Jina AI has released Jina-VLM, a 2.4B parameter vision language model that targets multilingual visual question answering and document understanding […]

Tencent Hunyuan Releases HunyuanOCR: a 1B Parameter End to End OCR Expert VLM

Tencent Hunyuan has released HunyuanOCR, a 1B parameter vision language model that is specialized for OCR and document understanding. The […]

Liquid AI’s LFM2-VL-3B Brings a 3B Parameter Vision Language Model (VLM) to Edge-Class Devices

Liquid AI released LFM2-VL-3B, a 3B parameter vision language model for image text to text tasks. It extends the LFM2-VL […]

Baidu’s PaddlePaddle Team Releases PaddleOCR-VL (0.9B): a NaViT-style + ERNIE-4.5-0.3B VLM Targeting End-to-End Multilingual Document Parsing

How do you convert complex, multilingual documents—dense layouts, small scripts, formulas, charts, and handwriting—into faithful structured Markdown/JSON with state-of-the-art accuracy […]

Alibaba’s Qwen AI Releases Compact Dense Qwen3-VL 4B/8B (Instruct & Thinking) With FP8 Checkpoints

Do you actually need a giant VLM when dense Qwen3-VL 4B/8B (Instruct/Thinking) with FP8 runs in low VRAM yet retains […]

Qwen Team Introduces Qwen-Image-Edit: The Image Editing Version of Qwen-Image with Advanced Capabilities for Semantic and Appearance Editing

In the domain of multimodal AI, instruction-based image editing models are transforming how users interact with visual content. Just released […]

Zhipu AI Releases GLM-4.5V: Versatile Multimodal Reasoning with Scalable Reinforcement Learning

Zhipu AI has officially released and open-sourced GLM-4.5V, a next-generation vision-language model (VLM) that significantly advances the state of open […]