In this tutorial, we explore how to build neural networks from scratch using Tinygrad while remaining fully hands-on with tensors, […]

Category: Large Language Model

Anthropic introduces cheaper, more powerful, more efficient Opus 4.5 model

Anthropic today released Opus 4.5, its flagship frontier model, and it brings improvements in coding performance, as well as some […]

Microsoft AI Releases Fara-7B: An Efficient Agentic Model for Computer Use

How do we safely let an AI agent handle real web tasks like booking, searching, and form filling directly on […]

NVIDIA AI Releases Nemotron-Elastic-12B: A Single AI Model that Gives You 6B/9B/12B Variants without Extra Training Cost

Why are AI dev teams still training and storing multiple large language models for different deployment needs when one elastic […]

AI trained on bacterial genomes produces never-before-seen proteins

The researchers argue that this setup lets Evo “link nucleotide-level patterns to kilobase-scale genomic context.” In other words, if you […]

Allen Institute for AI (AI2) Introduces Olmo 3: An Open Source 7B and 32B LLM Family Built on the Dolma 3 and Dolci Stack

Allen Institute for AI (AI2) is releasing Olmo 3 as a fully open model family that exposes the entire ‘model […]

Moonshot AI Releases Kimi K2 Thinking: An Impressive Thinking Model that can Execute up to 200–300 Sequential Tool Calls without Human Interference

How do we design AI systems that can plan, reason, and act over long sequences of decisions without constant human […]

Google AI Introduces Consistency Training for Safer Language Models Under Sycophantic and Jailbreak Style Prompts

How can consistency training help language models resist sycophantic prompts and jailbreak-style attacks while keeping their capabilities intact? Large […]

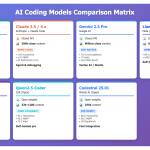

Comparing the Top 7 Large Language Models (LLMs)/Systems for Coding in 2025

Code-oriented large language models moved from autocomplete to software engineering systems. In 2025, leading models must fix real GitHub issues, […]

Cache-to-Cache (C2C): Direct Semantic Communication Between Large Language Models via KV-Cache Fusion

Can large language models collaborate without sending a single token of text? A team of researchers from Tsinghua University, Infinigence […]