Alibaba Cloud’s Qwen team has open-sourced Qwen3-TTS, a family of multilingual text-to-speech models that target three core tasks in one […]

Category: Editors Pick

Microsoft Releases VibeVoice-ASR: A Unified Speech-to-Text Model Designed to Handle 60-Minute Long-Form Audio in a Single Pass

Microsoft has released VibeVoice-ASR as part of the VibeVoice family of open-source frontier voice AI models. VibeVoice-ASR is described […]

FlashLabs Researchers Release Chroma 1.0: A 4B Real Time Speech Dialogue Model With Personalized Voice Cloning

Chroma 1.0 is a real-time speech-to-speech dialogue model that takes audio as input and returns audio as […]

Inworld AI Releases TTS-1.5 For Realtime, Production Grade Voice Agents

Inworld AI has introduced Inworld TTS-1.5, an upgrade to its TTS-1 family that targets realtime voice agents with strict constraints […]

Salesforce AI Introduces FOFPred: A Language-Driven Future Optical Flow Prediction Framework that Enables Improved Robot Control and Video Generation

The Salesforce AI research team presents FOFPred, a language-driven future optical flow prediction framework that connects large vision language models […]

How AutoGluon Enables Modern AutoML Pipelines for Production-Grade Tabular Models with Ensembling and Distillation

In this tutorial, we build a production-grade tabular machine learning pipeline using AutoGluon, taking a real-world mixed-type dataset from raw […]

Liquid AI Releases LFM2.5-1.2B-Thinking: a 1.2B Parameter Reasoning Model That Fits Under 1 GB On-Device

Liquid AI has released LFM2.5-1.2B-Thinking, a 1.2 billion parameter reasoning model that runs fully on device and fits in about […]

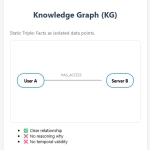

What are Context Graphs?

Knowledge Graphs and their limitations: With the rapid growth of AI applications, Knowledge Graphs (KGs) have emerged as a foundational […]

A Coding Guide to Anemoi-Style Semi-Centralized Agentic Systems Using Peer-to-Peer Critic Loops in LangGraph

In this tutorial, we demonstrate how a semi-centralized Anemoi-style multi-agent system works by letting two peer agents negotiate directly without […]

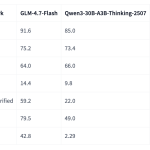

Zhipu AI Releases GLM-4.7-Flash: A 30B-A3B MoE Model for Efficient Local Coding and Agents

GLM-4.7-Flash is a new member of the GLM 4.7 family and targets developers who want strong coding and reasoning performance […]