Tencent’s Hunyuan team has introduced Hunyuan-A13B, a new open-source large language model built on a sparse Mixture-of-Experts (MoE) architecture. While […]

Category: Vision Language Model

Alibaba Qwen Team Releases Qwen-VLo: A Unified Multimodal Understanding and Generation Model

The Alibaba Qwen team has introduced Qwen-VLo, a new addition to its Qwen model family, designed to unify multimodal understanding […]

DeepSeek Researchers Open-Sourced a Personal Project named ‘nano-vLLM’: A Lightweight vLLM Implementation Built from Scratch

DeepSeek researchers just released a super cool personal project named ‘nano-vLLM’, a minimalistic and efficient implementation of the vLLM […]

Meta AI Releases Web-SSL: A Scalable and Language-Free Approach to Visual Representation Learning

In recent years, contrastive language-image models such as CLIP have established themselves as a default choice for learning vision representations, […]

Long-Context Multimodal Understanding No Longer Requires Massive Models: NVIDIA AI Introduces Eagle 2.5, a Generalist Vision-Language Model that Matches GPT-4o on Video Tasks Using Just 8B Parameters

In recent years, vision-language models (VLMs) have advanced significantly in bridging image, video, and textual modalities. Yet, a persistent limitation […]

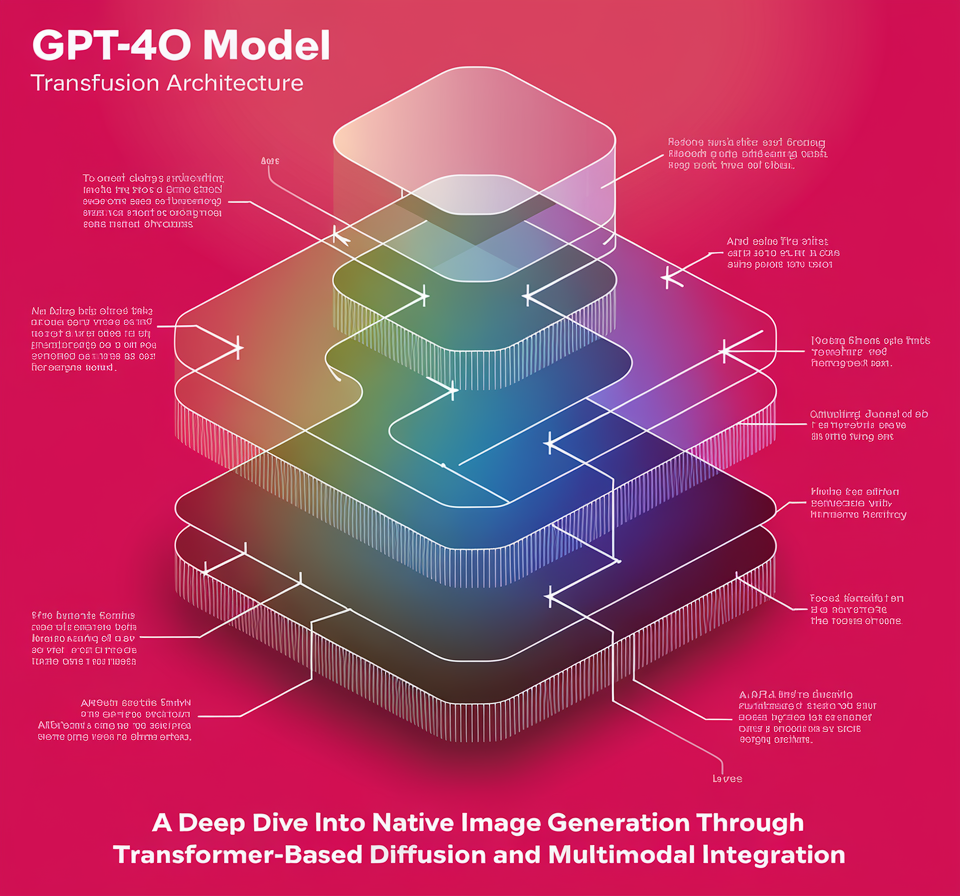

Transformer Meets Diffusion: How the Transfusion Architecture Empowers GPT-4o’s Creativity

OpenAI’s GPT-4o represents a new milestone in multimodal AI: a single model capable of generating fluent text and high-quality images […]

Google DeepMind Research Releases SigLIP2: A Family of New Multilingual Vision-Language Encoders with Improved Semantic Understanding, Localization, and Dense Features

Modern vision-language models have transformed how we process visual data, yet they often fall short when it comes to fine-grained […]

Google DeepMind Releases PaliGemma 2 Mix: New Instruction Vision Language Models Fine-Tuned on a Mix of Vision Language Tasks

Vision-language models (VLMs) have long promised to bridge the gap between image understanding and natural language processing. Yet, practical challenges […]

IBM AI Releases Granite-Vision-3.1-2B: A Small Vision Language Model with Super Impressive Performance on Various Tasks

The integration of visual and textual data in artificial intelligence presents a complex challenge. Traditional models often struggle to interpret […]

NVIDIA AI Releases Eagle2 Series Vision-Language Model: Achieving SOTA Results Across Various Multimodal Benchmarks

Vision-Language Models (VLMs) have significantly expanded AI’s ability to process multimodal information, yet they face persistent challenges. Proprietary models such […]