Artificial intelligence has come a long way, transforming the way we work, live, and interact. Yet, challenges remain. Many AI […]

Category: Open Source

PRIME: An Open-Source Solution for Online Reinforcement Learning with Process Rewards to Advance Reasoning Abilities of Language Models Beyond Imitation or Distillation

Large Language Models (LLMs) face significant scalability limitations in improving their reasoning capabilities through data-driven imitation, as better performance demands […]

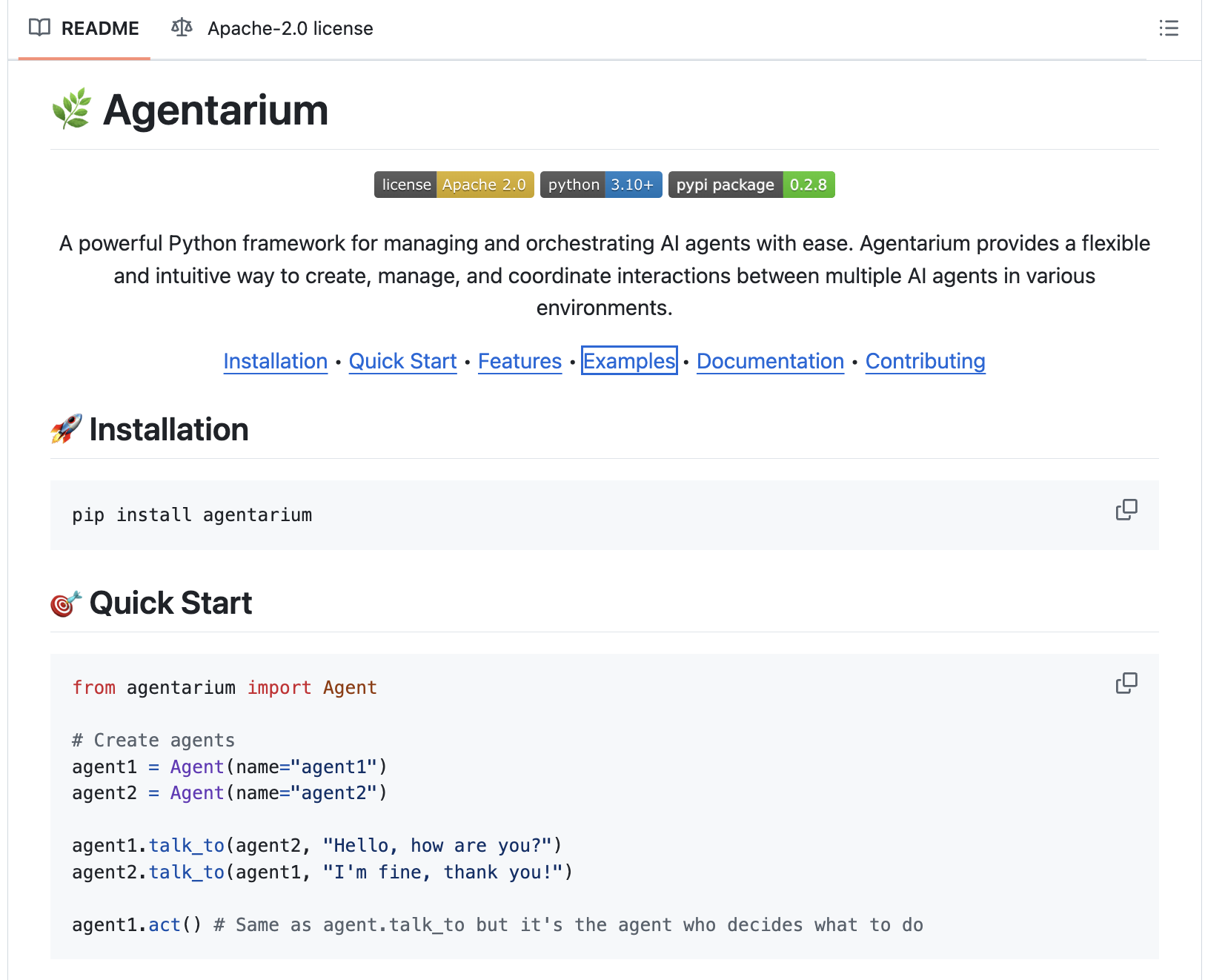

Meet Agentarium: A Powerful Python Framework for Managing and Orchestrating AI Agents

AI agents have become an integral part of modern industries, automating tasks and simulating complex systems. Despite their potential, managing […]

Hugging Face Just Released SmolAgents: A Smol Library that Lets You Run Powerful AI Agents in a Few Lines of Code

Creating intelligent agents has traditionally been a complex task, often requiring significant technical expertise and time. Developers encounter challenges like […]

Meet SemiKong: The World’s First Open-Source Semiconductor-Focused LLM

The semiconductor industry enables advancements in consumer electronics, automotive systems, and cutting-edge computing technologies. The production of semiconductors involves sophisticated […]

DeepSeek-AI Just Released DeepSeek-V3: A Strong Mixture-of-Experts (MoE) Language Model with 671B Total Parameters with 37B Activated for Each Token

The field of Natural Language Processing (NLP) has made significant strides with the development of large-scale language models (LLMs). However, […]

Qwen Team Releases QvQ: An Open-Weight Model for Multimodal Reasoning

Multimodal reasoning—the ability to process and integrate information from diverse data sources such as text, images, and video—remains a demanding […]

Microsoft Researchers Release AIOpsLab: An Open-Source Comprehensive AI Framework for AIOps Agents

The increasing complexity of cloud computing has brought both opportunities and challenges. Enterprises now depend heavily on intricate cloud-based infrastructures […]

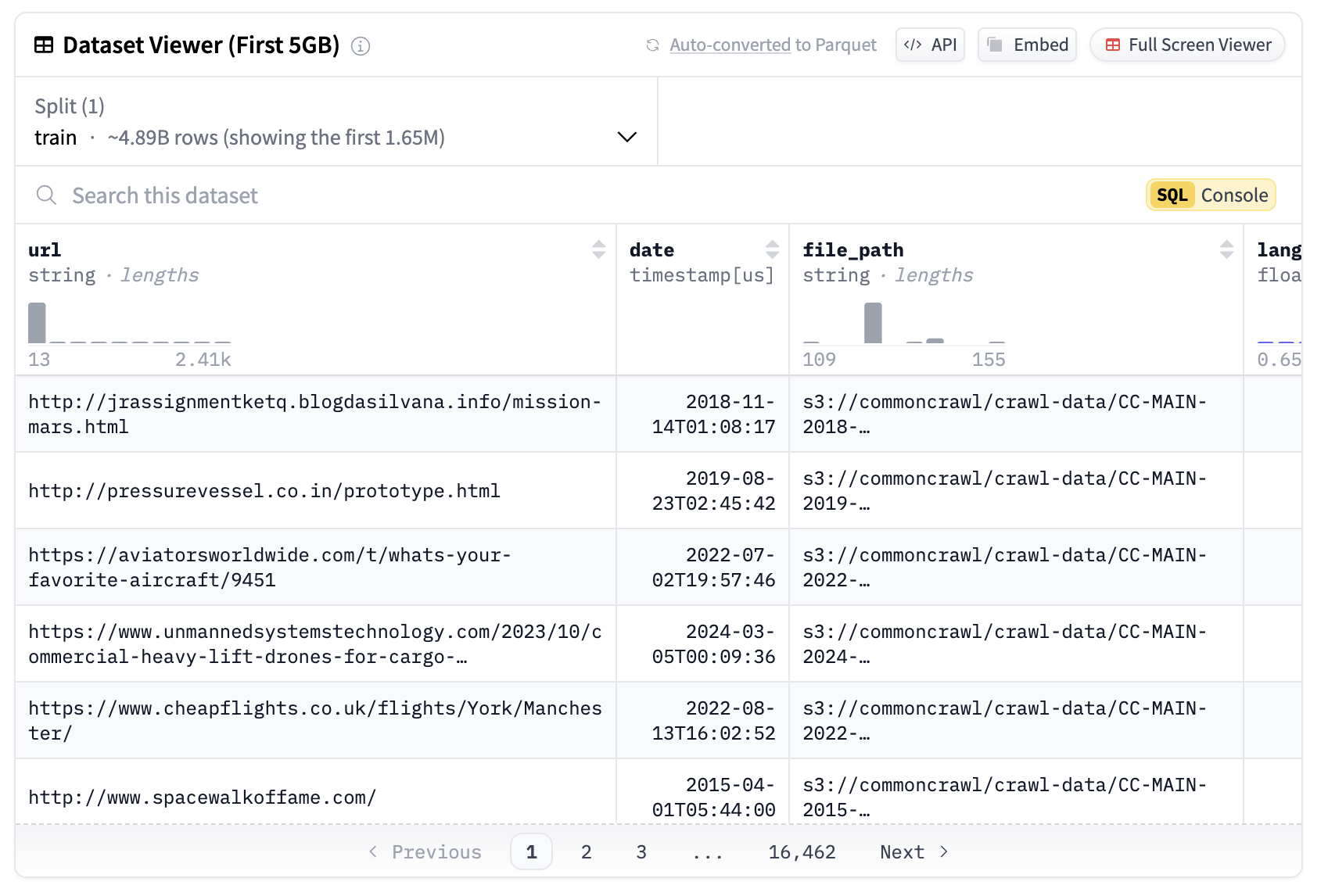

Meet FineFineWeb: An Open-Sourced Automatic Classification System for Fine-Grained Web Data

Multimodal Art Projection (M-A-P) researchers have introduced FineFineWeb, a large open-source automatic classification system for fine-grained web data. The project […]

LightOn and Answer.ai Release ModernBERT: A New Model Series that is a Pareto Improvement over BERT in both Speed and Accuracy

Since the release of BERT in 2018, encoder-only transformer models have been widely used in natural language processing (NLP) applications […]