The rapid advancements in artificial intelligence have opened new possibilities, but the associated costs often limit who can benefit from […]

Category: New Releases

Google AI Just Released TimesFM-2.0 (JAX and PyTorch) on Hugging Face with a Significant Boost in Accuracy and Maximum Context Length

Time-series forecasting plays a crucial role in various domains, including finance, healthcare, and climate science. However, achieving accurate predictions remains […]

Good Fire AI Open-Sources Sparse Autoencoders (SAEs) for Llama 3.1 8B and Llama 3.3 70B

Large language models (LLMs) like OpenAI’s GPT and Meta’s LLaMA have significantly advanced natural language understanding and text generation. However, […]

Meta AI Open-Sources LeanUniverse: A Machine Learning Library for Consistent and Scalable Lean4 Dataset Management

Managing datasets effectively has become a pressing challenge as machine learning (ML) continues to grow in scale and complexity. As […]

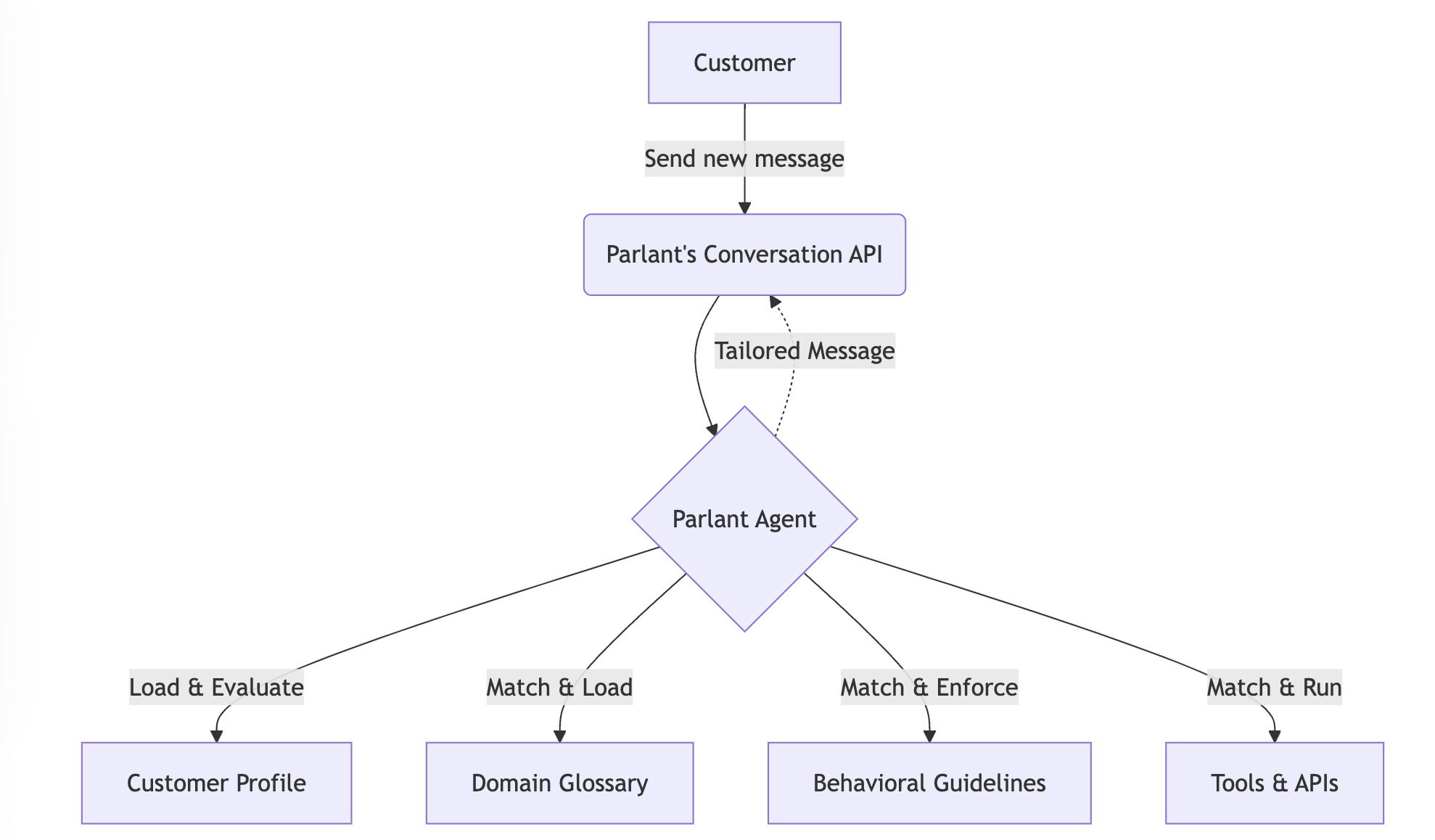

Introducing Parlant: The Open-Source Framework for Reliable AI Agents

The Problem: Why Current AI Agent Approaches Fail. If you have ever designed and implemented an LLM-based chatbot in […]

Meet KaLM-Embedding: A Series of Multilingual Embedding Models Built on Qwen2-0.5B and Released Under MIT

Multilingual applications and cross-lingual tasks are central to natural language processing (NLP) today, making robust embedding models essential. These models […]

AMD Researchers Introduce Agent Laboratory: An Autonomous LLM-based Framework Capable of Completing the Entire Research Process

Scientific research is often constrained by resource limitations and time-intensive processes. Tasks such as hypothesis testing, data analysis, and report […]

Microsoft AI Just Released Phi-4: A Small Language Model Available on Hugging Face Under the MIT License

Microsoft has released Phi-4, a compact and efficient small language model, on Hugging Face under the MIT license. This decision […]

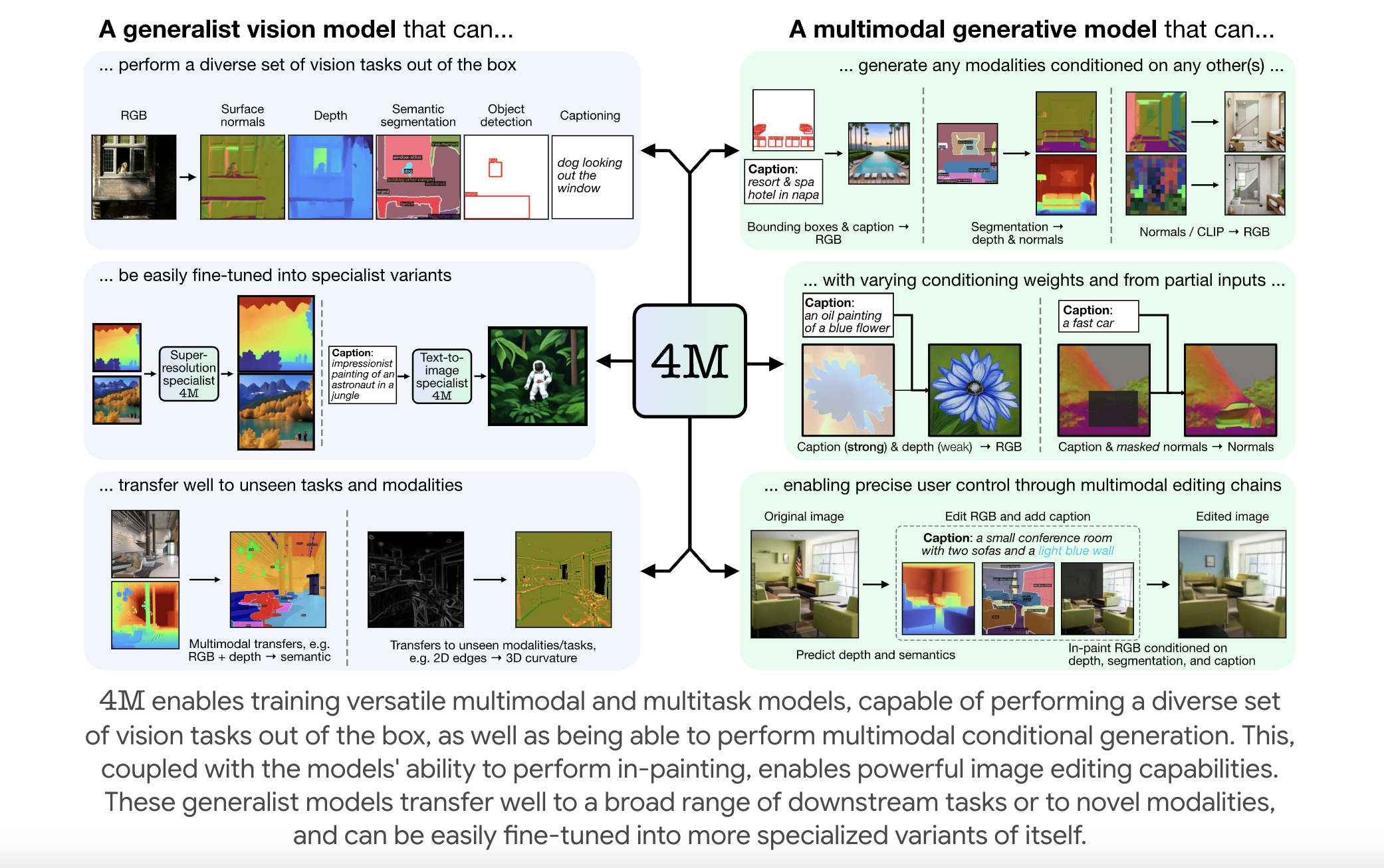

EPFL Researchers Release 4M: An Open-Source Training Framework to Advance Multimodal AI

Multimodal foundation models are becoming increasingly relevant in artificial intelligence, enabling systems to process and integrate multiple forms of data—such […]

Researchers from USC and Prime Intellect Released METAGENE-1: A 7B Parameter Autoregressive Transformer Model Trained on Over 1.5T DNA and RNA Base Pairs

In a time when global health faces persistent threats from emerging pandemics, the need for advanced biosurveillance and pathogen detection […]