Why Cross-Domain Reasoning Matters in Large Language Models (LLMs). Recent breakthroughs in LRMs, especially those trained using Long CoT techniques, […]

Category: Machine Learning

The résumé is dying, and AI is holding the smoking gun

Tales from the age of noise. As thousands of applications flood job posts, ‘hiring slop’ is kicking […]

Sakana AI Introduces Reinforcement-Learned Teachers (RLTs): Efficiently Distilling Reasoning in LLMs Using Small-Scale Reinforcement Learning

Sakana AI introduces a novel framework for reasoning-focused large language models (LLMs) with a focus on efficiency and reusability: Reinforcement-Learned Teachers […]

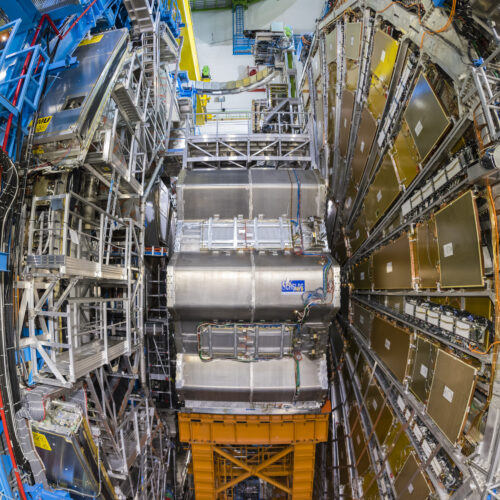

How a grad student got LHC data to play nice with quantum interference

New approach is already having an impact on the experiment’s plans for future work. The ATLAS particle detector of the […]

Why Apple’s Critique of AI Reasoning Is Premature

The debate around the reasoning capabilities of Large Reasoning Models (LRMs) has recently been invigorated by two prominent yet conflicting […]

Texas A&M Researchers Introduce a Two-Phase Machine Learning Method Named ‘ShockCast’ for High-Speed Flow Simulation with Neural Temporal Re-Meshing

Challenges in Simulating High-Speed Flows with Neural Solvers. Modeling high-speed fluid flows, such as those in supersonic or hypersonic regimes, […]

This AI Paper Introduces WINGS: A Dual-Learner Architecture to Prevent Text-Only Forgetting in Multimodal Large Language Models

Multimodal LLMs: Expanding Capabilities Across Text and Vision. Expanding large language models (LLMs) to handle multiple modalities, particularly images and […]

Mistral AI Releases Mistral Small 3.2: Enhanced Instruction Following, Reduced Repetition, and Stronger Function Calling for AI Integration

With the frequent release of new large language models (LLMs), there is a persistent quest to minimize repetitive errors, enhance […]

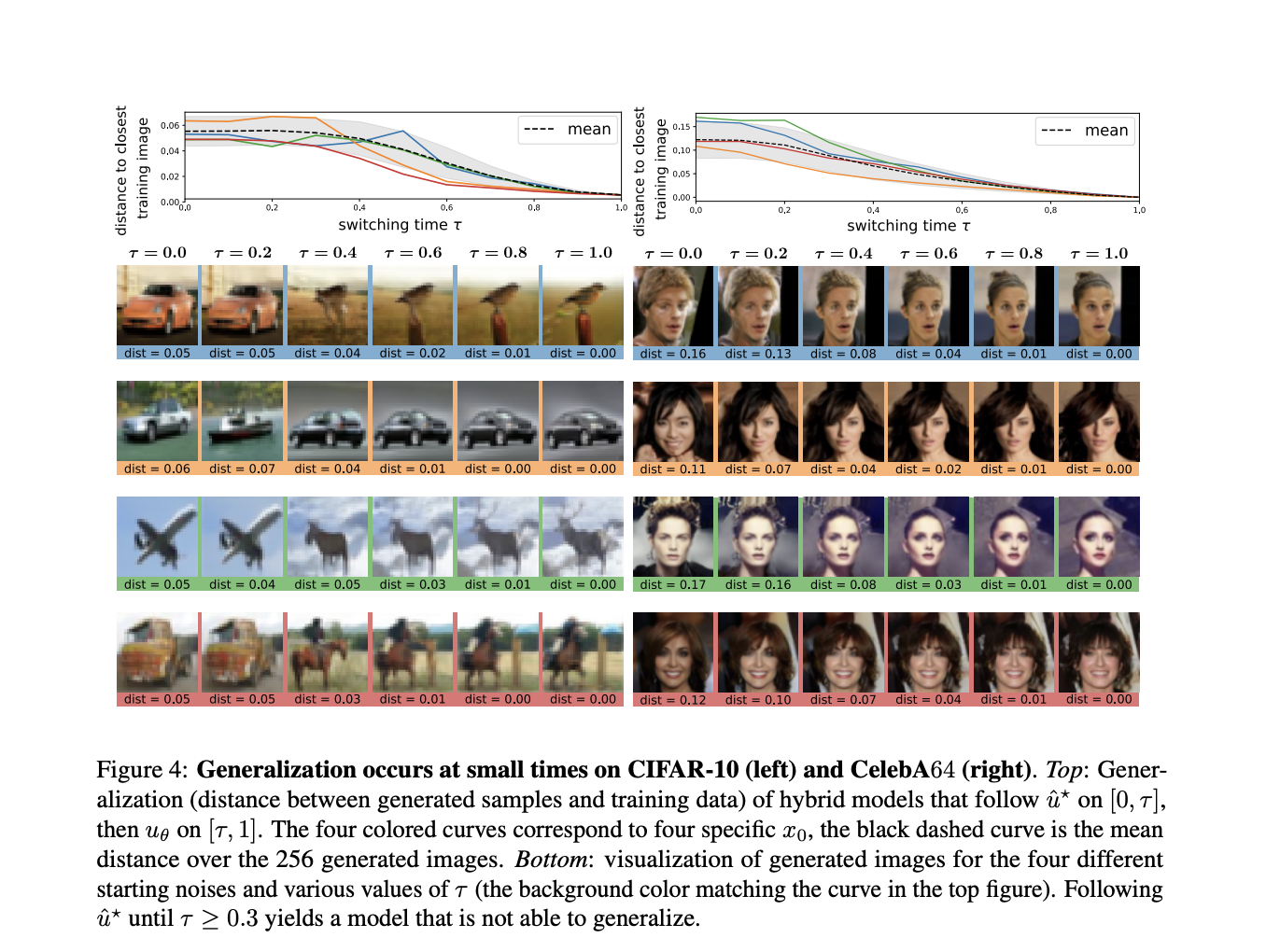

Why Generalization in Flow Matching Models Comes from Approximation, Not Stochasticity

Introduction: Understanding Generalization in Deep Generative Models. Deep generative models, including diffusion and flow matching, have shown outstanding performance in […]

Meta AI Researchers Introduced a Scalable Byte-Level Autoregressive U-Net Model That Outperforms Token-Based Transformers Across Language Modeling Benchmarks

Language modeling plays a foundational role in natural language processing, enabling machines to predict and generate text that resembles human […]