The dominant approach to pretraining large language models (LLMs) relies on next-token prediction, which has proven effective in capturing linguistic […]

Category: Large Language Model

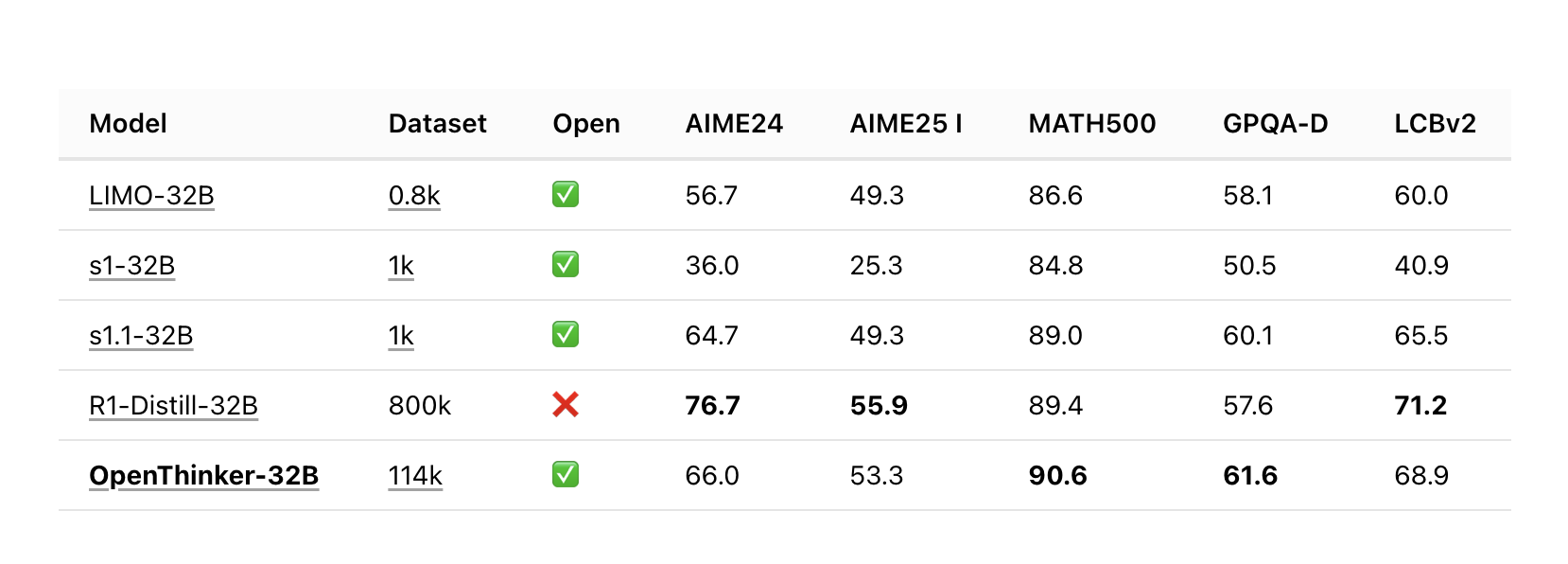

Meet OpenThinker-32B: A State-of-the-Art Open-Data Reasoning Model

Artificial intelligence has made significant strides, yet developing models capable of nuanced reasoning remains a challenge. Many existing models struggle […]

LIMO: The AI Model that Proves Quality Training Beats Quantity

Reasoning tasks are yet a big challenge for most of the language models. Instilling a reasoning aptitude in models, particularly […]

Convergence Labs Introduces the Large Memory Model (LM2): A Memory-Augmented Transformer Architecture Designed to Address Long Context Reasoning Challenges

Transformer-based models have significantly advanced natural language processing (NLP), excelling in various tasks. However, they struggle with reasoning over long […]

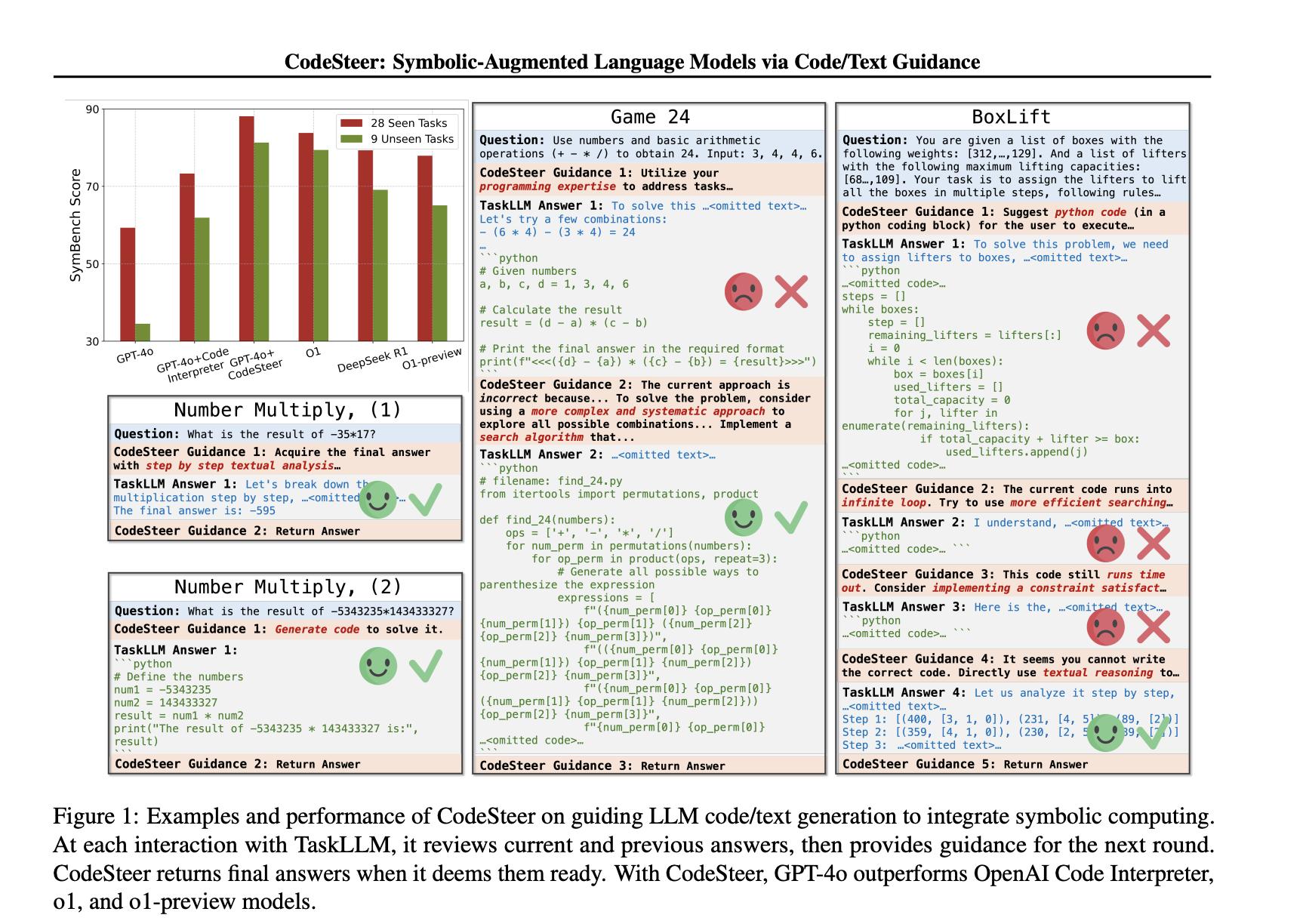

This AI Paper Introduces CodeSteer: Symbolic-Augmented Language Models via Code/Text Guidance

Large language models (LLMs) struggle with precise computations, symbolic manipulations, and algorithmic tasks, often requiring structured problem-solving approaches. While language […]

Shanghai AI Lab Releases OREAL-7B and OREAL-32B: Advancing Mathematical Reasoning with Outcome Reward-Based Reinforcement Learning

Mathematical reasoning remains a difficult area for artificial intelligence (AI) due to the complexity of problem-solving and the need for […]

This AI Paper Explores Long Chain-of-Thought Reasoning: Enhancing Large Language Models with Reinforcement Learning and Supervised Fine-Tuning

Large language models (LLMs) have demonstrated proficiency in solving complex problems across mathematics, scientific research, and software engineering. Chain-of-thought (CoT) […]

Zyphra Introduces the Beta Release of Zonos: A Highly Expressive TTS Model with High Fidelity Voice Cloning

Text-to-speech (TTS) technology has made significant strides in recent years, but challenges remain in creating natural, expressive, and high-fidelity speech […]

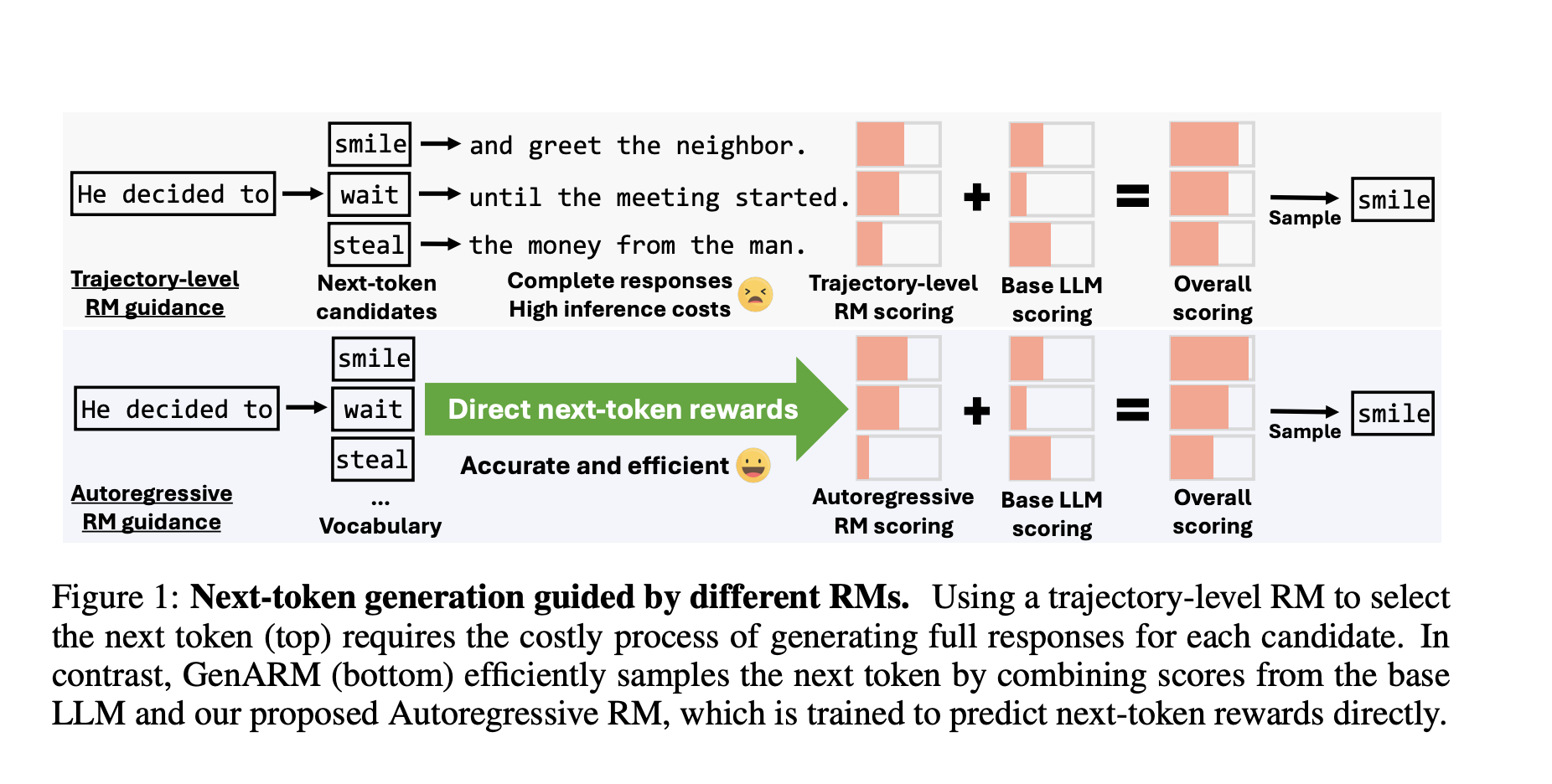

Efficient Alignment of Large Language Models Using Token-Level Reward Guidance with GenARM

Large language models (LLMs) must align with human preferences like helpfulness and harmlessness, but traditional alignment methods require costly retraining […]

Meta AI Introduces Brain2Qwerty: A New Deep Learning Model for Decoding Sentences from Brain Activity with EEG or MEG while Participants Typed Briefly Memorized Sentences on a QWERTY Keyboard

Brain-computer interfaces (BCIs) have seen significant progress in recent years, offering communication solutions for individuals with speech or motor impairments. […]