Transformers have revolutionized natural language processing as the foundation of large language models (LLMs), excelling in modeling long-range dependencies through […]

Category: Large Language Model

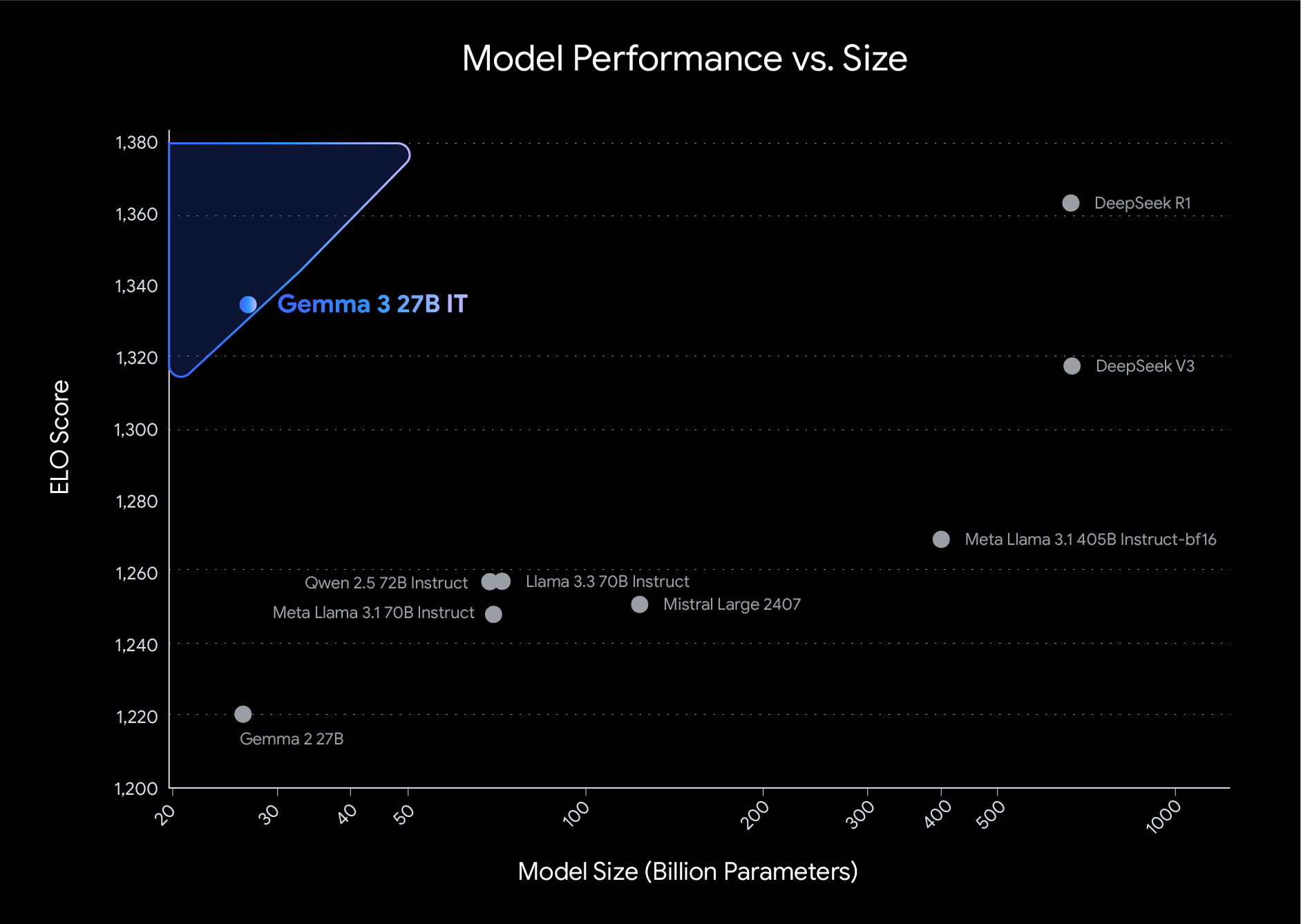

Google AI Releases Gemma 3: Lightweight Multimodal Open Models for Efficient and On‑Device AI

In the field of artificial intelligence, two persistent challenges remain. Many advanced language models require significant computational resources, which limits […]

Hugging Face Releases OlympicCoder: A Series of Open Reasoning AI Models that can Solve Olympiad-Level Programming Problems

In the realm of competitive programming, both human participants and artificial intelligence systems encounter a set of unique challenges. Many […]

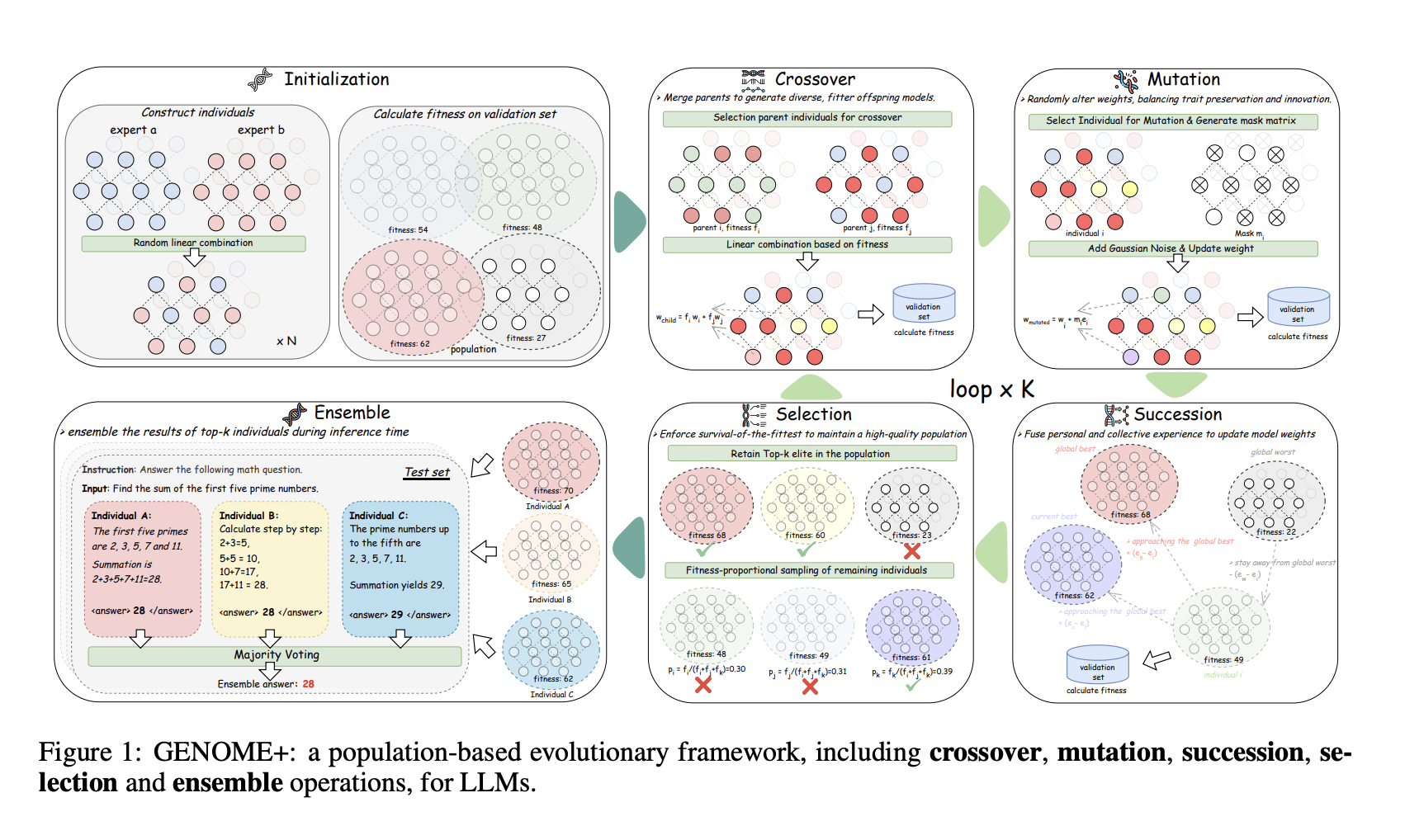

From Genes to Genius: Evolving Large Language Models with Nature’s Blueprint

Large language models (LLMs) have transformed artificial intelligence with their superior performance on various tasks, including natural language understanding and […]

Reka AI Open Sourced Reka Flash 3: A 21B General-Purpose Reasoning Model that was Trained from Scratch

In today’s dynamic AI landscape, developers and organizations face several practical challenges. High computational demands, latency issues, and limited access […]

Implementing Text-to-Speech (TTS) with BARK Using Hugging Face’s Transformers Library in a Google Colab Environment

Text-to-Speech (TTS) technology has evolved dramatically in recent years, from robotic-sounding voices to highly natural speech synthesis. BARK is an […]

Enhancing LLM Reasoning with Multi-Attempt Reinforcement Learning

Recent advancements in RL for LLMs, such as DeepSeek R1, have demonstrated that even simple question-answering tasks can significantly enhance […]

This AI Paper Introduces RL-Enhanced QWEN 2.5-32B: A Reinforcement Learning Framework for Structured LLM Reasoning and Tool Manipulation

Large reasoning models (LRMs) employ a deliberate, step-by-step thought process before arriving at a solution, making them suitable for complex […]

What if You Could Control How Long a Reasoning Model “Thinks”? CMU Researchers Introduce L1-1.5B: Reinforcement Learning Optimizes AI Thought Process

Reasoning language models have demonstrated the ability to enhance performance by generating longer chain-of-thought sequences during inference, effectively leveraging increased […]

This AI Paper Introduces CODI: A Self-Distillation Framework for Efficient and Scalable Chain-of-Thought Reasoning in LLMs

Chain-of-Thought (CoT) prompting enables large language models (LLMs) to perform step-by-step logical deductions in natural language. While this method has […]