Multimodal Large Language Models (MLLMs) have advanced the integration of visual and textual modalities, enabling progress in tasks such as […]

Category: Large Language Model

Researchers from Dataocean AI and Tsinghua University Introduces Dolphin: A Multilingual Automatic Speech Recognition ASR Model Optimized for Eastern Languages and Dialects

Automatic speech recognition (ASR) technologies have advanced significantly, yet notable disparities remain in their ability to accurately recognize diverse languages. […]

This AI Paper Introduces FASTCURL: A Curriculum Reinforcement Learning Framework with Context Extension for Efficient Training of R1-like Reasoning Models

Large language models have transformed how machines comprehend and generate text, especially in complex problem-solving areas like mathematical reasoning. These […]

Introduction to MCP: The Ultimate Guide to Model Context Protocol for AI Assistants

The Model Context Protocol (MCP) is an open standard (open-sourced by Anthropic) that defines a unified way to connect AI […]

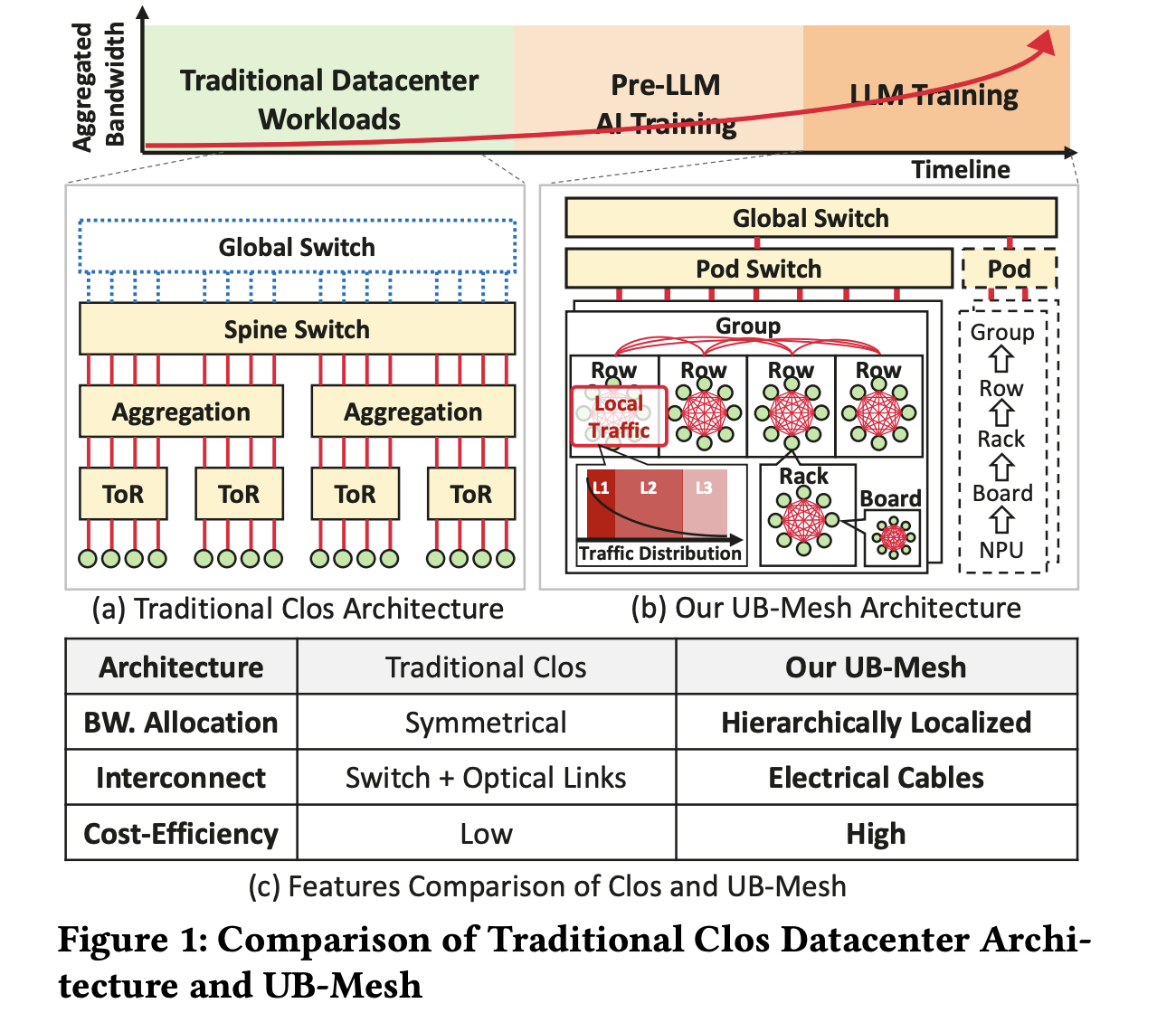

UB-Mesh: A Cost-Efficient, Scalable Network Architecture for Large-Scale LLM Training

As LLMs scale, their computational and bandwidth demands increase significantly, posing challenges for AI training infrastructure. Following scaling laws, LLMs […]

This AI Paper Unveils a Reverse-Engineered Simulator Model for Modern NVIDIA GPUs: Enhancing Microarchitecture Accuracy and Performance Prediction

GPUs are widely recognized for their efficiency in handling high-performance computing workloads, such as those found in artificial intelligence and […]

Snowflake Proposes ExCoT: A Novel AI Framework that Iteratively Optimizes Open-Source LLMs by Combining CoT Reasoning with off-Policy and on-Policy DPO, Relying Solely on Execution Accuracy as Feedback

Text-to-SQL translation, the task of transforming natural language queries into structured SQL statements, is essential for facilitating user-friendly database interactions. […]

Salesforce AI Introduce BingoGuard: An LLM-based Moderation System Designed to Predict both Binary Safety Labels and Severity Levels

The advancement of large language models (LLMs) has significantly influenced interactive technologies, presenting both benefits and challenges. One prominent issue […]

Open AI Releases PaperBench: A Challenging Benchmark for Assessing AI Agents’ Abilities to Replicate Cutting-Edge Machine Learning Research

The rapid progress in artificial intelligence (AI) and machine learning (ML) research underscores the importance of accurately evaluating AI agents’ […]

Meta AI Proposes Multi-Token Attention (MTA): A New Attention Method which Allows LLMs to Condition their Attention Weights on Multiple Query and Key Vectors

Large Language Models (LLMs) significantly benefit from attention mechanisms, enabling the effective retrieval of contextual information. Nevertheless, traditional attention methods […]