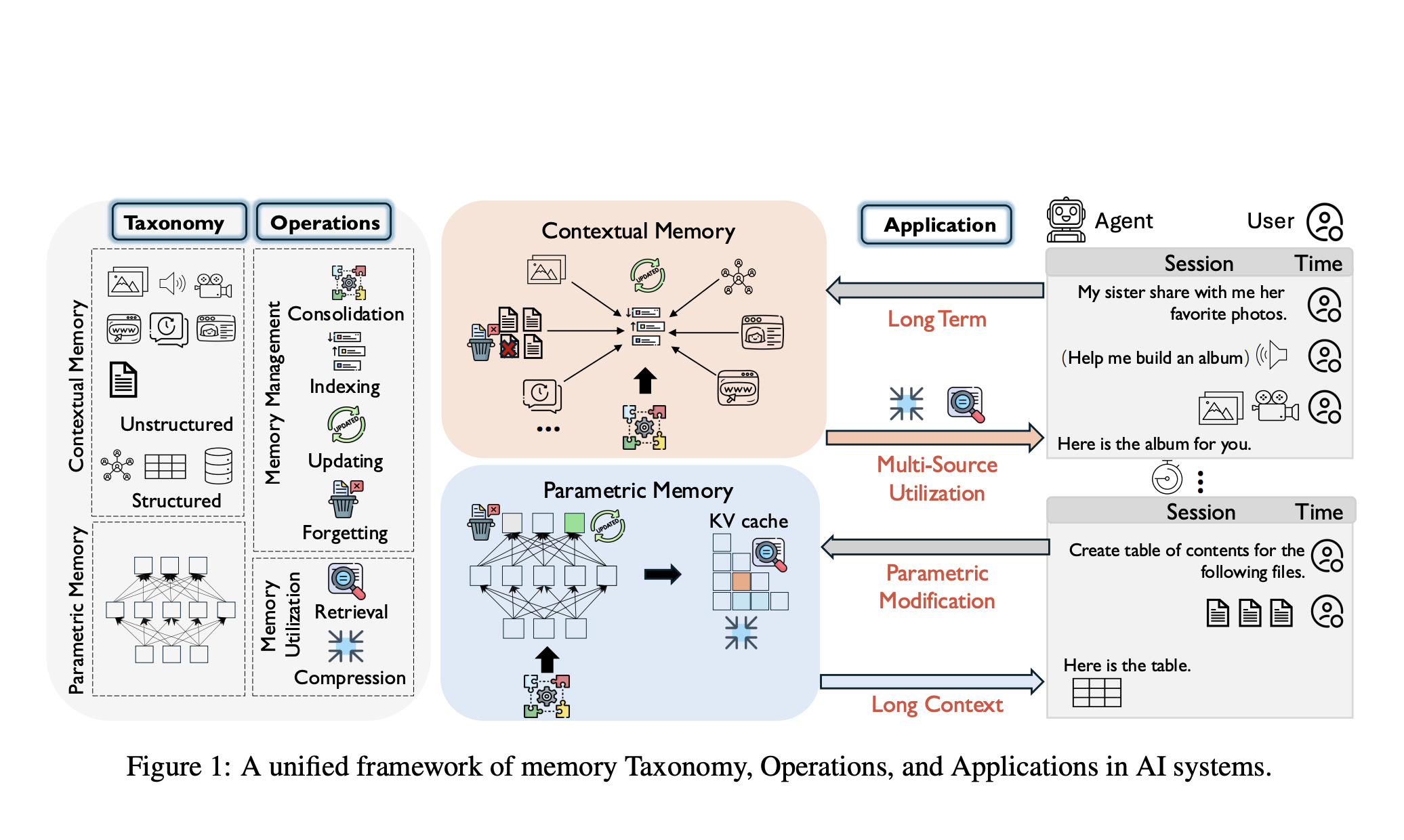

Memory plays a crucial role in LLM-based AI systems, supporting sustained, coherent interactions over time. While earlier surveys have explored […]

Category: Large Language Model

RWKV-X Combines Sparse Attention and Recurrent Memory to Enable Efficient 1M-Token Decoding with Linear Complexity

LLMs built on Transformer architectures face significant scaling challenges due to their quadratic complexity in sequence length when processing long-context […]

Scaling Reinforcement Learning Beyond Math: Researchers from NVIDIA AI and CMU Propose Nemotron-CrossThink for Multi-Domain Reasoning with Verifiable Reward Modeling

Large Language Models (LLMs) have demonstrated remarkable reasoning capabilities across diverse tasks, with Reinforcement Learning (RL) serving as a crucial […]

Multimodal Queries Require Multimodal RAG: Researchers from KAIST and DeepAuto.ai Propose UniversalRAG—A New Framework That Dynamically Routes Across Modalities and Granularities for Accurate and Efficient Retrieval-Augmented Generation

RAG has proven effective in enhancing the factual accuracy of LLMs by grounding their outputs in external, relevant information. However, […]

Google Researchers Advance Diagnostic AI: AMIE Now Matches or Outperforms Primary Care Physicians Using Multimodal Reasoning with Gemini 2.0 Flash

LLMs have shown impressive promise in conducting diagnostic conversations, particularly through text-based interactions. However, their evaluation and application have largely […]

IBM AI Releases Granite 4.0 Tiny Preview: A Compact Open-Language Model Optimized for Long-Context and Instruction Tasks

IBM has introduced a preview of Granite 4.0 Tiny, the smallest member of its upcoming Granite 4.0 family of language […]

From ELIZA to Conversation Modeling: Evolution of Conversational AI Systems and Paradigms

TL;DR: Conversational AI has transformed from ELIZA’s simple rule-based systems in the 1960s to today’s sophisticated platforms. The journey progressed […]

JetBrains Open Sources Mellum: A Developer-Centric Language Model for Code-Related Tasks

JetBrains has officially open-sourced Mellum, a purpose-built 4-billion-parameter language model tailored for software development tasks. Developed from the ground up, […]

Meta and Booz Allen Deploy Space Llama: Open-Source AI Heads to the ISS for Onboard Decision-Making

In a significant step toward enabling autonomous AI systems in space, Meta and Booz Allen Hamilton have announced the deployment […]

Training LLM Agents Just Got More Stable: Researchers Introduce StarPO-S and RAGEN to Tackle Multi-Turn Reasoning and Collapse in Reinforcement Learning

Large language models (LLMs) face significant challenges when trained as autonomous agents in interactive environments. Unlike static tasks, agent settings […]