LLM-Based Code Generation Faces a Verification Gap LLMs have shown strong performance in programming and are widely adopted in tools […]

Category: Large Language Model

DeepSeek Researchers Open-Sourced a Personal Project named ‘nano-vLLM’: A Lightweight vLLM Implementation Built from Scratch

The DeepSeek Researchers just released a super cool personal project named ‘nano-vLLM‘, a minimalistic and efficient implementation of the vLLM […]

Why Apple’s Critique of AI Reasoning Is Premature

The debate around the reasoning capabilities of Large Reasoning Models (LRMs) has been recently invigorated by two prominent yet conflicting […]

This AI Paper Introduces WINGS: A Dual-Learner Architecture to Prevent Text-Only Forgetting in Multimodal Large Language Models

Multimodal LLMs: Expanding Capabilities Across Text and Vision Expanding large language models (LLMs) to handle multiple modalities, particularly images and […]

Mistral AI Releases Mistral Small 3.2: Enhanced Instruction Following, Reduced Repetition, and Stronger Function Calling for AI Integration

With the frequent release of new large language models (LLMs), there is a persistent quest to minimize repetitive errors, enhance […]

Meta AI Researchers Introduced a Scalable Byte-Level Autoregressive U-Net Model That Outperforms Token-Based Transformers Across Language Modeling Benchmarks

Language modeling plays a foundational role in natural language processing, enabling machines to predict and generate text that resembles human […]

MiniMax AI Releases MiniMax-M1: A 456B Parameter Hybrid Model for Long-Context and Reinforcement Learning RL Tasks

The Challenge of Long-Context Reasoning in AI Models Large reasoning models are not only designed to understand language but are […]

ReVisual-R1: An Open-Source 7B Multimodal Large Language Model (MLLMs) that Achieves Long, Accurate and Thoughtful Reasoning

The Challenge of Multimodal Reasoning Recent breakthroughs in text-based language models, such as DeepSeek-R1, have demonstrated that RL can aid […]

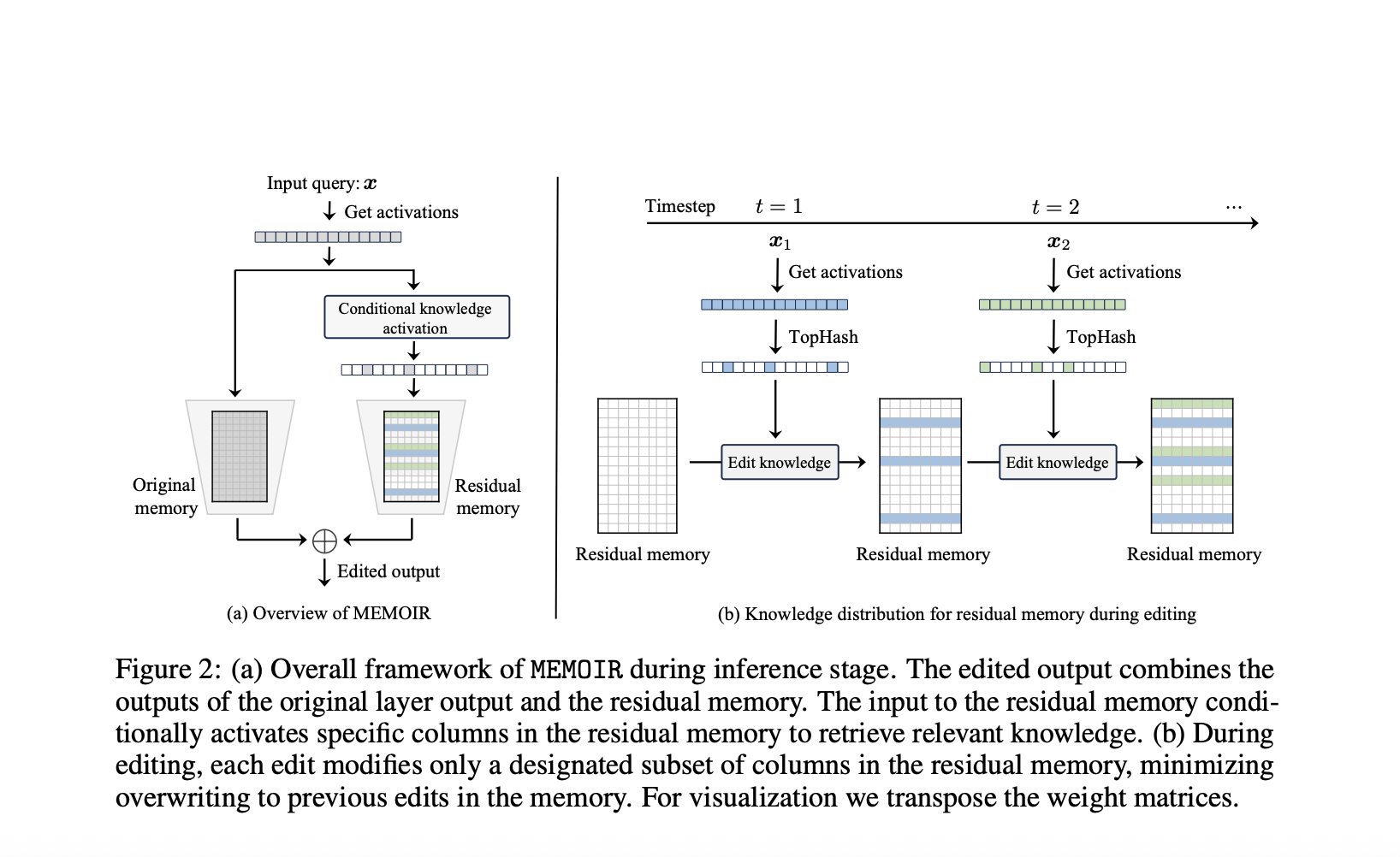

EPFL Researchers Introduce MEMOIR: A Scalable Framework for Lifelong Model Editing in LLMs

The Challenge of Updating LLM Knowledge LLMs have shown outstanding performance for various tasks through extensive pre-training on vast datasets. […]

Internal Coherence Maximization (ICM): A Label-Free, Unsupervised Training Framework for LLMs

Post-training methods for pre-trained language models (LMs) depend on human supervision through demonstrations or preference feedback to specify desired behaviors. […]