Alibaba Cloud’s Qwen team has open-sourced Qwen3-TTS, a family of multilingual text-to-speech models that target three core tasks in one […]

Category: Language Model

Microsoft Releases VibeVoice-ASR: A Unified Speech-to-Text Model Designed to Handle 60-Minute Long-Form Audio in a Single Pass

Microsoft has released VibeVoice-ASR as part of the VibeVoice family of open-source frontier voice AI models. VibeVoice-ASR is described […]

FlashLabs Researchers Release Chroma 1.0: A 4B Real Time Speech Dialogue Model With Personalized Voice Cloning

Chroma 1.0 is a real-time speech-to-speech dialogue model that takes audio as input and returns audio as […]

Inworld AI Releases TTS-1.5 For Realtime, Production Grade Voice Agents

Inworld AI has introduced Inworld TTS-1.5, an upgrade to its TTS-1 family that targets realtime voice agents with strict constraints […]

Liquid AI Releases LFM2.5-1.2B-Thinking: a 1.2B Parameter Reasoning Model That Fits Under 1 GB On-Device

Liquid AI has released LFM2.5-1.2B-Thinking, a 1.2-billion-parameter reasoning model that runs fully on-device and fits in about […]

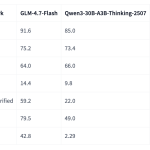

Zhipu AI Releases GLM-4.7-Flash: A 30B-A3B MoE Model for Efficient Local Coding and Agents

GLM-4.7-Flash is a new member of the GLM 4.7 family and targets developers who want strong coding and reasoning performance […]

How to Design a Fully Streaming Voice Agent with End-to-End Latency Budgets, Incremental ASR, LLM Streaming, and Real-Time TTS

In this tutorial, we build an end-to-end streaming voice agent that mirrors how modern low-latency conversational systems operate in real […]

Microsoft Research Releases OptiMind: A 20B Parameter Model that Turns Natural Language into Solver Ready Optimization Models

Microsoft Research has released OptiMind, an AI-based system that converts natural language descriptions of complex decision problems into mathematical […]

Nous Research Releases NousCoder-14B: A Competitive Olympiad Programming Model Post-Trained on Qwen3-14B via Reinforcement Learning

Nous Research has introduced NousCoder-14B, a competitive olympiad programming model that is post-trained on Qwen3-14B using reinforcement learning (RL) […]

NVIDIA Releases PersonaPlex-7B-v1: A Real-Time Speech-to-Speech Model Designed for Natural and Full-Duplex Conversations

NVIDIA researchers have released PersonaPlex-7B-v1, a full-duplex speech-to-speech conversational model that targets natural voice interactions with precise persona […]