In this tutorial, we build an Advanced OCR AI Agent in Google Colab using EasyOCR, OpenCV, and Pillow, running fully offline with GPU acceleration. The agent includes a preprocessing pipeline with contrast enhancement (CLAHE), denoising, sharpening, and adaptive thresholding to improve recognition accuracy. Beyond basic OCR, we filter results by confidence, generate text statistics, and perform pattern detection (emails, URLs, dates, phone numbers) along with simple language hints. The design also supports batch processing, visualization with bounding boxes, and structured exports for flexible usage. Check out the FULL CODES here.

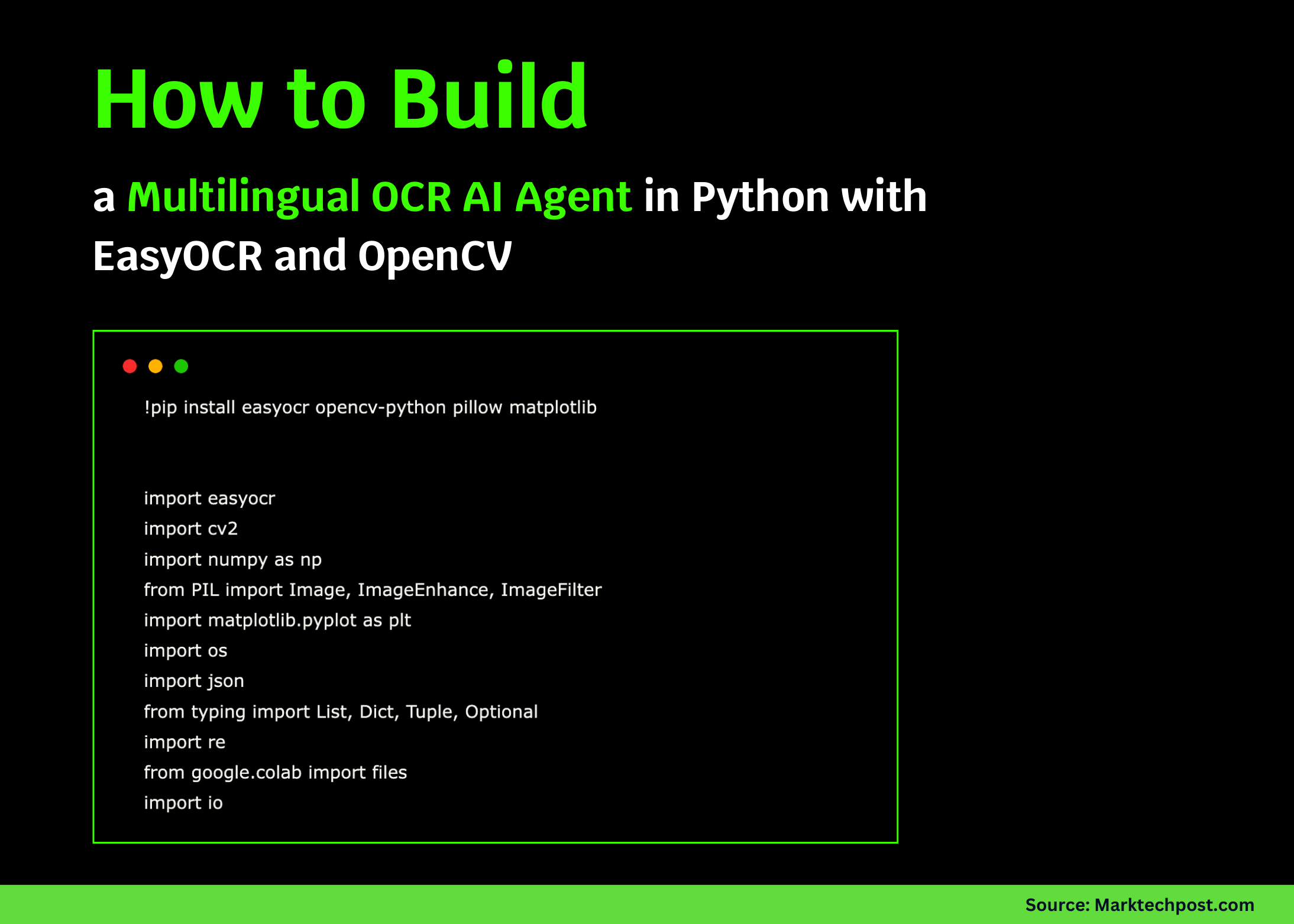

!pip install easyocr opencv-python pillow matplotlib import easyocr import cv2 import numpy as np from PIL import Image, ImageEnhance, ImageFilter import matplotlib.pyplot as plt import os import json from typing import List, Dict, Tuple, Optional import re from google.colab import files import ioWe start by installing the required libraries, EasyOCR, OpenCV, Pillow, and Matplotlib, to set up our environment. We then import all necessary modules so we can handle image preprocessing, OCR, visualization, and file operations seamlessly. Check out the FULL CODES here.

class AdvancedOCRAgent: """ Advanced OCR AI Agent with preprocessing, multi-language support, and intelligent text extraction capabilities. """ def __init__(self, languages: List[str] = ['en'], gpu: bool = True): """Initialize OCR agent with specified languages.""" print("🤖 Initializing Advanced OCR Agent...") self.languages = languages self.reader = easyocr.Reader(languages, gpu=gpu) self.confidence_threshold = 0.5 print(f"✅ OCR Agent ready! Languages: {languages}") def upload_image(self) -> Optional[str]: """Upload image file through Colab interface.""" print("📁 Upload your image file:") uploaded = files.upload() if uploaded: filename = list(uploaded.keys())[0] print(f"✅ Uploaded: {filename}") return filename return None def preprocess_image(self, image: np.ndarray, enhance: bool = True) -> np.ndarray: """Advanced image preprocessing for better OCR accuracy.""" if len(image.shape) == 3: gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY) else: gray = image.copy() if enhance: clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8,8)) gray = clahe.apply(gray) gray = cv2.fastNlMeansDenoising(gray) kernel = np.array([[-1,-1,-1], [-1,9,-1], [-1,-1,-1]]) gray = cv2.filter2D(gray, -1, kernel) binary = cv2.adaptiveThreshold( gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 11, 2 ) return binary def extract_text(self, image_path: str, preprocess: bool = True) -> Dict: """Extract text from image with advanced processing.""" print(f"🔍 Processing image: {image_path}") image = cv2.imread(image_path) if image is None: raise ValueError(f"Could not load image: {image_path}") if preprocess: processed_image = self.preprocess_image(image) else: processed_image = image results = self.reader.readtext(processed_image) extracted_data = { 'raw_results': results, 'filtered_results': [], 'full_text': '', 'confidence_stats': {}, 'word_count': 0, 'line_count': 0 } high_confidence_text = [] confidences = [] for (bbox, text, confidence) in results: if confidence >= self.confidence_threshold: extracted_data['filtered_results'].append({ 'text': text, 'confidence': confidence, 'bbox': bbox }) high_confidence_text.append(text) confidences.append(confidence) extracted_data['full_text'] = ' '.join(high_confidence_text) extracted_data['word_count'] = len(extracted_data['full_text'].split()) extracted_data['line_count'] = len(high_confidence_text) if confidences: extracted_data['confidence_stats'] = { 'mean': np.mean(confidences), 'min': np.min(confidences), 'max': np.max(confidences), 'std': np.std(confidences) } return extracted_data def visualize_results(self, image_path: str, results: Dict, show_bbox: bool = True): """Visualize OCR results with bounding boxes.""" image = cv2.imread(image_path) image_rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) plt.figure(figsize=(15, 10)) if show_bbox: plt.subplot(2, 2, 1) img_with_boxes = image_rgb.copy() for item in results['filtered_results']: bbox = np.array(item['bbox']).astype(int) cv2.polylines(img_with_boxes, [bbox], True, (255, 0, 0), 2) x, y = bbox[0] cv2.putText(img_with_boxes, f"{item['confidence']:.2f}", (x, y-10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 0, 0), 1) plt.imshow(img_with_boxes) plt.title("OCR Results with Bounding Boxes") plt.axis('off') plt.subplot(2, 2, 2) processed = self.preprocess_image(image) plt.imshow(processed, cmap='gray') plt.title("Preprocessed Image") plt.axis('off') plt.subplot(2, 2, 3) confidences = [item['confidence'] for item in results['filtered_results']] if confidences: plt.hist(confidences, bins=20, alpha=0.7, color='blue') plt.xlabel('Confidence Score') plt.ylabel('Frequency') plt.title('Confidence Score Distribution') plt.axvline(self.confidence_threshold, color='red', linestyle='--', label=f'Threshold: {self.confidence_threshold}') plt.legend() plt.subplot(2, 2, 4) stats = results['confidence_stats'] if stats: labels = ['Mean', 'Min', 'Max'] values = [stats['mean'], stats['min'], stats['max']] plt.bar(labels, values, color=['green', 'red', 'blue']) plt.ylabel('Confidence Score') plt.title('Confidence Statistics') plt.ylim(0, 1) plt.tight_layout() plt.show() def smart_text_analysis(self, text: str) -> Dict: """Perform intelligent analysis of extracted text.""" analysis = { 'language_detection': 'unknown', 'text_type': 'unknown', 'key_info': {}, 'patterns': [] } email_pattern = r'b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+.[A-Z|a-z]{2,}b' phone_pattern = r'(+d{1,3}[-.s]?)?(?d{3})?[-.s]?d{3}[-.s]?d{4}' url_pattern = r'http[s]?://(?:[a-zA-Z]|[0-9]|[$-_@.&+]|[!*\(\),]|(?:%[0-9a-fA-F][0-9a-fA-F]))+' date_pattern = r'bd{1,2}[/-]d{1,2}[/-]d{2,4}b' patterns = { 'emails': re.findall(email_pattern, text, re.IGNORECASE), 'phones': re.findall(phone_pattern, text), 'urls': re.findall(url_pattern, text, re.IGNORECASE), 'dates': re.findall(date_pattern, text) } analysis['patterns'] = {k: v for k, v in patterns.items() if v} if any(patterns.values()): if patterns.get('emails') or patterns.get('phones'): analysis['text_type'] = 'contact_info' elif patterns.get('urls'): analysis['text_type'] = 'web_content' elif patterns.get('dates'): analysis['text_type'] = 'document_with_dates' if re.search(r'[а-яё]', text.lower()): analysis['language_detection'] = 'russian' elif re.search(r'[àáâãäåæçèéêëìíîïñòóôõöøùúûüý]', text.lower()): analysis['language_detection'] = 'romance_language' elif re.search(r'[一-龯]', text): analysis['language_detection'] = 'chinese' elif re.search(r'[ひらがなカタカナ]', text): analysis['language_detection'] = 'japanese' elif re.search(r'[a-zA-Z]', text): analysis['language_detection'] = 'latin_based' return analysis def process_batch(self, image_folder: str) -> List[Dict]: """Process multiple images in batch.""" results = [] supported_formats = ('.png', '.jpg', '.jpeg', '.bmp', '.tiff') for filename in os.listdir(image_folder): if filename.lower().endswith(supported_formats): image_path = os.path.join(image_folder, filename) try: result = self.extract_text(image_path) result['filename'] = filename results.append(result) print(f"✅ Processed: {filename}") except Exception as e: print(f"❌ Error processing {filename}: {str(e)}") return results def export_results(self, results: Dict, format: str = 'json') -> str: """Export results in specified format.""" if format.lower() == 'json': output = json.dumps(results, indent=2, ensure_ascii=False) filename = 'ocr_results.json' elif format.lower() == 'txt': output = results['full_text'] filename = 'extracted_text.txt' else: raise ValueError("Supported formats: 'json', 'txt'") with open(filename, 'w', encoding='utf-8') as f: f.write(output) print(f"📄 Results exported to: {filename}") return filenameWe define an AdvancedOCRAgent that we initialize with multilingual EasyOCR and a GPU, and we set a confidence threshold to control output quality. We preprocess images (CLAHE, denoise, sharpen, adaptive threshold), extract text, visualize bounding boxes and confidence, run smart pattern/language analysis, support batch folders, and export results as JSON or TXT. Check out the FULL CODES here.

def demo_ocr_agent(): """Demonstrate the OCR agent capabilities.""" print("🚀 Advanced OCR AI Agent Demo") print("=" * 50) ocr = AdvancedOCRAgent(languages=['en'], gpu=True) image_path = ocr.upload_image() if image_path: try: results = ocr.extract_text(image_path, preprocess=True) print("n📊 OCR Results:") print(f"Words detected: {results['word_count']}") print(f"Lines detected: {results['line_count']}") print(f"Average confidence: {results['confidence_stats'].get('mean', 0):.2f}") print("n📝 Extracted Text:") print("-" * 30) print(results['full_text']) print("-" * 30) analysis = ocr.smart_text_analysis(results['full_text']) print(f"n🧠 Smart Analysis:") print(f"Detected text type: {analysis['text_type']}") print(f"Language hints: {analysis['language_detection']}") if analysis['patterns']: print(f"Found patterns: {list(analysis['patterns'].keys())}") ocr.visualize_results(image_path, results) ocr.export_results(results, 'json') except Exception as e: print(f"❌ Error: {str(e)}") else: print("No image uploaded. Please try again.") if __name__ == "__main__": demo_ocr_agent()We create a demo function that walks us through the full OCR workflow: we initialize the agent with English and GPU support, upload an image, preprocess it, and extract text with confidence stats. We then display the results, run smart text analysis to detect patterns and language hints, visualize bounding boxes and scores, and finally export everything into a JSON file.

In conclusion, we create a robust OCR pipeline that combines preprocessing, recognition, and analysis in a single Colab workflow. We enhance EasyOCR outputs using OpenCV techniques, visualize results for interpretability, and add confidence metrics for reliability. The agent is modular, allowing both single-image and batch processing, with results exported in JSON or text formats. This shows that open-source tools can deliver production-grade OCR without external APIs, while leaving room for domain-specific extensions like invoice or document parsing.

Check out the FULL CODES here. Feel free to check out our GitHub Page for Tutorials, Codes and Notebooks. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter.

Asif Razzaq

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.