Formal mathematical reasoning represents a significant frontier in artificial intelligence, addressing fundamental challenges in logic, computation, and problem-solving. This field focuses […]

Category: Staff

Camel-AI Open Sourced OASIS: A Next Generation Simulator for Realistic Social Media Dynamics with One Million Agents

Social media platforms have revolutionized human interaction, creating dynamic environments where millions of users exchange information, form communities, and influence […]

Collective Monte Carlo Tree Search (CoMCTS): A New Learning-to-Reason Method for Multimodal Large Language Models

In today’s world, multimodal large language models (MLLMs) are advanced systems that process and understand multiple input forms, such as […]

YuLan-Mini: A 2.42B Parameter Open Data-efficient Language Model with Long-Context Capabilities and Advanced Training Techniques

Large language models (LLMs) built using transformer architectures heavily depend on pre-training with large-scale data to predict sequential tokens. This […]

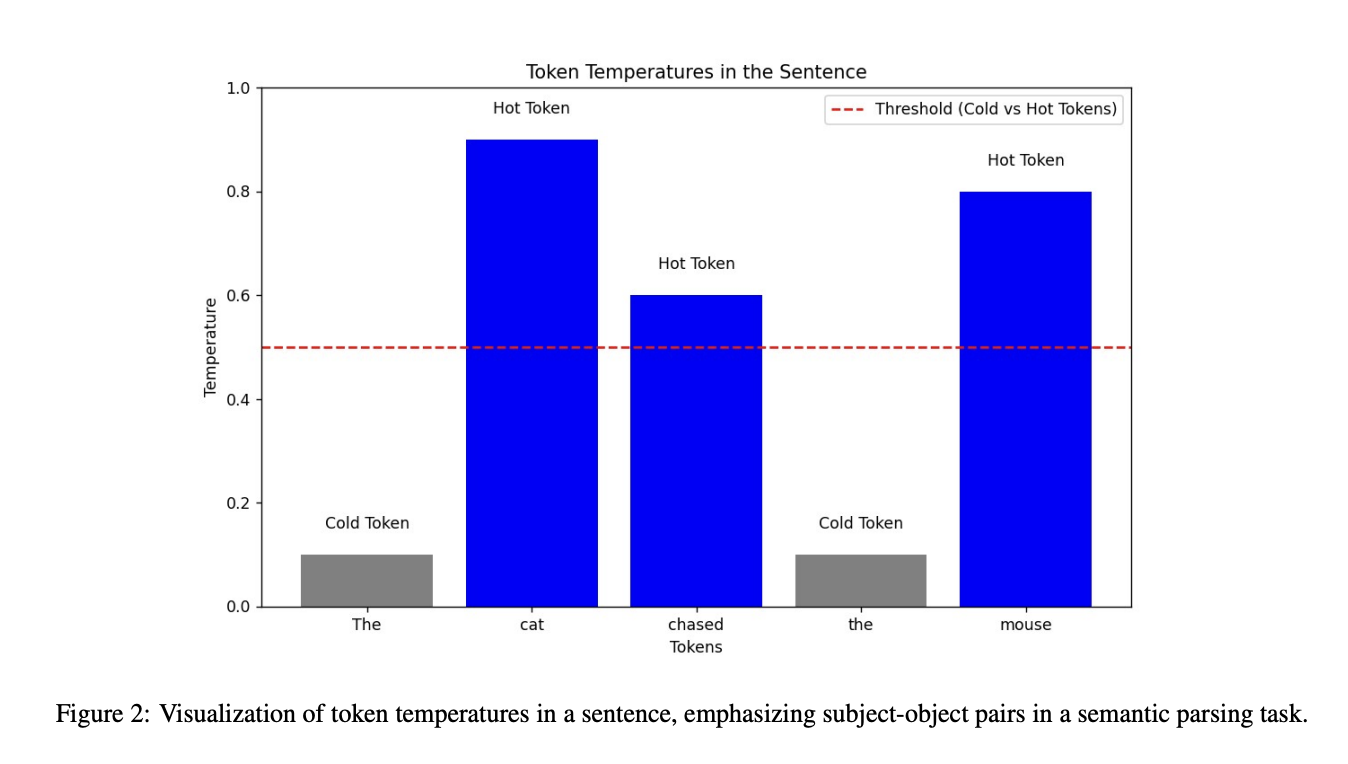

Quasar-1: A Rigorous Mathematical Framework for Temperature-Guided Reasoning in Language Models

Large language models (LLMs) encounter significant difficulties in performing efficient and logically consistent reasoning. Existing methods, such as CoT prompting, […]

Unveiling Privacy Risks in Machine Unlearning: Reconstruction Attacks on Deleted Data

Machine unlearning is driven by the need for data autonomy, allowing individuals to request the removal of their data’s influence […]

Meet SemiKong: The World’s First Open-Source Semiconductor-Focused LLM

The semiconductor industry enables advancements in consumer electronics, automotive systems, and cutting-edge computing technologies. The production of semiconductors involves sophisticated […]

Google DeepMind Introduces Differentiable Cache Augmentation: A Coprocessor-Enhanced Approach to Boost LLM Reasoning and Efficiency

Large language models (LLMs) are integral to solving complex problems across language processing, mathematics, and reasoning domains. Enhancements in computational […]

AWS Researchers Propose LEDEX: A Machine Learning Training Framework that Significantly Improves the Self-Debugging Capability of LLMs

Code generation using large language models (LLMs) has emerged as a critical research area, but generating accurate code for complex […]

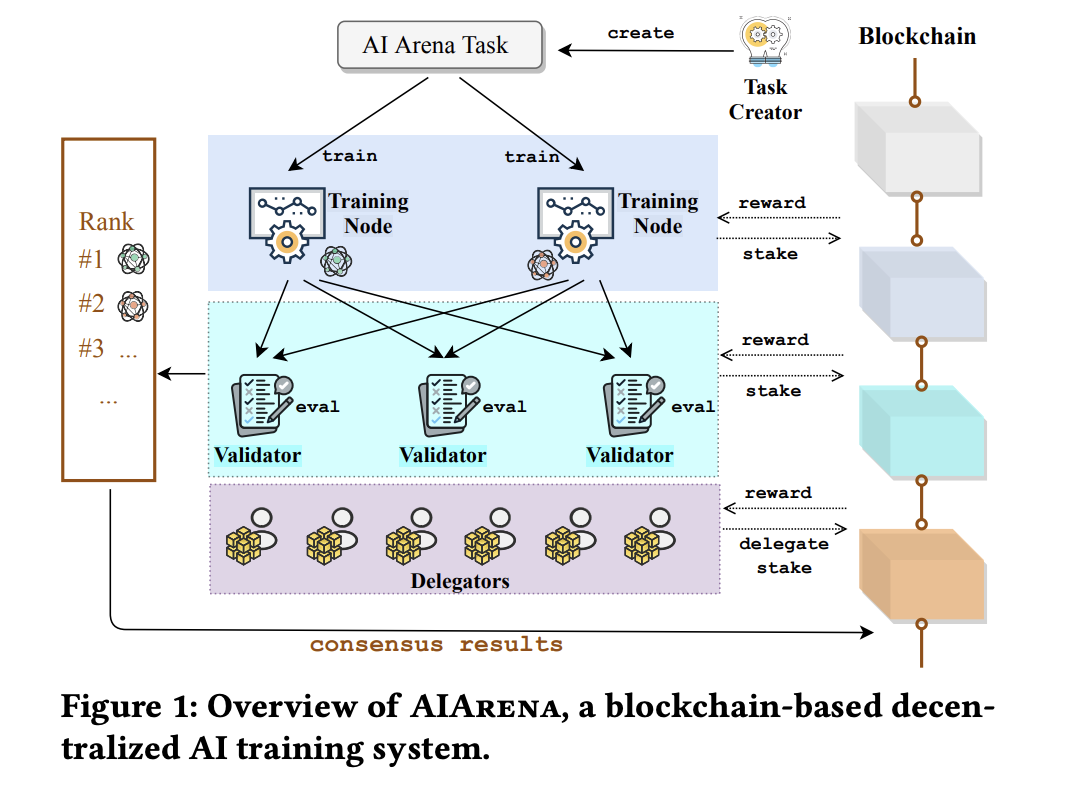

Meet AIArena: A Blockchain-Based Decentralized AI Training Platform

The monopolization of any industry into the hands of a few giant companies has always been a matter of concern. […]