Large Language Models (LLMs) aim to align with human preferences, ensuring reliable and trustworthy decision-making. However, these models acquire biases, […]

Category: Machine Learning

Researchers from SynthLabs and Stanford Propose Meta Chain-of-Thought (Meta-CoT): An AI Framework for Improving LLM Reasoning

Large Language Models (LLMs) have significantly advanced artificial intelligence, particularly in natural language understanding and generation. However, these models encounter […]

TabTreeFormer: Enhancing Synthetic Tabular Data Generation Through Tree-Based Inductive Biases and Dual-Quantization Tokenization

The generation of synthetic tabular data has become increasingly crucial in fields like healthcare and financial services, where privacy concerns […]

Microsoft AI Just Released Phi-4: A Small Language Model Available on Hugging Face Under the MIT License

Microsoft has released Phi-4, a compact and efficient small language model, on Hugging Face under the MIT license. This decision […]

This AI Paper Introduces Semantic Backpropagation and Gradient Descent: Advanced Methods for Optimizing Language-Based Agentic Systems

Language-based agentic systems represent a breakthrough in artificial intelligence, allowing for the automation of tasks such as question-answering, programming, and […]

PyG-SSL: An Open-Source Library for Graph Self-Supervised Learning Compatible with Various Deep Learning and Scientific Computing Backends

Complex domains like social media, molecular biology, and recommendation systems have graph-structured data that consists of nodes, edges, and their […]

DeepMind Research Introduces The FACTS Grounding Leaderboard: Benchmarking LLMs’ Ability to Ground Responses to Long-Form Input

Large language models (LLMs) have revolutionized natural language processing, enabling applications that range from automated writing to complex decision-making aids. […]

Researchers from Caltech, Meta FAIR, and NVIDIA AI Introduce Tensor-GaLore: A Novel Method for Efficient Training of Neural Networks with Higher-Order Tensor Weights

Advancements in neural networks have brought significant changes across domains like natural language processing, computer vision, and scientific computing. Despite […]

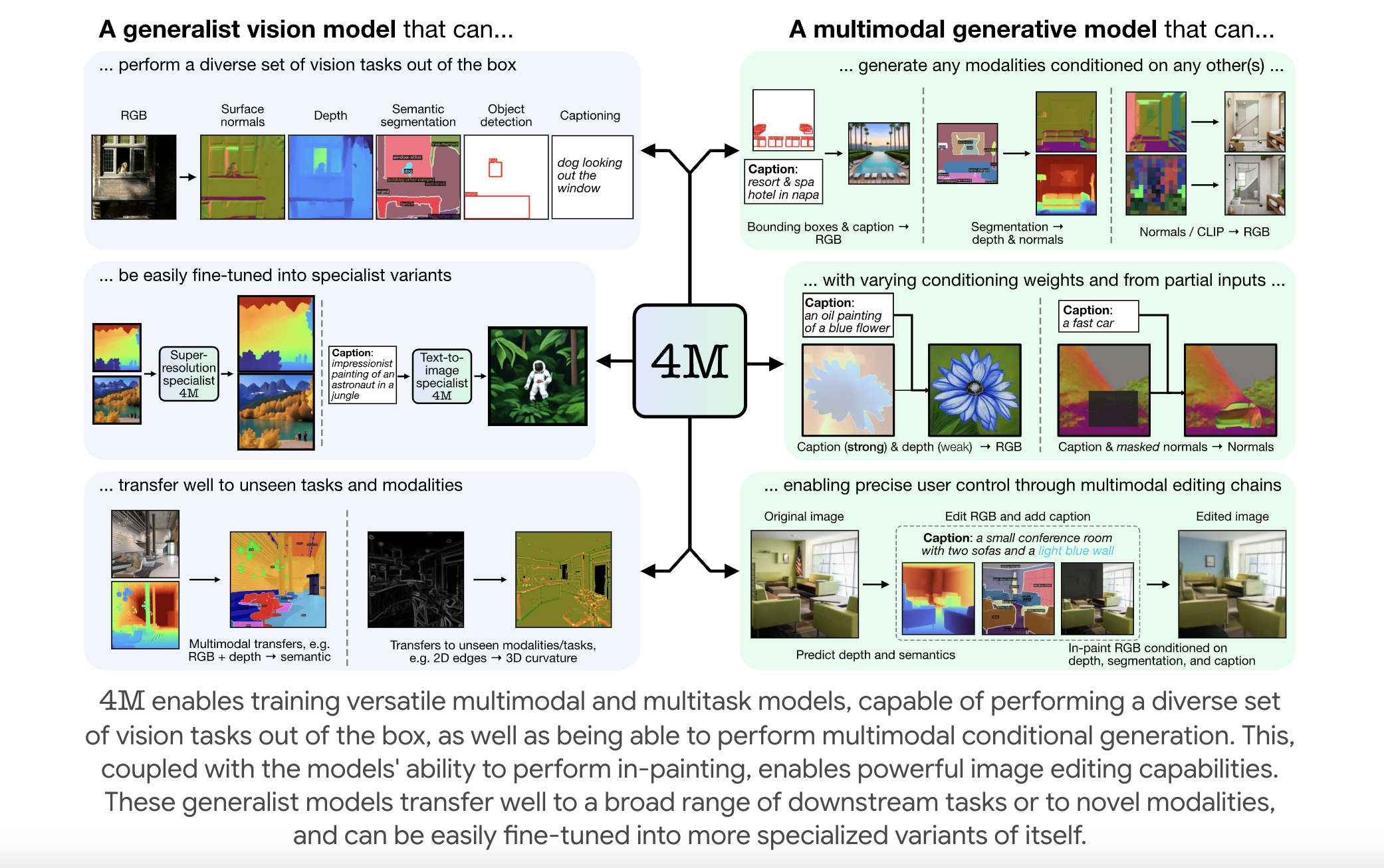

EPFL Researchers Release 4M: An Open-Source Training Framework to Advance Multimodal AI

Multimodal foundation models are becoming increasingly relevant in artificial intelligence, enabling systems to process and integrate multiple forms of data—such […]

Transformer-Based AI Models for Ovarian Lesion Diagnosis: Enhancing Accuracy and Reducing Expert Referral Dependence Across International Centers

Ovarian lesions are frequently detected, often by chance, and managing them is crucial to avoid delayed diagnoses or unnecessary interventions. […]