AI has witnessed rapid advancements in NLP in recent years, yet many existing models still struggle to balance intuitive responses […]

Category: Machine Learning

This AI Paper from Apple Introduces a Distillation Scaling Law: A Compute-Optimal Approach for Training Efficient Language Models

Language models have become increasingly expensive to train and deploy. This has led researchers to explore techniques such as model […]

DeepSeek AI Introduces CODEI/O: A Novel Approach that Transforms Code-based Reasoning Patterns into Natural Language Formats to Enhance LLMs’ Reasoning Capabilities

Large Language Models (LLMs) have advanced significantly in natural language processing, yet reasoning remains a persistent challenge. While tasks such […]

Google DeepMind Researchers Propose Matryoshka Quantization: A Technique to Enhance Deep Learning Efficiency by Optimizing Multi-Precision Models without Sacrificing Accuracy

Quantization is a crucial technique in deep learning for reducing computational costs and improving model efficiency. Large-scale language models demand […]

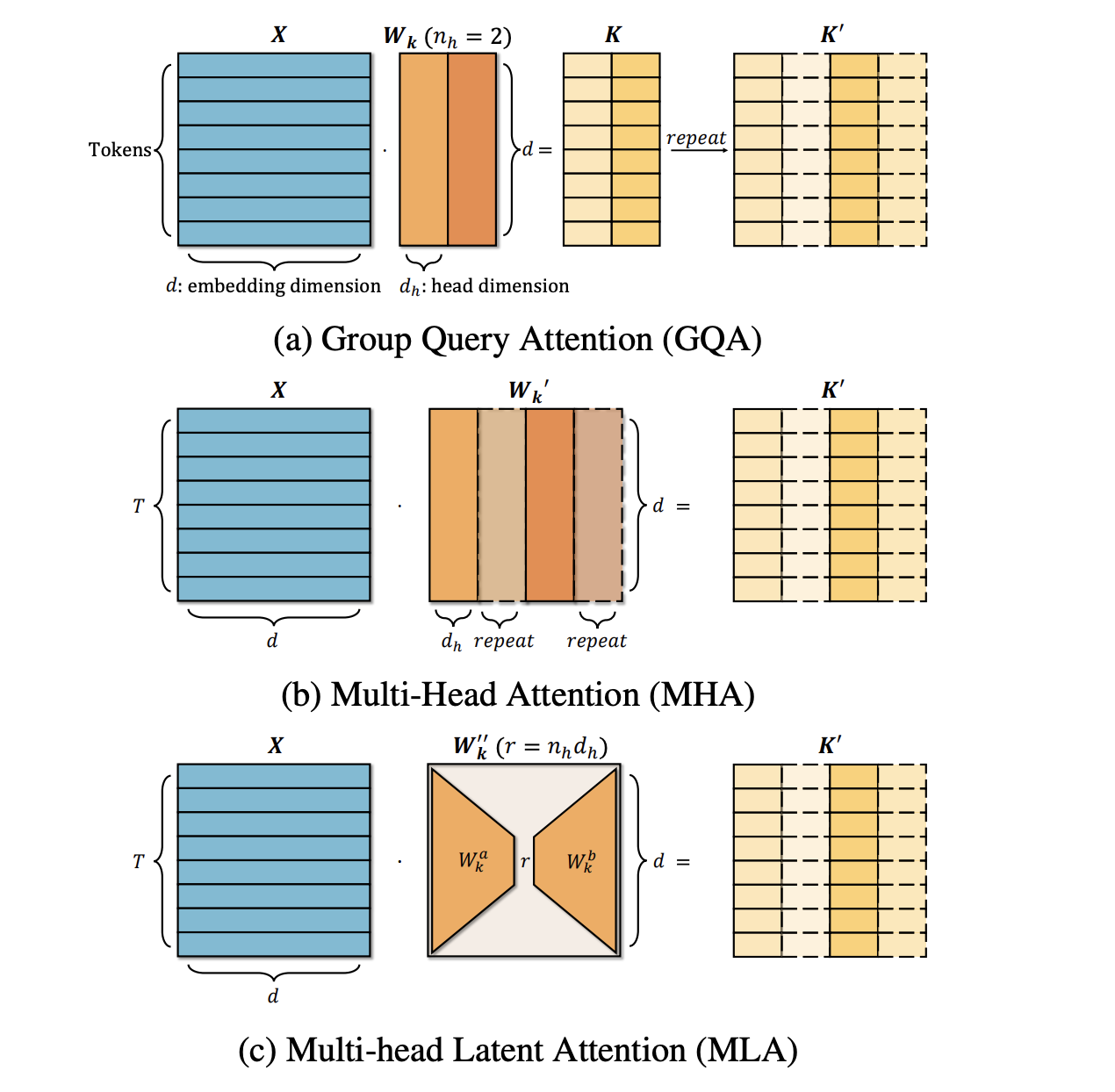

TransMLA: Transforming GQA-based Models Into MLA-based Models

Large Language Models (LLMs) have gained significant importance as productivity tools, with open-source models increasingly matching the performance of their […]

ChatGPT can now write erotica as OpenAI eases up on AI paternalism

“Following the initial release of the Model Spec (May 2024), many users and developers expressed support for enabling a ‘grown-up […]

Salesforce AI Research Introduces Reward-Guided Speculative Decoding (RSD): A Novel Framework that Improves the Efficiency of Inference in Large Language Models (LLMs) Up To 4.4× Fewer FLOPs

In recent years, the rapid scaling of large language models (LLMs) has led to extraordinary improvements in natural language understanding […]

Layer Parallelism: Enhancing LLM Inference Efficiency Through Parallel Execution of Transformer Layers

LLMs have demonstrated exceptional capabilities, but their substantial computational demands pose significant challenges for large-scale deployment. While previous studies indicate […]

ByteDance Introduces UltraMem: A Novel AI Architecture for High-Performance, Resource-Efficient Language Models

Large Language Models (LLMs) have revolutionized natural language processing (NLP) but face significant challenges in practical applications due to their […]

Google DeepMind Research Introduces WebLI-100B: Scaling Vision-Language Pretraining to 100 Billion Examples for Cultural Diversity and Multilinguality

Machines learn to connect images and text by training on large datasets, where more data helps models recognize patterns and […]