Artificial intelligence (AI) has made significant strides in recent years, yet challenges persist in achieving efficient, cost-effective, and high-performance models. […]

Category: Large Language Model

Google AI Released Gemini 2.5 Pro Experimental: An Advanced AI Model that Excels in Reasoning, Coding, and Multimodal Capabilities

In the evolving field of artificial intelligence, a significant challenge has been developing models that can effectively reason through complex […]

RWKV-7: Advancing Recurrent Neural Networks for Efficient Sequence Modeling

Autoregressive Transformers have become the leading approach for sequence modeling due to their strong in-context learning and parallelizable training enabled […]

Qwen Releases the Qwen2.5-VL-32B-Instruct: A 32B Parameter VLM that Surpasses Qwen2.5-VL-72B and Other Models like GPT-4o Mini

In the evolving field of artificial intelligence, vision-language models (VLMs) have become essential tools, enabling machines to interpret and generate […]

A Coding Implementation of Extracting Structured Data Using LangSmith, Pydantic, LangChain, and Claude 3.7 Sonnet

Unlock the power of structured data extraction with LangChain and Claude 3.7 Sonnet, transforming raw text into actionable insights. This […]

This AI Paper from NVIDIA Introduces Cosmos-Reason1: A Multimodal Model for Physical Common Sense and Embodied Reasoning

Artificial intelligence systems designed for physical settings require more than just perceptual abilities—they must also reason about objects, actions, and […]

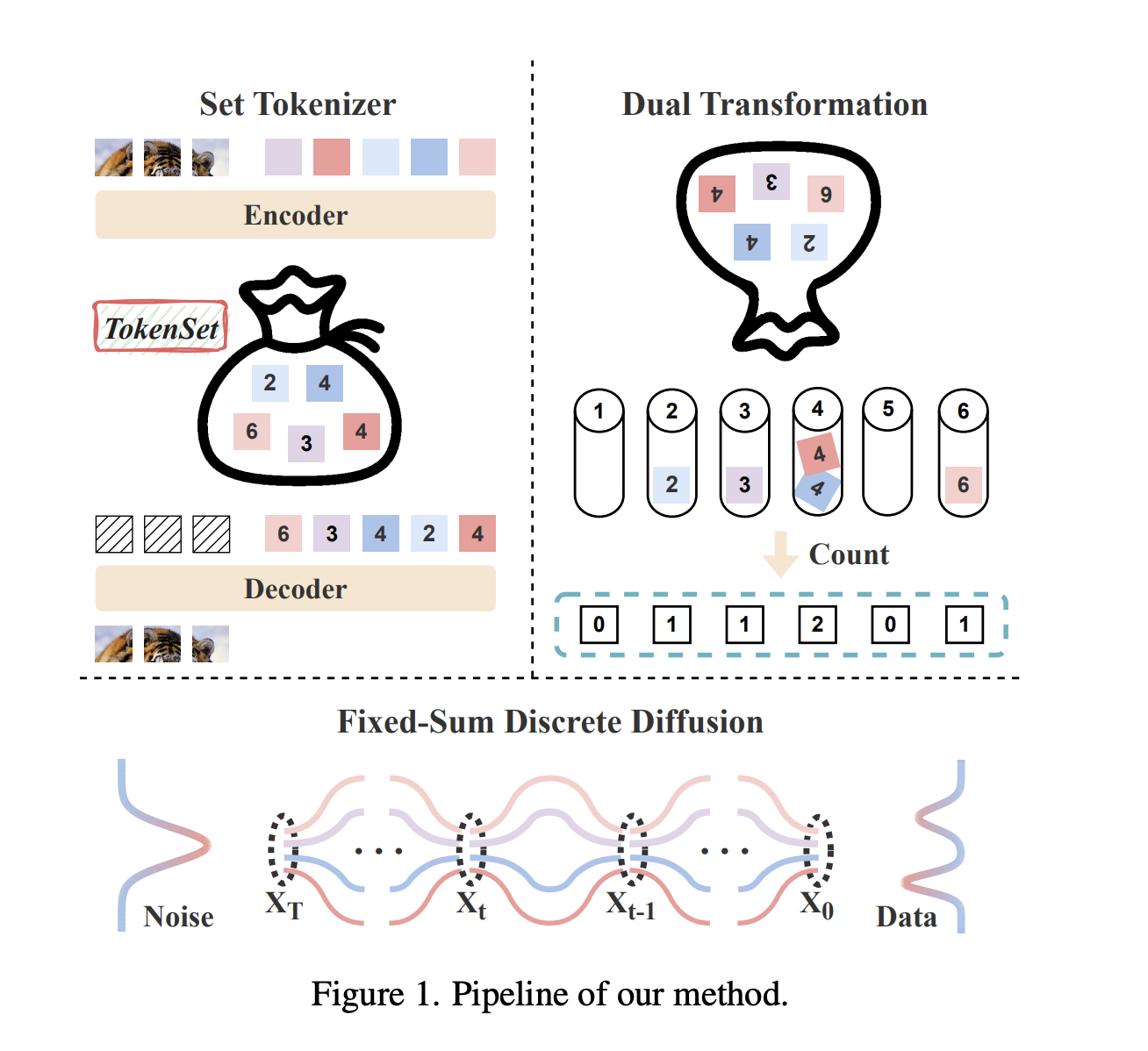

TokenSet: A Dynamic Set-Based Framework for Semantic-Aware Visual Representation

Visual generation frameworks typically follow a two-stage approach: first compressing visual signals into latent representations and then modeling the low-dimensional distributions. […]

SuperBPE: Advancing Language Models with Cross-Word Tokenization

Language models (LMs) face a fundamental challenge in how they perceive textual data through tokenization. Current subword tokenizers segment text […]

A Unified Acoustic-to-Speech-to-Language Embedding Space Captures the Neural Basis of Natural Language Processing in Everyday Conversations

Language processing in the brain presents a challenge due to its inherently complex, multidimensional, and context-dependent nature. Psycholinguists have attempted […]

Achieving Critical Reliability in Instruction-Following with LLMs: How to Achieve AI Customer Service That’s 100% Reliable

Ensuring reliable instruction-following in LLMs remains a critical challenge. This is particularly important in customer-facing applications, where mistakes can be […]