Understanding the Limits of Current Interpretability Tools in LLMs AI models, such as DeepSeek and GPT variants, rely on billions […]

Category: Large Language Model

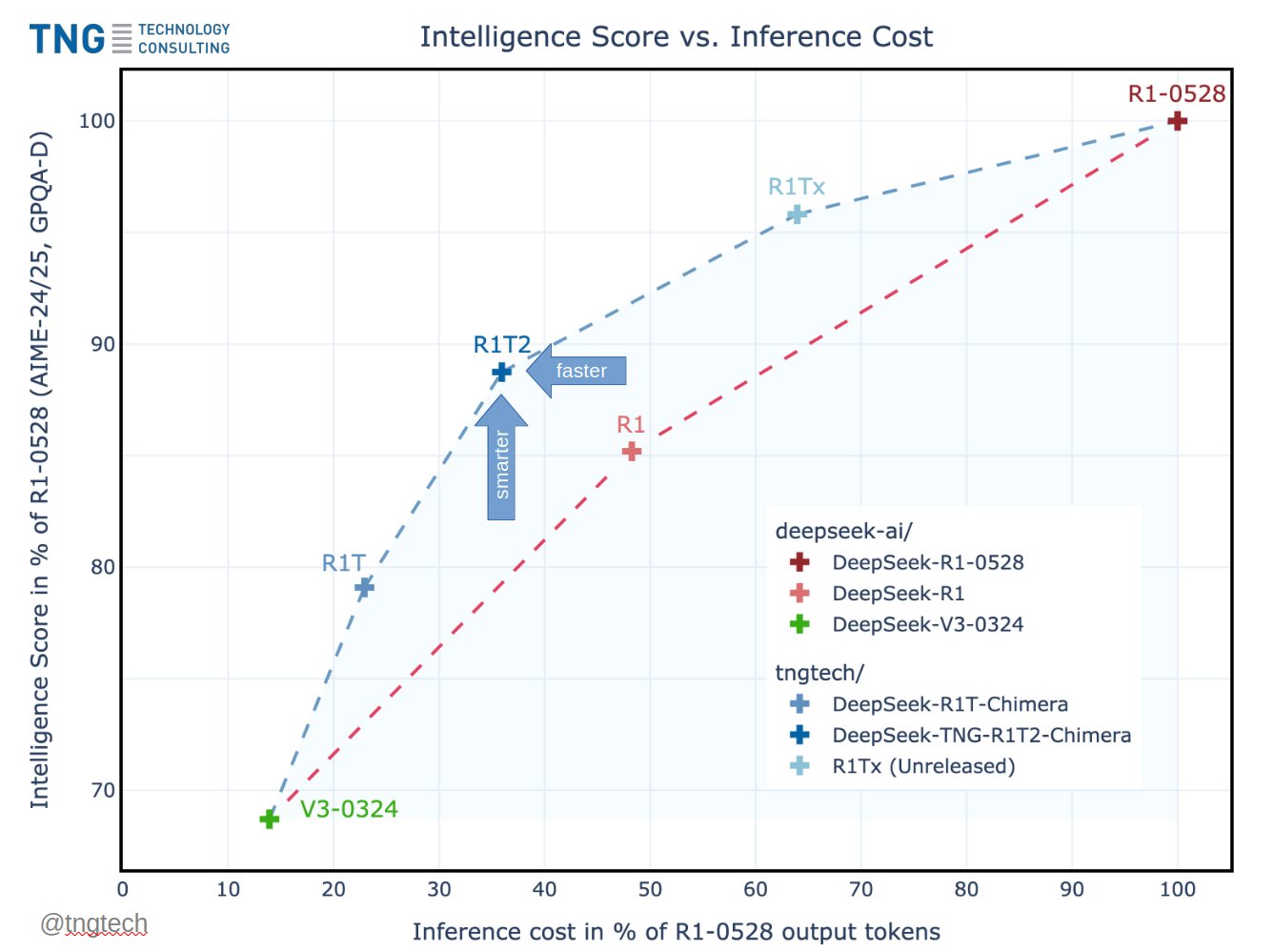

DeepSeek R1T2 Chimera: 200% Faster Than R1-0528 With Improved Reasoning and Compact Output

TNG Technology Consulting has unveiled DeepSeek-TNG R1T2 Chimera, a new Assembly-of-Experts (AoE) model that blends intelligence and speed through an […]

Shanghai Jiao Tong Researchers Propose OctoThinker for Reinforcement Learning-Scalable LLM Development

Introduction: Reinforcement Learning Progress through Chain-of-Thought Prompting LLMs have shown excellent progress in complex reasoning tasks through CoT prompting combined […]

ReasonFlux-PRM: A Trajectory-Aware Reward Model Enhancing Chain-of-Thought Reasoning in LLMs

Understanding the Role of Chain-of-Thought in LLMs Large language models are increasingly being used to solve complex tasks such as […]

Baidu Researchers Propose AI Search Paradigm: A Multi-Agent Framework for Smarter Information Retrieval

The Need for Cognitive and Adaptive Search Engines Modern search systems are evolving rapidly as the demand for context-aware, adaptive […]

Baidu Open Sources ERNIE 4.5: LLM Series Scaling from 0.3B to 424B Parameters

Baidu has officially open-sourced its latest ERNIE 4.5 series, a powerful family of foundation models designed for enhanced language understanding, […]

OMEGA: A Structured Math Benchmark to Probe the Reasoning Limits of LLMs

Introduction to Generalization in Mathematical Reasoning Large-scale language models with long CoT reasoning, such as DeepSeek-R1, have shown good results […]

LongWriter-Zero: A Reinforcement Learning Framework for Ultra-Long Text Generation Without Synthetic Data

Introduction to Ultra-Long Text Generation Challenges Generating ultra-long texts that span thousands of words is becoming increasingly important for real-world […]

DeepRare: The First AI-Powered Agentic Diagnostic System Transforming Clinical Decision-Making in Rare Disease Management

Rare diseases impact some 400 million people worldwide, accounting for over 7,000 individual disorders, and most of these, about 80%, […]

Tencent Open Sources Hunyuan-A13B: A 13B Active Parameter MoE Model with Dual-Mode Reasoning and 256K Context

Tencent’s Hunyuan team has introduced Hunyuan-A13B, a new open-source large language model built on a sparse Mixture-of-Experts (MoE) architecture. While […]