Key Takeaways: Researchers from Google DeepMind, the University of Michigan, and Brown University have developed “Motion Prompting,” a new method […]

Category: Applications

OpenThoughts: A Scalable Supervised Fine-Tuning (SFT) Data Curation Pipeline for Reasoning Models

The Growing Complexity of Reasoning Data Curation: Recent reasoning models, such as DeepSeek-R1 and o3, have shown outstanding performance in […]

Apple Researchers Reveal Structural Failures in Large Reasoning Models Using Puzzle-Based Evaluation

Artificial intelligence has undergone a significant transition from basic language models to advanced models that focus on reasoning tasks. These […]

This AI Paper Introduces VLM-R³: A Multimodal Framework for Region Recognition, Reasoning, and Refinement in Visual-Linguistic Tasks

Multimodal reasoning ability helps machines perform tasks such as solving math problems embedded in diagrams, reading signs from photographs, or […]

Meta AI Releases V-JEPA 2: Open-Source Self-Supervised World Models for Understanding, Prediction, and Planning

Meta AI has introduced V-JEPA 2, a scalable open-source world model designed to learn from video at internet scale and […]

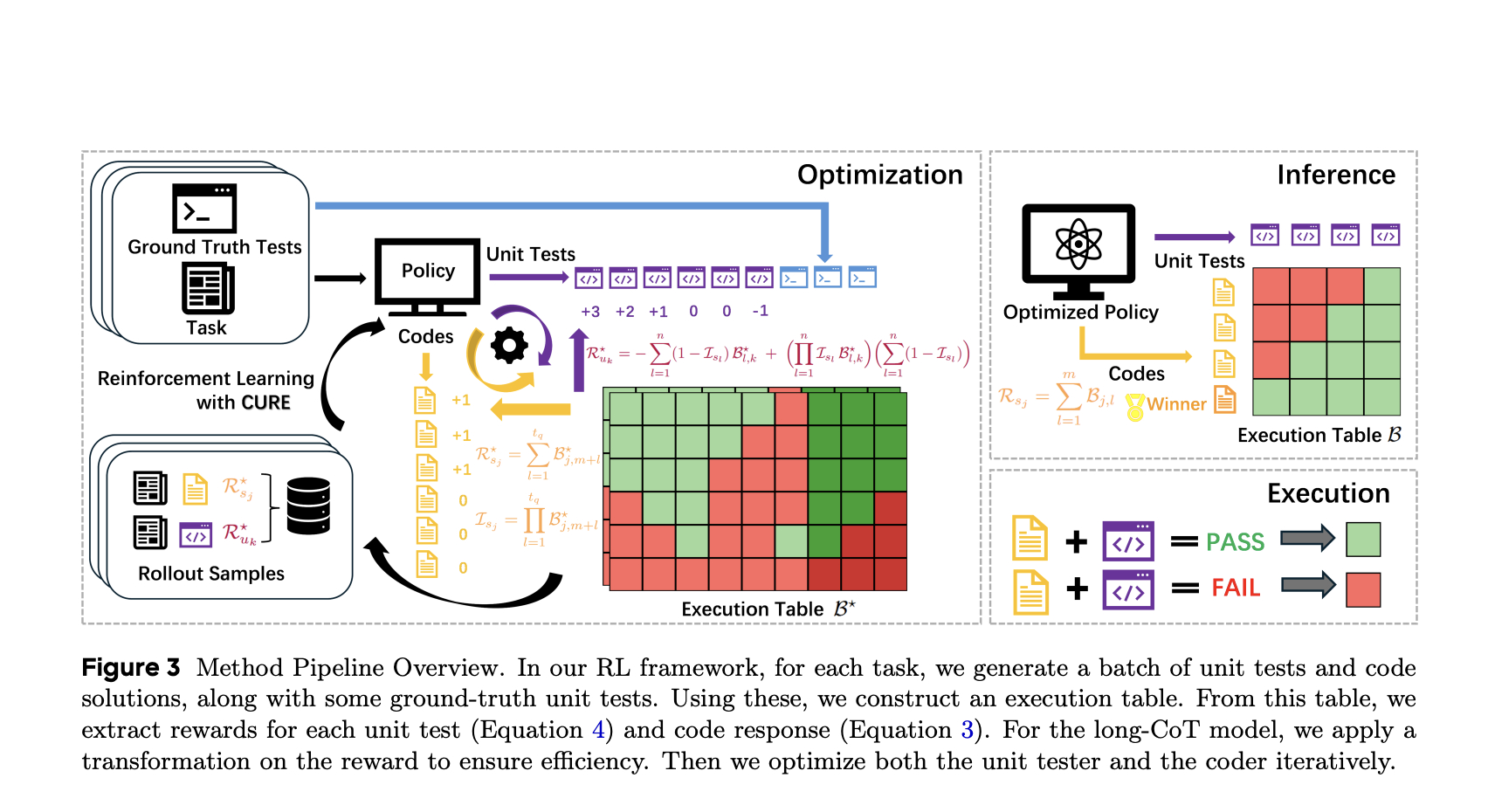

CURE: A Reinforcement Learning Framework for Co-Evolving Code and Unit Test Generation in LLMs

Introduction: Large Language Models (LLMs) have shown substantial improvements in reasoning and precision through reinforcement learning (RL) and test-time scaling […]

How Do LLMs Really Reason? A Framework to Separate Logic from Knowledge

Unpacking Reasoning in Modern LLMs: Why Final Answers Aren’t Enough. Recent advancements in reasoning-focused LLMs like OpenAI’s o1/3 and DeepSeek-R1 […]

Mistral AI Releases Magistral Series: Advanced Chain-of-Thought LLMs for Enterprise and Open-Source Applications

Mistral AI has officially introduced Magistral, its latest series of reasoning-optimized large language models (LLMs). This marks a significant step […]

NVIDIA Researchers Introduce Dynamic Memory Sparsification (DMS) for 8× KV Cache Compression in Transformer LLMs

As the demand for reasoning-heavy tasks grows, large language models (LLMs) are increasingly expected to generate longer sequences or parallel […]

How Much Do Language Models Really Memorize? Meta’s New Framework Defines Model Capacity at the Bit Level

Introduction: The Challenge of Memorization in Language Models. Modern language models face increasing scrutiny regarding their memorization behavior. With models […]