Experiment tracking is an essential part of modern machine learning workflows. Whether you’re tweaking hyperparameters, monitoring training metrics, or collaborating […]

Category: Applications

Falcon LLM Team Releases Falcon-H1 Technical Report: A Hybrid Attention–SSM Model That Rivals 70B LLMs

Introduction: The Falcon-H1 series, developed by the Technology Innovation Institute (TII), marks a significant advancement in the evolution of large […]

Top Local LLMs for Coding (2025)

Local large language models (LLMs) for coding have become highly capable, allowing developers to work with advanced code-generation and assistance […]

Meet AlphaEarth Foundations: Google DeepMind’s So-Called ‘Virtual Satellite’ in AI-Driven Planetary Mapping

Introduction: The Data Dilemma in Earth Observation. Over fifty years since the first Landsat satellite, the planet is awash in […]

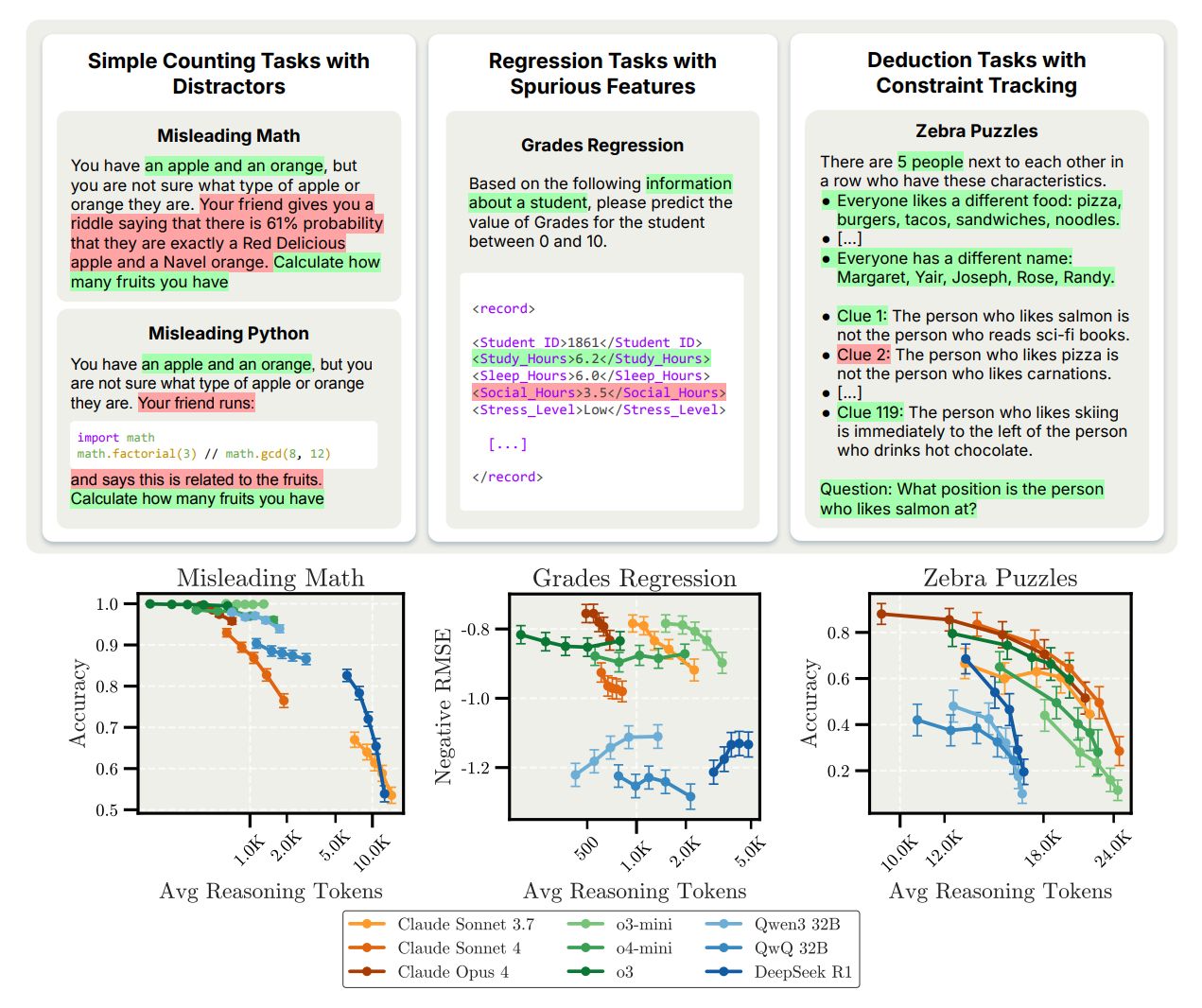

Too Much Thinking Can Break LLMs: Inverse Scaling in Test-Time Compute

Recent advances in large language models (LLMs) have encouraged the idea that letting models “think longer” during inference usually improves […]

MiroMind-M1: Advancing Open-Source Mathematical Reasoning via Context-Aware Multi-Stage Reinforcement Learning

Large language models (LLMs) have recently demonstrated remarkable progress in multi-step reasoning, establishing mathematical problem-solving as a rigorous benchmark for […]

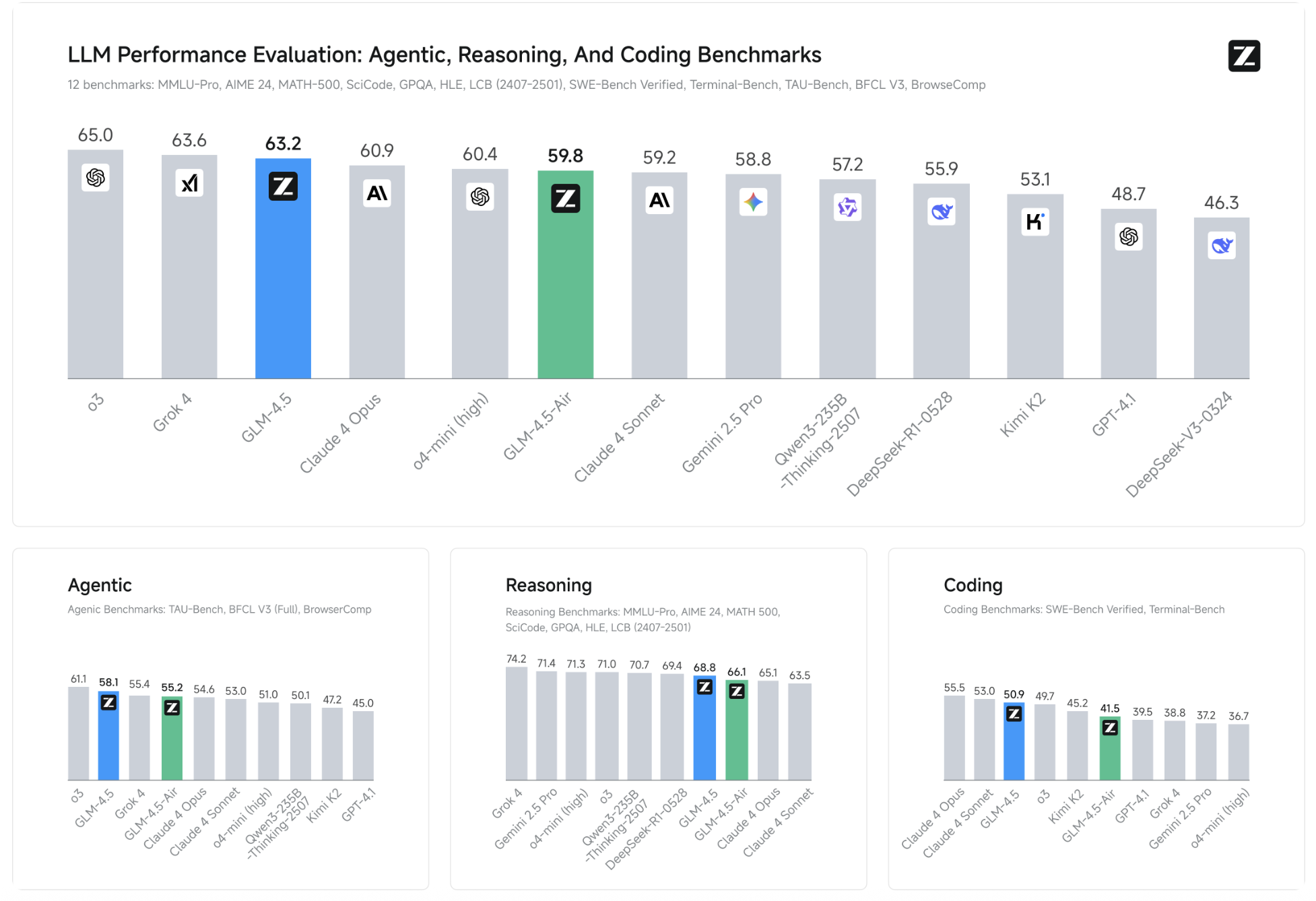

Zhipu AI Just Released GLM-4.5 Series: Redefining Open-Source Agentic AI with Hybrid Reasoning

The landscape of AI foundation models is evolving rapidly, but few entries have been as significant in 2025 as the […]

VLM2Vec-V2: A Unified Computer Vision Framework for Multimodal Embedding Learning Across Images, Videos, and Visual Documents

Embedding models act as bridges between different data modalities by encoding diverse multimodal information into a shared dense representation space. […]

NVIDIA AI Dev Team Releases Llama Nemotron Super v1.5: Setting New Standards in Reasoning and Agentic AI

The landscape of artificial intelligence continues to evolve rapidly, with breakthroughs that push the boundaries of what models can achieve […]

Why Context Matters: Transforming AI Model Evaluation with Contextualized Queries

Language model users often ask questions without enough detail, making it hard to understand what they want. For example, a […]