LLMs have made significant strides in language-related tasks such as conversational AI, reasoning, and code generation. However, human communication extends […]

NVIDIA Open-Sources Open Code Reasoning Models (32B, 14B, 7B)

NVIDIA continues to push the boundaries of open AI development by open-sourcing its Open Code Reasoning (OCR) model suite — […]

Hugging Face Releases nanoVLM: A Pure PyTorch Library to Train a Vision-Language Model from Scratch in 750 Lines of Code

In a notable step toward democratizing vision-language model development, Hugging Face has released nanoVLM, a compact and educational PyTorch-based framework […]

Google Launches Gemini 2.5 Pro I/O: Outperforms GPT-4 Turbo in Coding, Supports Native Video Understanding and Leads WebDev Arena

Just ahead of its annual I/O developer conference, Google has released an early preview of Gemini 2.5 Pro (I/O Edition)—a […]

Researchers from Fudan University Introduce Lorsa: A Sparse Attention Mechanism That Recovers Atomic Attention Units Hidden in Transformer Superposition

Large Language Models (LLMs) have gained significant attention in recent years, yet understanding their internal mechanisms remains challenging. When examining […]

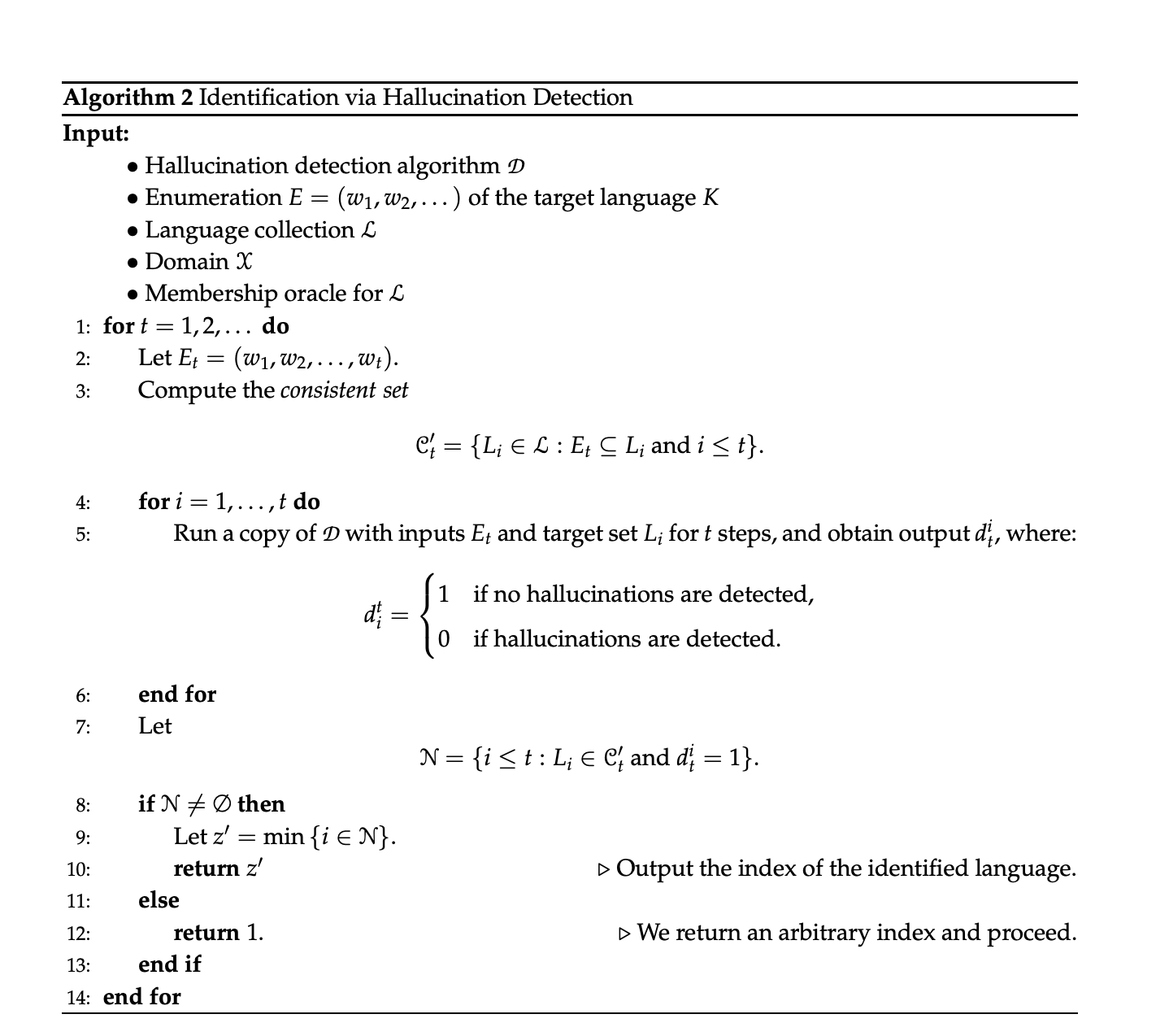

Is Automated Hallucination Detection in LLMs Feasible? A Theoretical and Empirical Investigation

Recent advancements in LLMs have significantly improved natural language understanding, reasoning, and generation. These models now excel at diverse tasks […]

LLMs Can Now Talk in Real-Time with Minimal Latency: Chinese Researchers Release LLaMA-Omni2, a Scalable Modular Speech Language Model

Researchers at the Institute of Computing Technology, Chinese Academy of Sciences, have introduced LLaMA-Omni2, a family of speech-capable large language […]

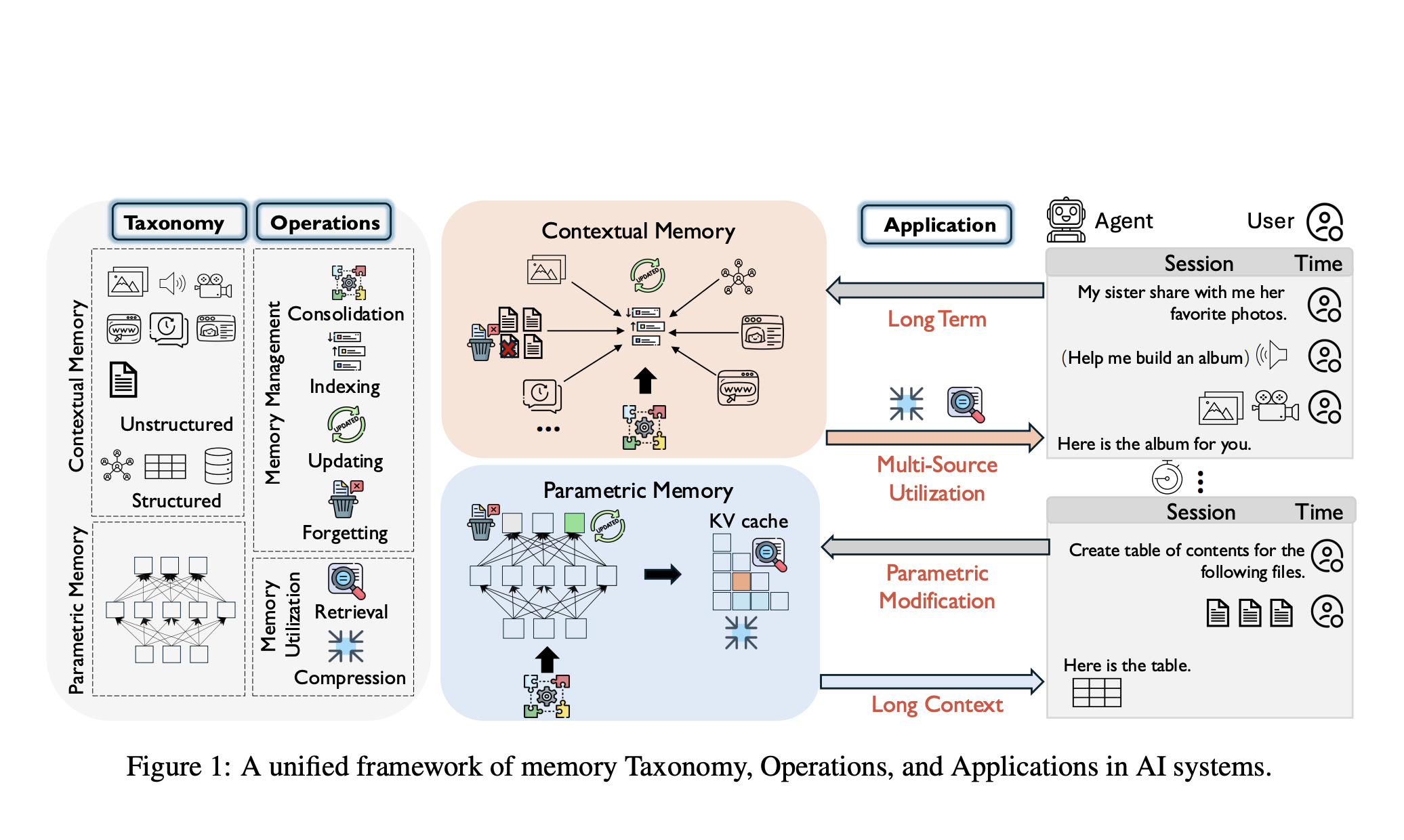

How AI Agents Store, Forget, and Retrieve? A Fresh Look at Memory Operations for the Next-Gen LLMs

Memory plays a crucial role in LLM-based AI systems, supporting sustained, coherent interactions over time. While earlier surveys have explored […]

RWKV-X Combines Sparse Attention and Recurrent Memory to Enable Efficient 1M-Token Decoding with Linear Complexity

LLMs built on Transformer architectures face significant scaling challenges due to their quadratic complexity in sequence length when processing long-context […]

How the Model Context Protocol (MCP) Standardizes, Simplifies, and Future-Proofs AI Agent Tool Calling Across Models for Scalable, Secure, Interoperable Workflows

Traditional Approaches to AI–Tool Integration

Before MCP, LLMs relied on ad-hoc, model-specific integrations to access external tools. Approaches like ReAct interleave chain-of-thought reasoning with explicit […]