Large language models (LLMs) offer several parameters that let you fine-tune their behavior and control how they generate responses. If a model isn’t producing the desired output, the issue often lies in how these parameters are configured. In this tutorial, we’ll explore some of the most commonly used ones — max_completion_tokens, temperature, top_p, presence_penalty, and frequency_penalty — and understand how each influences the model’s output.

Installing the dependencies

pip install openai pandas matplotlib

Loading OpenAI API Key

import os
from getpass import getpass
os.environ['OPENAI_API_KEY'] = getpass('Enter OpenAI API Key: ')

Initializing the Model

from openai import OpenAI
model = "gpt-4.1"
client = OpenAI()  # reads OPENAI_API_KEY from the environment

Max Tokens

Max Tokens (max_completion_tokens in the Chat Completions API) is an upper bound on the number of tokens the model can generate for a single response. The model does not plan its answer around this limit: once the limit is reached, generation simply stops, the text is cut off mid-answer, and the choice's finish_reason is reported as "length" instead of "stop".

A smaller value (like 16) cuts the answer off after a few words, while a higher value (like 80) leaves room for a more detailed and complete response. Increasing this parameter gives the model more room to elaborate, explain, or format its output more naturally.
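One way to tell whether a response was cut off by the limit is to inspect the finish_reason field on each choice, which the Chat Completions API sets to "length" when the token limit ends generation. The sketch below is only illustrative: explain_finish is a hypothetical helper name, and the SimpleNamespace objects are stand-ins shaped like response.choices[0] so the example runs without an API call.

```python
from types import SimpleNamespace


def explain_finish(choice):
    """Describe why a Chat Completions choice stopped generating."""
    if choice.finish_reason == "length":
        return "truncated: the token limit was reached before the answer finished"
    if choice.finish_reason == "stop":
        return "complete: the model ended its answer or hit a stop sequence"
    return f"other: {choice.finish_reason}"


# Stand-in objects shaped like response.choices[0] (hypothetical, no API call)
cut_off = SimpleNamespace(finish_reason="length")
finished = SimpleNamespace(finish_reason="stop")

print(explain_finish(cut_off))
print(explain_finish(finished))
```

With a real response, the same helper could be called as explain_finish(response.choices[0]) right after each request.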

prompt = "What is the most popular French cheese?"
for tokens in [16, 30, 80]:
    print(f"\n--- max_completion_tokens = {tokens} ---")
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "developer", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt}
        ],
        max_completion_tokens=tokens
    )
    print(response.choices[0].message.content)

Temperature

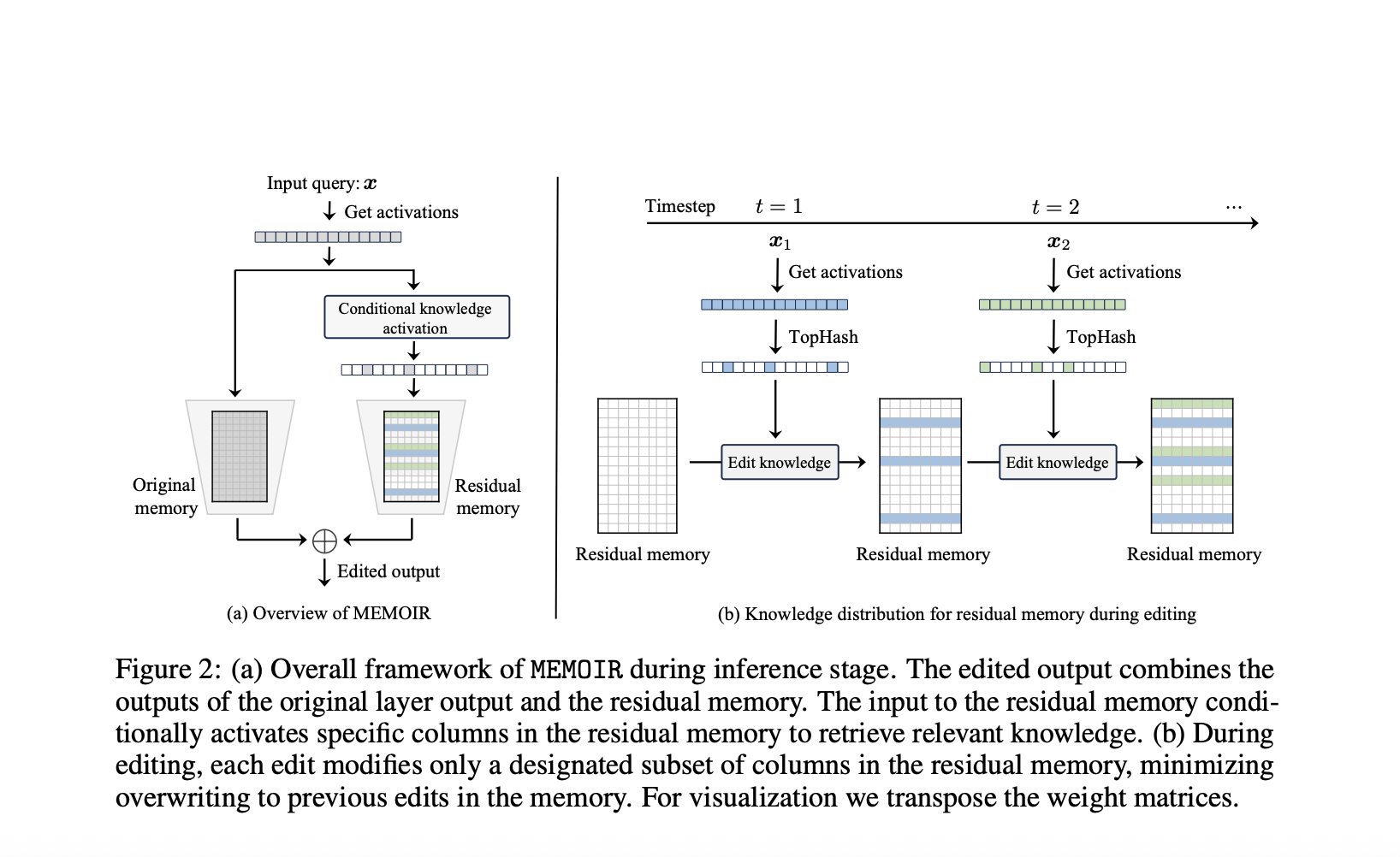

In Large Language Models (LLMs), the temperature parameter controls the diversity and randomness of generated outputs. Lower temperature values make the model more deterministic and focused on the most probable responses, which suits tasks that require accuracy and consistency. Higher values introduce creativity and variety by allowing the model to explore less likely options. Technically, temperature divides the model's logits before the softmax function turns them into token probabilities: increasing it flattens the distribution (more diverse outputs), while decreasing it sharpens the distribution (more predictable outputs).
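To make the flattening and sharpening effect concrete, here is a minimal sketch of a temperature-scaled softmax in plain Python. The function name softmax_with_temperature and the three logit values are assumptions chosen for illustration; they are not taken from any real model.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate tokens (illustrative values only)
logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the lowest temperature nearly all of the probability lands on the first token, while at higher temperatures the other two tokens receive a larger share.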

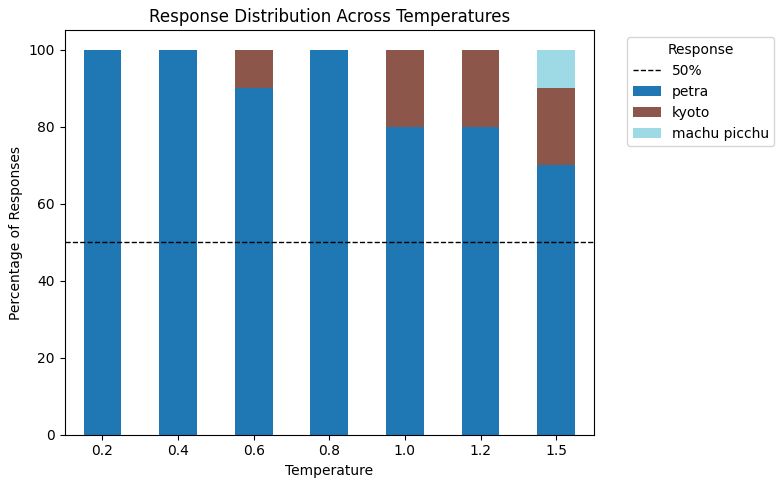

In this code, we ask the LLM for 10 responses (n_choices = 10) to the same question, “What is one intriguing place worth visiting?”, across a range of temperature values. By doing this, we can observe how the diversity of answers changes with temperature. Lower temperatures will likely produce similar or repeated responses, while higher temperatures will show a broader and more varied distribution of places.

prompt = "What is one intriguing place worth visiting? Give a single-word answer and think globally."
temperatures = [0.2, 0.4, 0.6, 0.8, 1.0, 1.2, 1.5]
n_choices = 10
results = {}
for temp in temperatures:
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt}
        ],
        temperature=temp,
        n=n_choices
    )
    # Collect all n responses in a list
    results[temp] = [response.choices[i].message.content.strip() for i in range(n_choices)]
# Display results
for temp, responses in results.items():
    print(f"\n--- temperature = {temp} ---")
    print(responses)

As we can see, once the temperature reaches 0.6, the responses become more diverse, moving beyond the repeated single answer “Petra.” At a higher temperature of 1.5, the distribution shifts further, and responses such as Kyoto and Machu Picchu appear as well.
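Reading raw lists makes it hard to compare settings, so one option is to tally how often each answer appears per temperature. The sketch below assumes a dictionary shaped like the results dict built in the code above; tally_responses is a hypothetical helper name, and sample_results is made-up placeholder data, not real model output.

```python
from collections import Counter


def tally_responses(results):
    """Map each temperature to a Counter of how often each answer appeared."""
    return {temp: Counter(answers) for temp, answers in results.items()}


# Hypothetical stand-in for the `results` dict (not real model output)
sample_results = {
    0.2: ["Petra"] * 10,
    1.5: ["Petra"] * 6 + ["Kyoto"] * 3 + ["Machu Picchu"],
}

for temp, counts in tally_responses(sample_results).items():
    print(f"temperature={temp}: {len(counts)} distinct -> {counts.most_common()}")
```

Passing the real results dict instead of sample_results gives the per-temperature counts that a bar chart of the distribution would be built from.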

Top P

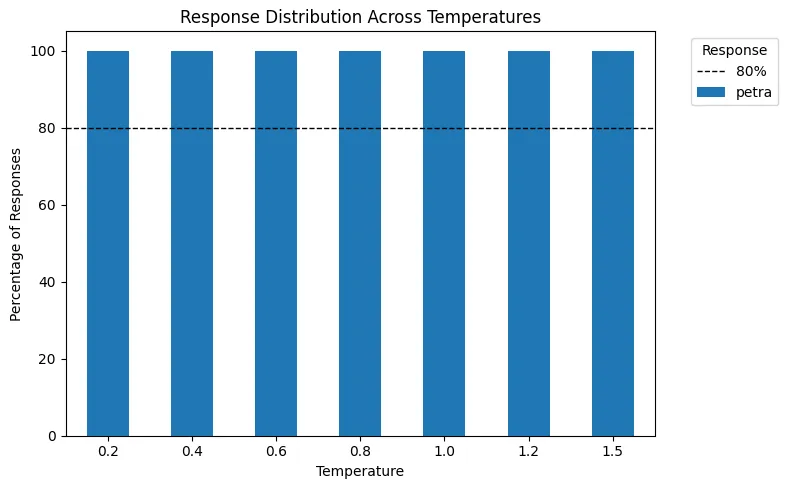

Top P (also known as nucleus sampling) restricts sampling to the smallest set of most likely tokens whose cumulative probability reaches a threshold p. By cutting off the low-probability tail, it keeps the model focused on plausible tokens, often improving coherence and output quality.

In the following visualization, we first set a temperature value and then apply Top P = 0.5 (50%), meaning only the top 50% of the probability mass is kept. Note that when temperature = 0, the output is deterministic, so Top P has no effect.

The generation process works as follows:

- Apply the temperature to adjust the token probabilities.

- Use Top P to retain only the most probable tokens that together make up 50% of the total probability mass.

- Renormalize the remaining probabilities before sampling.
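The three steps above can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions, not the provider's actual implementation: top_p_filter is a hypothetical function name, and the token list and logits are invented values.

```python
import math


def top_p_filter(logits, temperature=1.0, top_p=0.5):
    """Return renormalized probabilities for the tokens kept by nucleus sampling."""
    # Step 1: apply the temperature and convert logits to probabilities
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Step 2: keep the most probable tokens until their mass reaches top_p
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break

    # Step 3: renormalize the surviving probabilities so they sum to 1
    return {i: probs[i] / mass for i in kept}


# Hypothetical vocabulary and logits (illustrative values only)
tokens = ["Petra", "Kyoto", "Iceland", "Machu Picchu"]
logits = [3.0, 1.5, 1.0, 0.5]
for t in (0.2, 1.0, 1.5):
    kept = top_p_filter(logits, temperature=t, top_p=0.5)
    print(t, {tokens[i]: round(p, 3) for i, p in kept.items()})
```

With these made-up logits the top token alone already holds more than half of the probability mass at every temperature tried, so it is the only token left to sample from.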

We’ll see how the sampled responses change across different temperature values when Top P = 0.5 is applied, using the question:

“What is one intriguing place worth visiting?”

prompt = "What is one intriguing place worth visiting? Give a single-word answer and think globally."
temperatures = [0.2, 0.4, 0.6, 0.8, 1.0, 1.2, 1.5]
n_choices = 10
results_ = {}
for temp in temperatures:
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt}
        ],
        temperature=temp,
        n=n_choices,
        top_p=0.5
    )
    # Collect all n responses in a list
    results_[temp] = [response.choices[i].message.content.strip() for i in range(n_choices)]
# Display results
for temp, responses in results_.items():
    print(f"\n--- temperature = {temp} ---")
    print(responses)

Since Petra consistently accounted for more than 50% of the total response probability, applying Top P = 0.5 filters out all other options. As a result, the model only selects “Petra” as the final output in every case.

Frequency Penalty

Frequency Penalty controls how much the model avoids repeating the same words or phrases in its output.

- Range: -2 to 2

- Default: 0

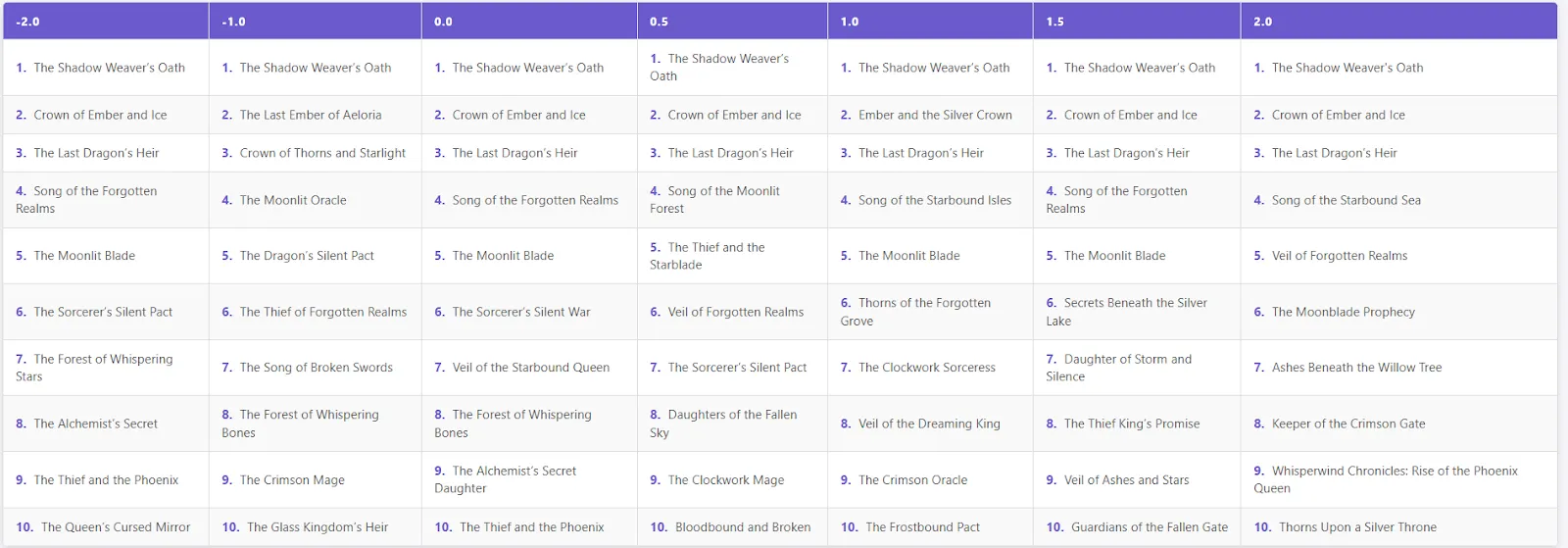

When the frequency penalty is higher, the model is penalized for reusing tokens in proportion to how many times each one has already appeared. This encourages it to choose new and different words, making the text more varied and less repetitive. Negative values do the opposite and make repetition more likely.

In simple terms: a higher frequency penalty means less repetition and more varied wording.

We’ll test this using the prompt:

“List 10 possible titles for a fantasy book. Give the titles only and each title on a new line.”

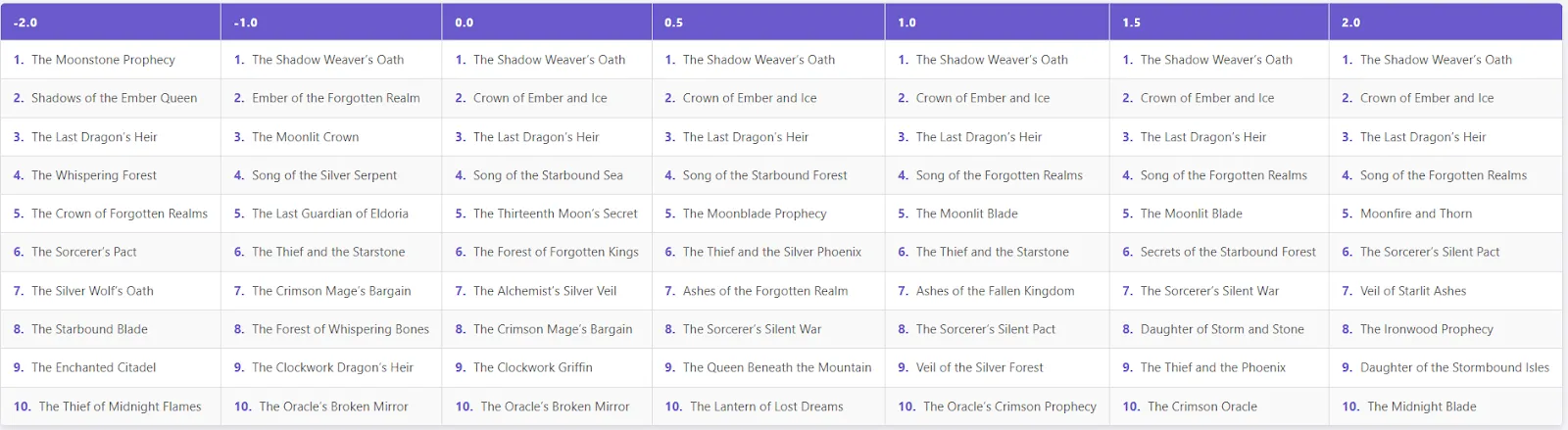

prompt = "List 10 possible titles for a fantasy book. Give the titles only and each title on a new line."
frequency_penalties = [-2.0, -1.0, 0.0, 0.5, 1.0, 1.5, 2.0]
results = {}
for fp in frequency_penalties:
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt}
        ],
        frequency_penalty=fp,
        temperature=0.2
    )
    text = response.choices[0].message.content
    # One title per line; drop empty lines and leading list dashes
    items = [line.strip("- ").strip() for line in text.split("\n") if line.strip()]
    results[fp] = items
# Display results
for fp, items in results.items():
    print(f"\n--- frequency_penalty = {fp} ---")
    print(items)

- Low frequency penalties (-2 to 0): Titles tend to repeat, with familiar patterns like “The Shadow Weaver’s Oath”, “Crown of Ember and Ice”, and “The Last Dragon’s Heir” appearing frequently.

- Moderate penalties (0.5 to 1.5): Some repetition remains, but the model starts generating more varied and creative titles.

- High penalty (2.0): The first three titles are still the same, but after that, the model produces diverse, unique, and imaginative book names (e.g., “Whisperwind Chronicles: Rise of the Phoenix Queen”, “Ashes Beneath the Willow Tree”).

Presence Penalty

Presence Penalty controls how much the model avoids repeating words or phrases that have already appeared in the text.

- Range: -2 to 2

- Default: 0

A higher presence penalty encourages the model to use a wider variety of words, making the output more diverse and creative.

Unlike the frequency penalty, which accumulates with each repetition, the presence penalty is applied once to any word that has already appeared, reducing the chance it will be repeated in the output. This helps the model produce text with more variety and originality.
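The difference between the two penalties is easiest to see on the logits themselves. The sketch below assumes both penalties are subtracted from a token's logit, with the frequency penalty multiplied by how often the token has appeared and the presence penalty applied once; to our understanding this mirrors how OpenAI's documentation describes the adjustment, but treat it as an illustration rather than the exact production logic. The helper name apply_penalties and all values are hypothetical.

```python
from collections import Counter


def apply_penalties(logits, generated, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the logit of every token that already appears in `generated`."""
    counts = Counter(generated)
    adjusted = dict(logits)
    for token, count in counts.items():
        if token in adjusted:
            adjusted[token] -= count * frequency_penalty  # grows with each repeat
            adjusted[token] -= presence_penalty  # flat, applied once
    return adjusted


# Hypothetical logits and already-generated tokens (illustrative values only)
logits = {"dragon": 2.0, "shadow": 2.0, "ember": 2.0}
generated = ["dragon", "dragon", "dragon", "shadow"]

print(apply_penalties(logits, generated, frequency_penalty=0.5))
print(apply_penalties(logits, generated, presence_penalty=0.5))
```

Under the frequency penalty, the token used three times is pushed down three times as far as the token used once; under the presence penalty, both are pushed down by the same amount, and the unused token is untouched in either case.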

prompt = "List 10 possible titles for a fantasy book. Give the titles only and each title on a new line."
presence_penalties = [-2.0, -1.0, 0.0, 0.5, 1.0, 1.5, 2.0]
results = {}
# Iterate over presence_penalties (not the earlier frequency_penalties list)
for pp in presence_penalties:
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt}
        ],
        presence_penalty=pp,
        temperature=0.2
    )
    text = response.choices[0].message.content
    # One title per line; drop empty lines and leading list dashes
    items = [line.strip("- ").strip() for line in text.split("\n") if line.strip()]
    results[pp] = items
# Display results
for pp, items in results.items():
    print(f"\n--- presence_penalty = {pp} ---")
    print(items)

- Low to Moderate Penalty (-2.0 to 0.5): Titles are somewhat varied, with some repetition of common fantasy patterns like “The Shadow Weaver’s Oath”, “The Last Dragon’s Heir”, “Crown of Ember and Ice”.

- Medium Penalty (1.0 to 1.5): The first few popular titles remain, while later titles show more creativity and unique combinations. Examples: “Ashes of the Fallen Kingdom”, “Secrets of the Starbound Forest”, “Daughter of Storm and Stone”.

- Maximum Penalty (2.0): Top three titles stay the same, but the rest become highly diverse and imaginative. Examples: “Moonfire and Thorn”, “Veil of Starlit Ashes”, “The Midnight Blade”.


Arham Islam

I am a Civil Engineering Graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various areas.