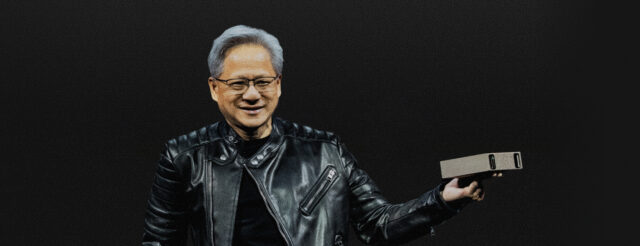

When Nvidia CEO Jensen Huang held up a palm-sized AI supercomputer at GTC 2025 and likened it to the original DGX-1 “with Pym particles”, it was more than a show of engineering flair.

The DGX Spark, as it’s now officially called, marks a turning point in how we think about AI infrastructure. For the first time, Nvidia’s Grace Blackwell superchip architecture has been distilled into a 1.2kg box that fits on a desktop, priced accessibly for research teams and small-scale deployments.

Built around Nvidia’s new GB10 system-on-a-chip, which pairs an Arm-based Grace CPU with a Blackwell GPU, the DGX Spark offers researchers an accessible gateway into AI supercomputing. Originally developed under the codename “Project DIGITS”, it is billed as the world’s smallest AI supercomputer.

A shift in form factor

The release of DGX Spark is a notable departure from the trajectory of recent Nvidia systems. Last year, Nvidia introduced the GB200 NVL72, a rack-scale system packing 36 Grace CPUs and 72 Blackwell GPUs and weighing more than a metric ton. The DGX Spark, by contrast, can sit comfortably on a desk without threatening the structural integrity of your workspace.

Its appeal goes beyond portability. With a price tag of $3,999 or less, the DGX Spark offers a low-barrier entry point for academic labs, research institutions, and edge computing environments: settings that may lack the budget or justification for full-scale supercomputing hardware. That price, paired with its compact form factor, makes it an attractive option for early-stage testing, model development, and data science experimentation.

Why researchers need superchips

AI and HPC researchers are under constant pressure to run large-scale simulations and develop bespoke machine learning models, tasks that demand substantial compute power, memory bandwidth, and interconnect performance. Traditional workstations often fall short, while high-end systems are frequently overkill or out of reach because of their cost and complexity.

Take, for example, the Arm-powered Isambard 3 supercomputer in the UK, built on Nvidia’s Grace CPU Superchip and initially hosted by the Met Office. It has been used for climate modelling, molecular simulations for Parkinson’s disease research, and drug development. Applications like these thrive on what superchips deliver: high floating-point throughput (measured in FLOPS, floating-point operations per second), fast memory access, and low-latency communication between processing units.

That’s the appeal of superchips: multi-die processors that combine CPUs, GPUs, or both in a single module, joined by a high-bandwidth chip-to-chip interconnect (NVLink-C2C) and tuned for specific workloads. Nvidia’s lineup includes the Grace Hopper (GH200) CPU-GPU pairing, the CPU-only Grace CPU Superchip (two Grace CPUs), and the latest GB200, which pairs one Grace CPU with two Blackwell GPUs. These designs balance compute flexibility, memory throughput, and interconnect efficiency, which makes them well suited to modern scientific computing.

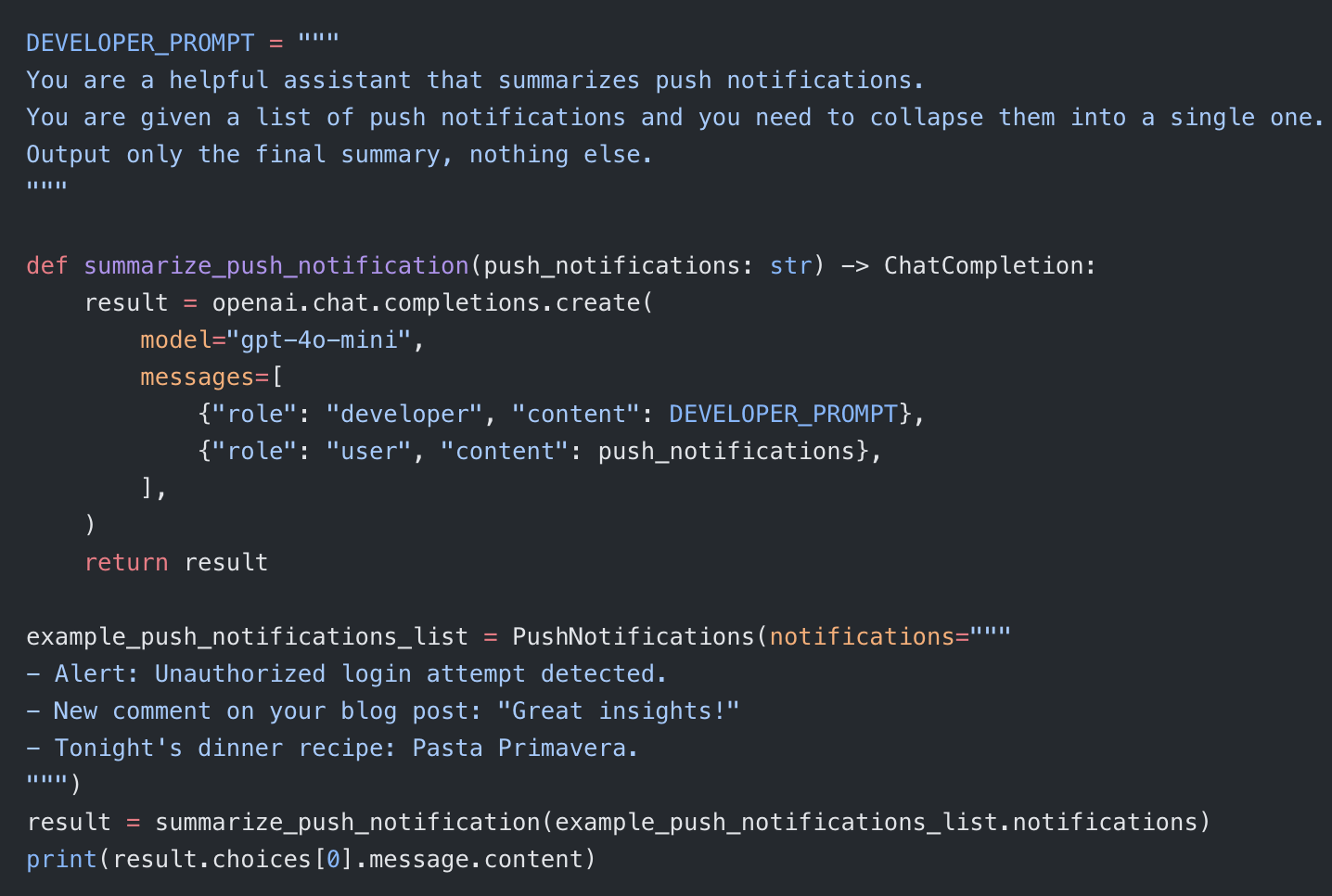

What DGX Spark brings to the table

Despite its small size, the DGX Spark doesn’t skimp on performance. It offers up to 1 petaFLOP of AI compute at FP4 precision (a figure that assumes sparsity), 128GB of LPDDR5X unified memory shared by the CPU and GPU, 20 Arm CPU cores, and up to 4TB of NVMe storage. A 200GbE ConnectX-7 NIC provides the high-throughput networking that clustered configurations need, and power draw is a modest 170W, practical for a desktop setup.

These specs are well suited to academic workflows: training and fine-tuning models, running inference, developing edge applications, or prototyping end-to-end pipelines. Crucially, the DGX Spark lets researchers experiment with the Grace Blackwell architecture firsthand, without needing grant approval for a six-figure server.
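To put the 128GB of unified memory in context, here is a rough, weights-only estimate of how large a model fits at different precisions. It is a back-of-envelope sketch, not an Nvidia sizing tool: the bytes-per-parameter values are standard, the function names are illustrative, and it deliberately ignores the KV cache, activations, optimiser state, and operating-system overhead that eat into the budget in practice.

```python
# Rough, weights-only memory estimate for a model at a given numeric precision.
# Assumption: ignores KV cache, activations, optimiser state, and OS overhead,
# all of which reduce the usable headroom in practice.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 has the same footprint
    "fp8": 1.0,
    "fp4": 0.5,
}

UNIFIED_MEMORY_GB = 128  # DGX Spark's LPDDR5X unified memory, treated as decimal GB


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Approximate weight storage in GB: billions of params x bytes per param."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, budget_gb: float = UNIFIED_MEMORY_GB) -> bool:
    """True if the weights alone fit within the memory budget."""
    return weight_footprint_gb(params_billion, precision) <= budget_gb


if __name__ == "__main__":
    for size in (8, 70, 200):
        for prec in ("fp16", "fp8", "fp4"):
            gb = weight_footprint_gb(size, prec)
            print(f"{size}B params @ {prec}: {gb:.0f} GB, fits: {fits(size, prec)}")
```

On these rough numbers, a 70-billion-parameter model fits at FP8 (about 70GB) but not at FP16 (about 140GB), while a 200-billion-parameter model only fits at FP4 (about 100GB).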

There’s also a strategic edge to this accessibility. According to UKRI guidance, capital equipment costing between £10,000 and £400,000 can be included in grant applications if it is essential to the research and no alternative is available. DGX Spark can serve as a validation platform that strengthens such funding cases, letting researchers test workloads and demonstrate value before seeking approval for larger infrastructure investments.

Software, out-of-the-box

DGX Spark ships with Nvidia DGX OS, which is based on Ubuntu and adds RTX drivers, CUDA libraries, Nvidia DOCA, and Docker support, so it is ready for AI and scientific workloads immediately. It also supports Nvidia’s developer ecosystem and the NGC (Nvidia GPU Cloud) library of SDKs and frameworks, giving researchers access to pre-optimised tools for a range of AI tasks.

That out-of-the-box readiness is key. The less time researchers spend configuring environments, the more they can focus on experimentation, hypothesis testing, and development.
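One quick way to confirm that the bundled driver stack is working is to ask `nvidia-smi`, the command-line utility that ships with Nvidia’s drivers, which GPUs it can see. The sketch below wraps that query using only the Python standard library; the function name is hypothetical, and it simply returns `None` on machines where `nvidia-smi` is not installed or the query fails.

```python
# Minimal post-setup sanity check: ask the Nvidia driver which GPUs it can see.
# Assumes nvidia-smi is on PATH (it ships with Nvidia's drivers); returns None
# when it is missing or the query fails, e.g. on a machine without the drivers.
import shutil
import subprocess
from typing import List, Optional


def visible_gpus() -> Optional[List[str]]:
    """Return one 'name, driver_version' line per GPU, or None if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=True,
            timeout=30,
        )
    except (subprocess.CalledProcessError, subprocess.TimeoutExpired, OSError):
        return None
    # Drop blank lines so the caller gets one entry per detected GPU.
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = visible_gpus()
    if gpus is None:
        print("nvidia-smi not available: driver stack not detected")
    else:
        for gpu in gpus:
            print(gpu)
```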

Clustering for more power

Though the DGX Spark is powerful on its own, Nvidia has confirmed that two units can be paired in a supported cluster configuration.

According to reporting by ServeTheHome, larger configurations aren’t officially supported yet but are technically feasible. This opens the door to creative deployments, such as building multi-node mini-clusters in lab environments or using Spark units as scalable testbeds for future production-scale workloads.
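A little arithmetic shows what pairing two units buys. This sketch assumes 128GB of memory per unit and the nominal 200Gb/s link, and treats the link as running at full line rate, which real transfers will not achieve once protocol and software overhead are included; the helper names are illustrative.

```python
# Back-of-envelope numbers for a two-unit DGX Spark pair.
# Assumptions: 128 GB of memory per unit, a nominal 200 Gb/s link, and
# transfers at full line rate (real throughput will be lower).

MEMORY_PER_UNIT_GB = 128
LINK_GBPS = 200  # link speed in gigabits per second


def aggregate_memory_gb(units: int, per_unit_gb: float = MEMORY_PER_UNIT_GB) -> float:
    """Total memory across units. It is split between nodes, not one shared pool."""
    return units * per_unit_gb


def ideal_transfer_seconds(payload_gb: float, link_gbps: float = LINK_GBPS) -> float:
    """Lower bound on time to move payload_gb gigabytes (x8 converts bytes to bits)."""
    return payload_gb * 8 / link_gbps


if __name__ == "__main__":
    print(f"Two units: {aggregate_memory_gb(2)} GB aggregate memory")
    print(f"Moving 100 GB of shards: at least {ideal_transfer_seconds(100):.1f} s")
```

On these assumptions, two units provide 256GB of aggregate memory, and moving 100GB between them takes at least 4 seconds. Because that memory is spread across two nodes, a model too large for one unit has to be partitioned across both (for example with tensor or pipeline parallelism), and the link speed bounds how much cross-node traffic that split can tolerate.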

The DGX Spark signals the arrival of a new tier in AI infrastructure, sitting between consumer GPUs and enterprise HPC systems. It won’t replace hyperscale data centers or rack-mounted DGX servers. But for researchers and developers looking to test, tinker, and iterate, it puts the future of compute within arm’s reach.

As AI continues to push into disciplines from climate science to biomedical research, tools like DGX Spark could play a critical role in accelerating innovation at the edge. Supercomputing, it turns out, doesn’t always need a supercomputer.

Image Credit: Nvidia

Allan Kaye is MD of Vespertec, a data center infrastructure firm and Nvidia Elite Compute and Networking Partner.