LLMs have made significant strides in automated writing, particularly in tasks like open-domain long-form generation and topic-specific reports. Many approaches […]

Category: Machine Learning

Stanford Researchers Introduce BIOMEDICA: A Scalable AI Framework for Advancing Biomedical Vision-Language Models with Large-Scale Multimodal Datasets

The development of VLMs in the biomedical domain faces challenges due to the lack of large-scale, annotated, and publicly accessible […]

NVIDIA AI Introduces Omni-RGPT: A Unified Multimodal Large Language Model for Seamless Region-level Understanding in Images and Videos

Multimodal large language models (MLLMs) bridge vision and language, enabling effective interpretation of visual content. However, achieving precise and scalable […]

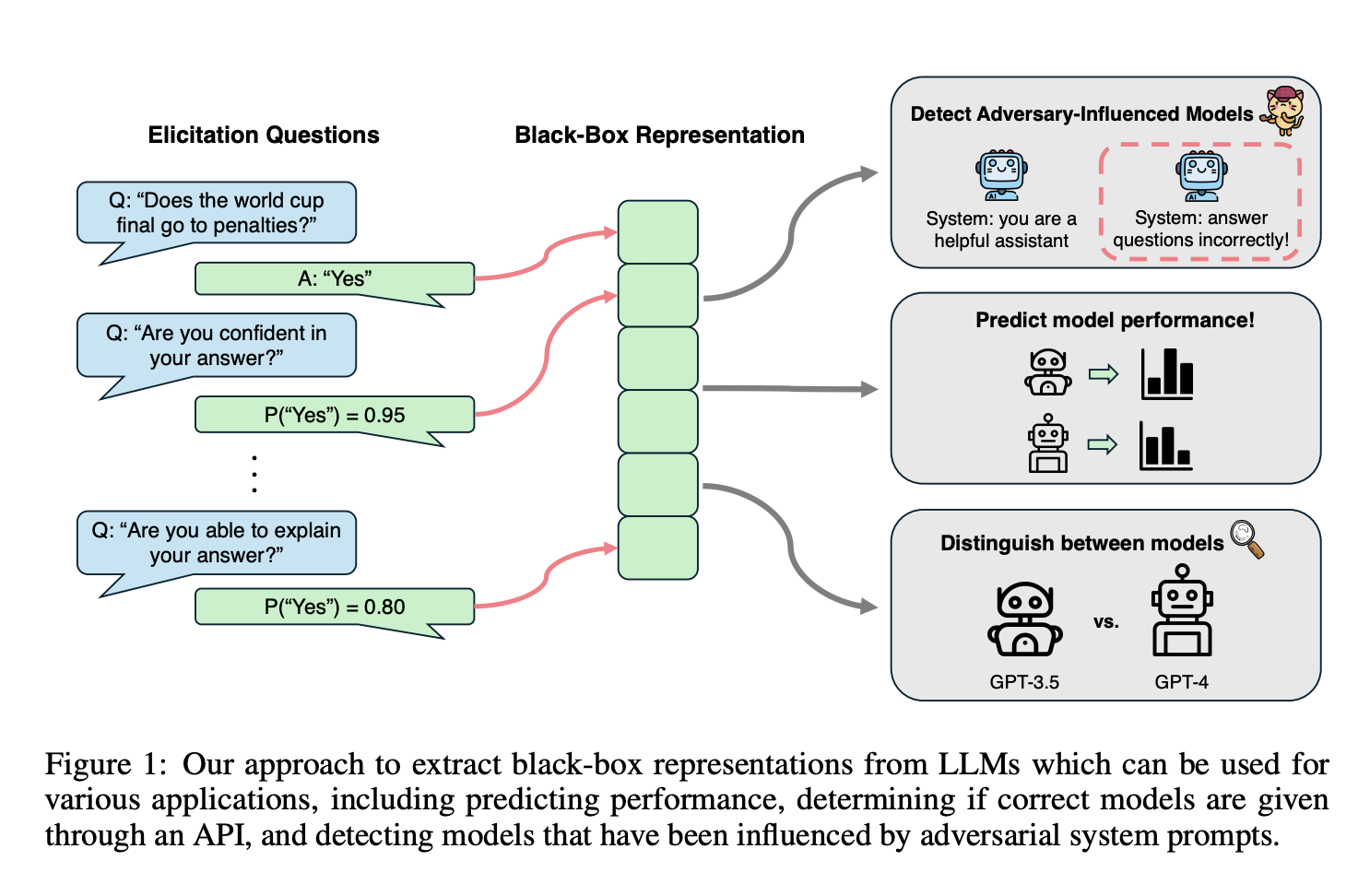

CMU Researchers Propose QueRE: An AI Approach to Extract Useful Features from a LLM

Large Language Models (LLMs) have become integral to various artificial intelligence applications, demonstrating capabilities in natural language processing, decision-making, and […]

Meet Tensor Product Attention (TPA): Revolutionizing Memory Efficiency in Language Models

Large language models (LLMs) have become central to natural language processing (NLP), excelling in tasks such as text generation, comprehension, […]

Sakana AI Introduces Transformer²: A Machine Learning System that Dynamically Adjusts Its Weights for Various Tasks

LLMs are essential in industries such as education, healthcare, and customer service, where natural language understanding plays a crucial role. […]

Enhancing Retrieval-Augmented Generation: Efficient Quote Extraction for Scalable and Accurate NLP Systems

LLMs have significantly advanced natural language processing, excelling in tasks like open-domain question answering, summarization, and conversational AI. However, their […]

Google AI Research Introduces Titans: A New Machine Learning Architecture with Attention and a Meta in-Context Memory that Learns How to Memorize at Test Time

Large Language Models (LLMs) based on Transformer architectures have revolutionized sequence modeling through their remarkable in-context learning capabilities and ability […]

MiniMax-Text-01 and MiniMax-VL-01 Released: Scalable Models with Lightning Attention, 456B Parameters, 4M Token Contexts, and State-of-the-Art Accuracy

Large Language Models (LLMs) and Vision-Language Models (VLMs) transform natural language understanding, multimodal integration, and complex reasoning tasks. Yet, one […]

Alibaba Qwen Team Just Released ‘Lessons of Developing Process Reward Models in Mathematical Reasoning’ along with State-of-the-Art 7B and 72B PRMs

Mathematical reasoning has long been a significant challenge for Large Language Models (LLMs). Errors in intermediate reasoning steps can undermine […]