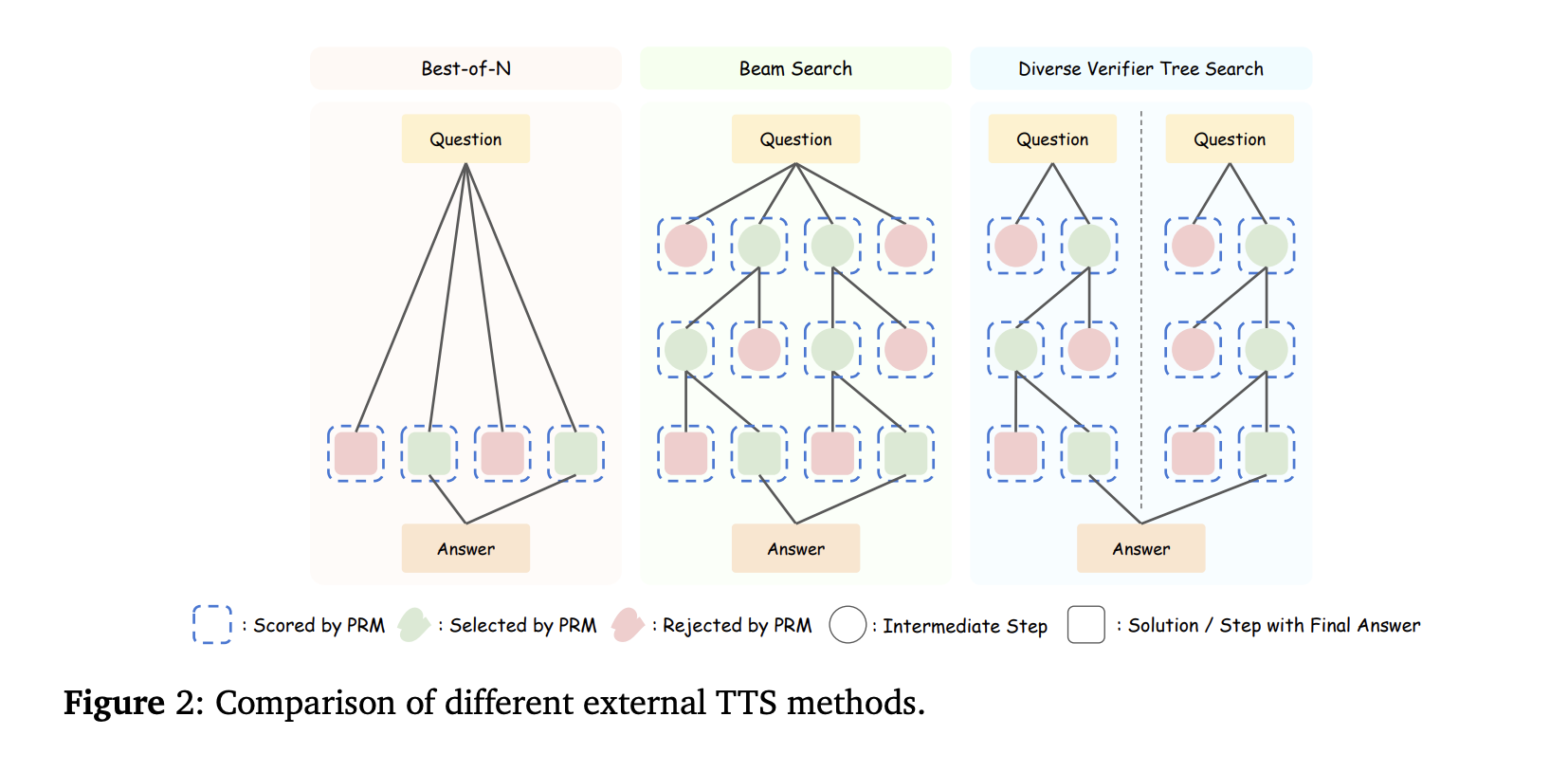

Test-Time Scaling (TTS) is a crucial technique for enhancing the performance of LLMs by leveraging additional computational resources during inference. […]

Category: Machine Learning

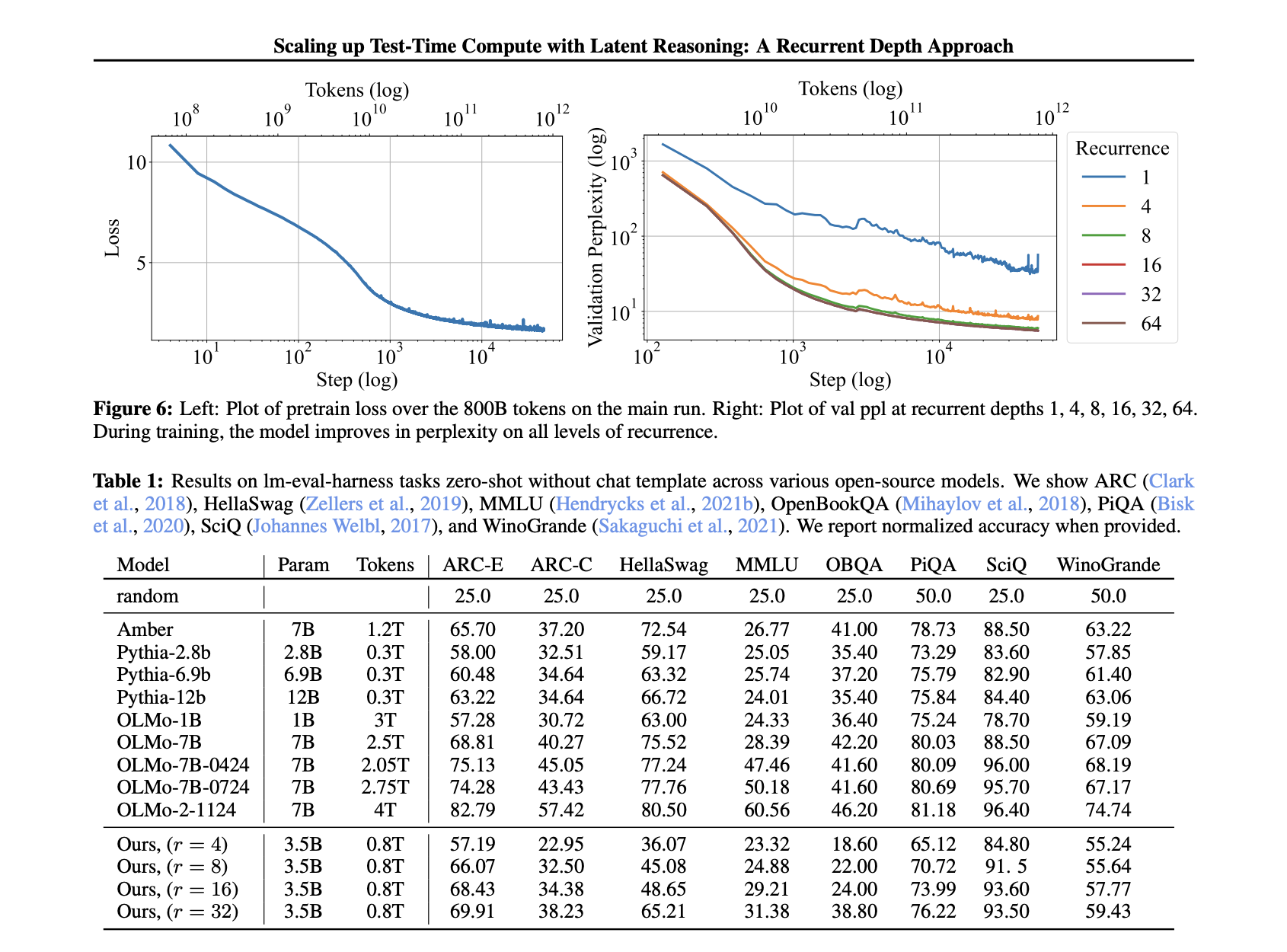

Meet Huginn-3.5B: A New AI Reasoning Model with Scalable Latent Computation

Artificial intelligence models face a fundamental challenge in efficiently scaling their reasoning capabilities at test time. While increasing model size […]

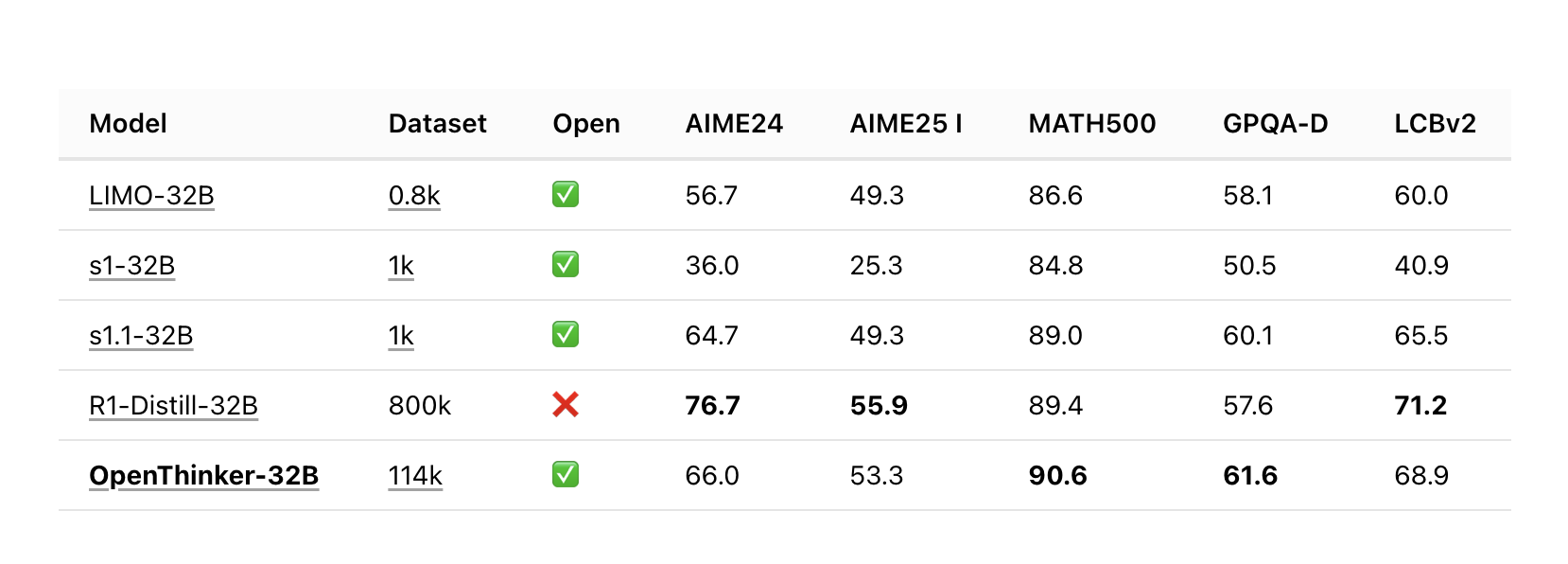

Meet OpenThinker-32B: A State-of-the-Art Open-Data Reasoning Model

Artificial intelligence has made significant strides, yet developing models capable of nuanced reasoning remains a challenge. Many existing models struggle […]

LIMO: The AI Model that Proves Quality Training Beats Quantity

Reasoning tasks remain a major challenge for most language models. Instilling a reasoning aptitude in models, particularly […]

Convergence Labs Introduces the Large Memory Model (LM2): A Memory-Augmented Transformer Architecture Designed to Address Long Context Reasoning Challenges

Transformer-based models have significantly advanced natural language processing (NLP), excelling in various tasks. However, they struggle with reasoning over long […]

OpenAI Introduces Competitive Programming with Large Reasoning Models

Competitive programming has long served as a benchmark for assessing problem-solving and coding skills. These challenges require advanced computational thinking, […]

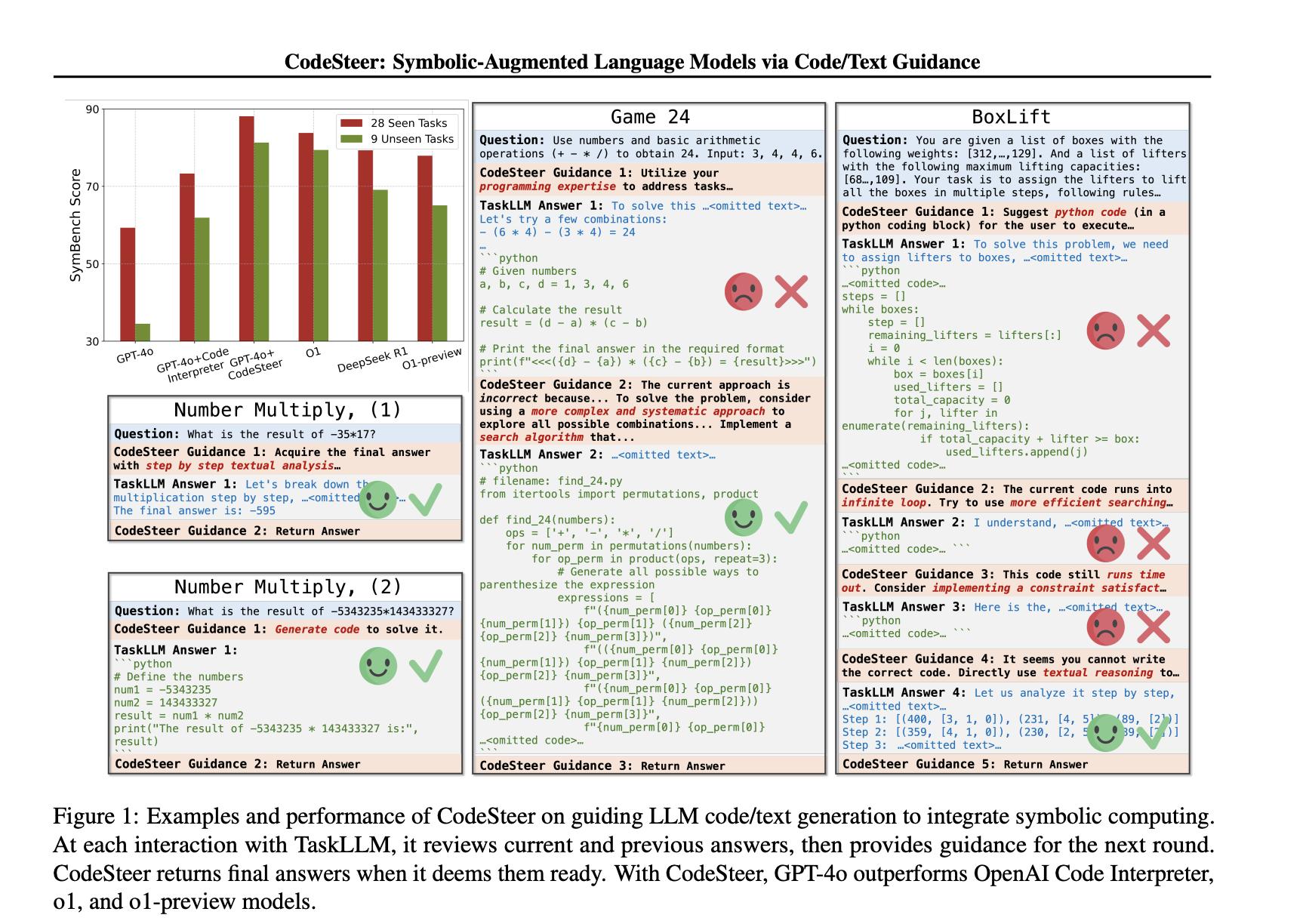

This AI Paper Introduces CodeSteer: Symbolic-Augmented Language Models via Code/Text Guidance

Large language models (LLMs) struggle with precise computations, symbolic manipulations, and algorithmic tasks, often requiring structured problem-solving approaches. While language […]

Sam Altman: OpenAI is not for sale, even for Elon Musk’s $97 billion offer

A brief history of Musk vs. Altman: The beef between Musk and Altman goes back to 2015, when the pair […]

Shanghai AI Lab Releases OREAL-7B and OREAL-32B: Advancing Mathematical Reasoning with Outcome Reward-Based Reinforcement Learning

Mathematical reasoning remains a difficult area for artificial intelligence (AI) due to the complexity of problem-solving and the need for […]

This AI Paper Explores Long Chain-of-Thought Reasoning: Enhancing Large Language Models with Reinforcement Learning and Supervised Fine-Tuning

Large language models (LLMs) have demonstrated proficiency in solving complex problems across mathematics, scientific research, and software engineering. Chain-of-thought (CoT) […]