Improving the reasoning capabilities of large language models (LLMs) without architectural changes is a core challenge in advancing AI alignment […]

Category: Machine Learning

Crome: Google DeepMind’s Causal Framework for Robust Reward Modeling in LLM Alignment

Reward models are fundamental components for aligning LLMs with human feedback, yet they are vulnerable to reward hacking. […]

Thought Anchors: A Machine Learning Framework for Identifying and Measuring Key Reasoning Steps in Large Language Models with Precision

Understanding the Limits of Current Interpretability Tools in LLMs. AI models, such as DeepSeek and GPT variants, rely on billions […]

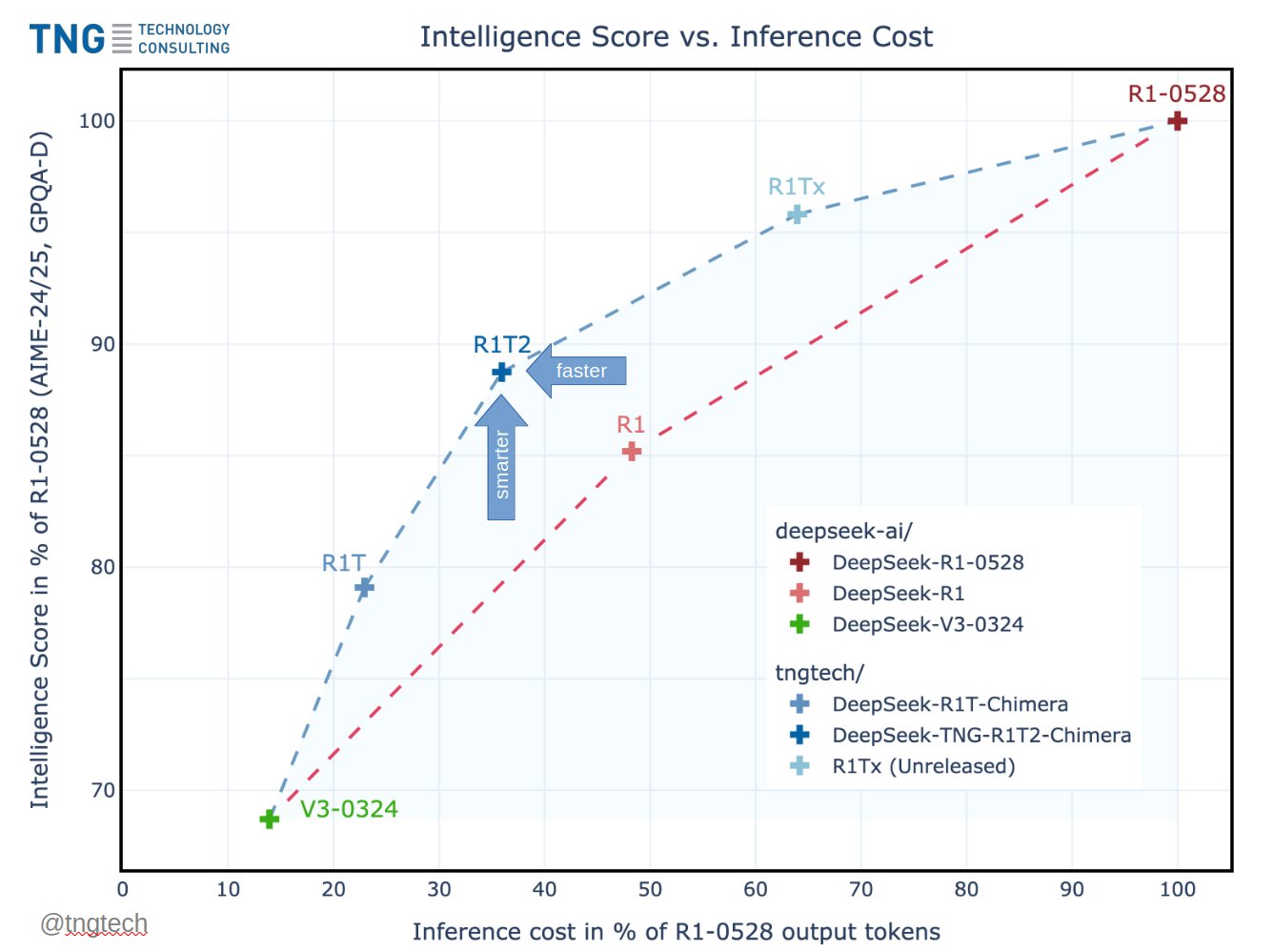

DeepSeek R1T2 Chimera: 200% Faster Than R1-0528 With Improved Reasoning and Compact Output

TNG Technology Consulting has unveiled DeepSeek-TNG R1T2 Chimera, a new Assembly-of-Experts (AoE) model that blends intelligence and speed through an […]

Shanghai Jiao Tong Researchers Propose OctoThinker for Reinforcement Learning-Scalable LLM Development

Introduction: Reinforcement Learning Progress through Chain-of-Thought Prompting. LLMs have shown excellent progress in complex reasoning tasks through CoT prompting combined […]

ReasonFlux-PRM: A Trajectory-Aware Reward Model Enhancing Chain-of-Thought Reasoning in LLMs

Understanding the Role of Chain-of-Thought in LLMs. Large language models are increasingly being used to solve complex tasks such as […]

Baidu Open Sources ERNIE 4.5: LLM Series Scaling from 0.3B to 424B Parameters

Baidu has officially open-sourced its latest ERNIE 4.5 series, a powerful family of foundation models designed for enhanced language understanding, […]

OMEGA: A Structured Math Benchmark to Probe the Reasoning Limits of LLMs

Introduction to Generalization in Mathematical Reasoning. Large-scale language models with long CoT reasoning, such as DeepSeek-R1, have shown good results […]

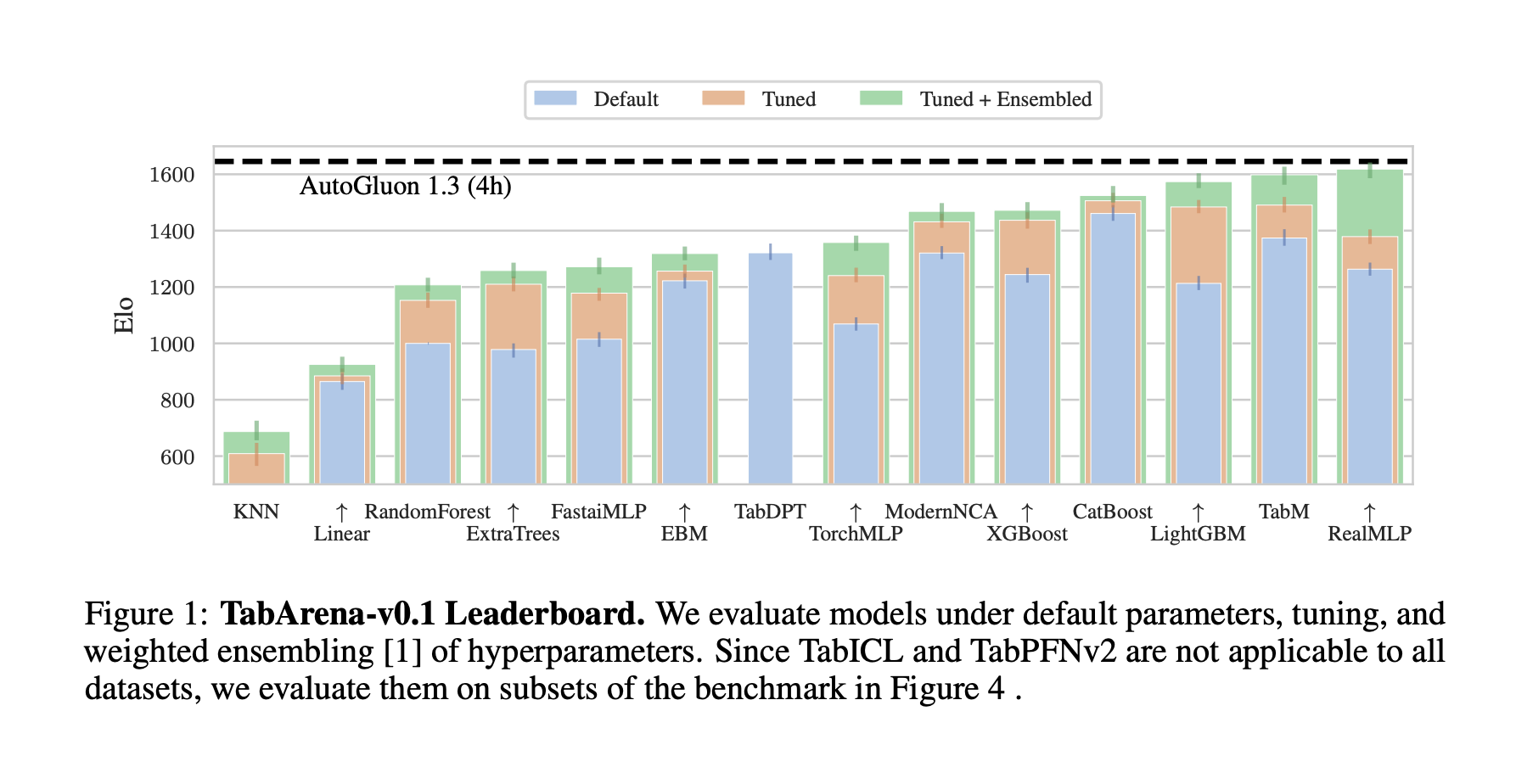

TabArena: Benchmarking Tabular Machine Learning with Reproducibility and Ensembling at Scale

Understanding the Importance of Benchmarking in Tabular ML. Machine learning on tabular data focuses on building models that learn patterns […]

MDM-Prime: A Generalized Masked Diffusion Models (MDMs) Framework that Enables Partially Unmasked Tokens during Sampling

Introduction to MDMs and Their Inefficiencies. Masked Diffusion Models (MDMs) are powerful tools for generating discrete data, such as text […]