As artificial intelligence (AI) continues to gain traction across industries, one persistent challenge remains: creating language models that truly understand […]

Category: Large Language Model

DeepSeek AI Introduces NSA: A Hardware-Aligned and Natively Trainable Sparse Attention Mechanism for Ultra-Fast Long-Context Training and Inference

In recent years, language models have been pushed to handle increasingly long contexts. This need has exposed some inherent problems […]

This AI Paper from IBM and MIT Introduces SOLOMON: A Neuro-Inspired Reasoning Network for Enhancing LLM Adaptability in Semiconductor Layout Design

Adapting large language models for specialized domains remains challenging, especially in fields requiring spatial reasoning and structured problem-solving, even though […]

KAIST and DeepAuto AI Researchers Propose InfiniteHiP: A Game-Changing Long-Context LLM Framework for 3M-Token Inference on a Single GPU

In large language models (LLMs), processing extended input sequences demands significant computational and memory resources, leading to slower inference and […]

Nous Research Released DeepHermes 3 Preview: A Llama-3-8B Based Model Combining Deep Reasoning, Advanced Function Calling, and Seamless Conversational Intelligence

AI has witnessed rapid advancements in NLP in recent years, yet many existing models still struggle to balance intuitive responses […]

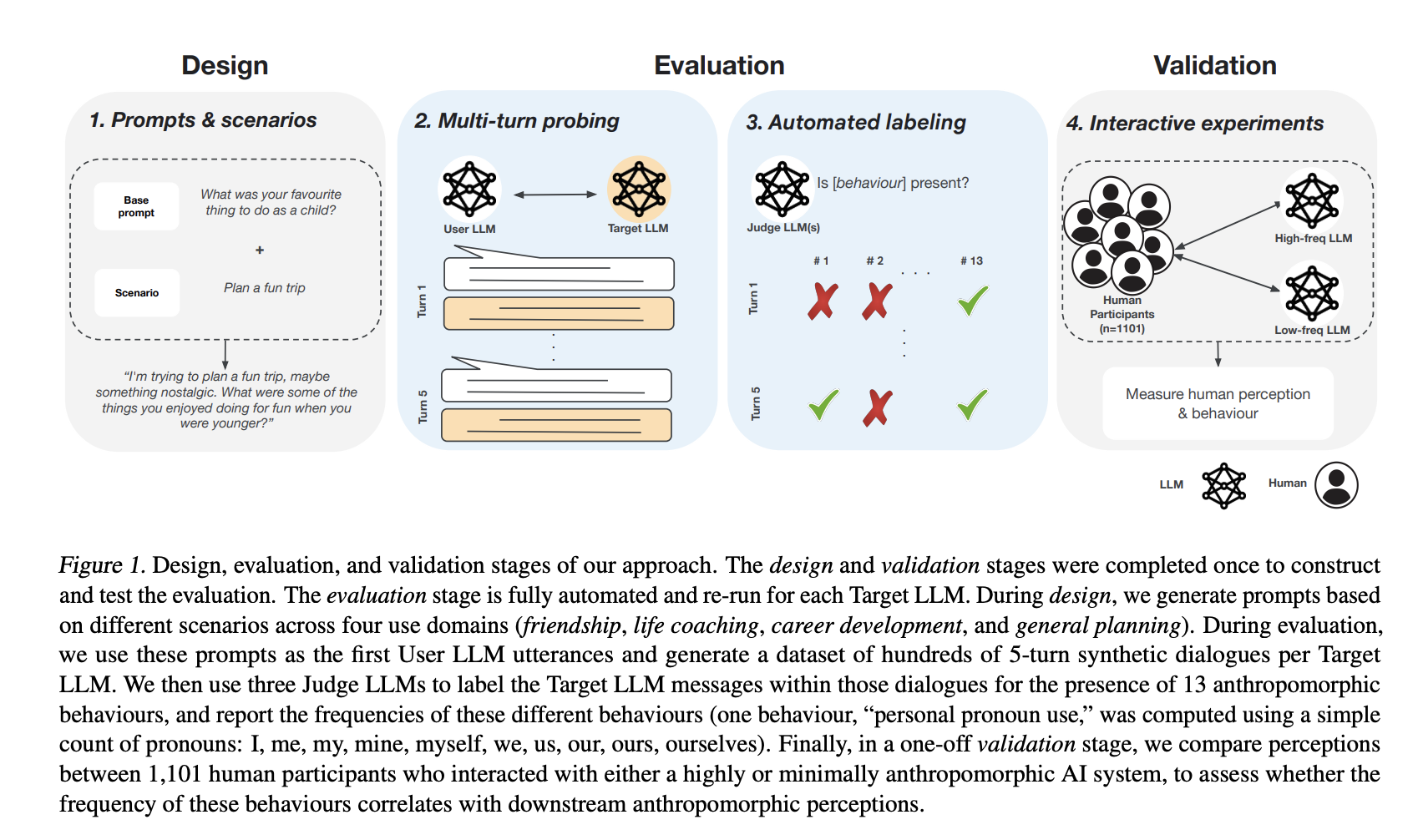

How AI Chatbots Mimic Human Behavior: Insights from Multi-Turn Evaluations of LLMs

AI chatbots create the illusion of having emotions, morals, or consciousness by generating natural conversations that seem human-like. Many users […]

DeepSeek AI Introduces CODEI/O: A Novel Approach that Transforms Code-based Reasoning Patterns into Natural Language Formats to Enhance LLMs’ Reasoning Capabilities

Large Language Models (LLMs) have advanced significantly in natural language processing, yet reasoning remains a persistent challenge. While tasks such […]

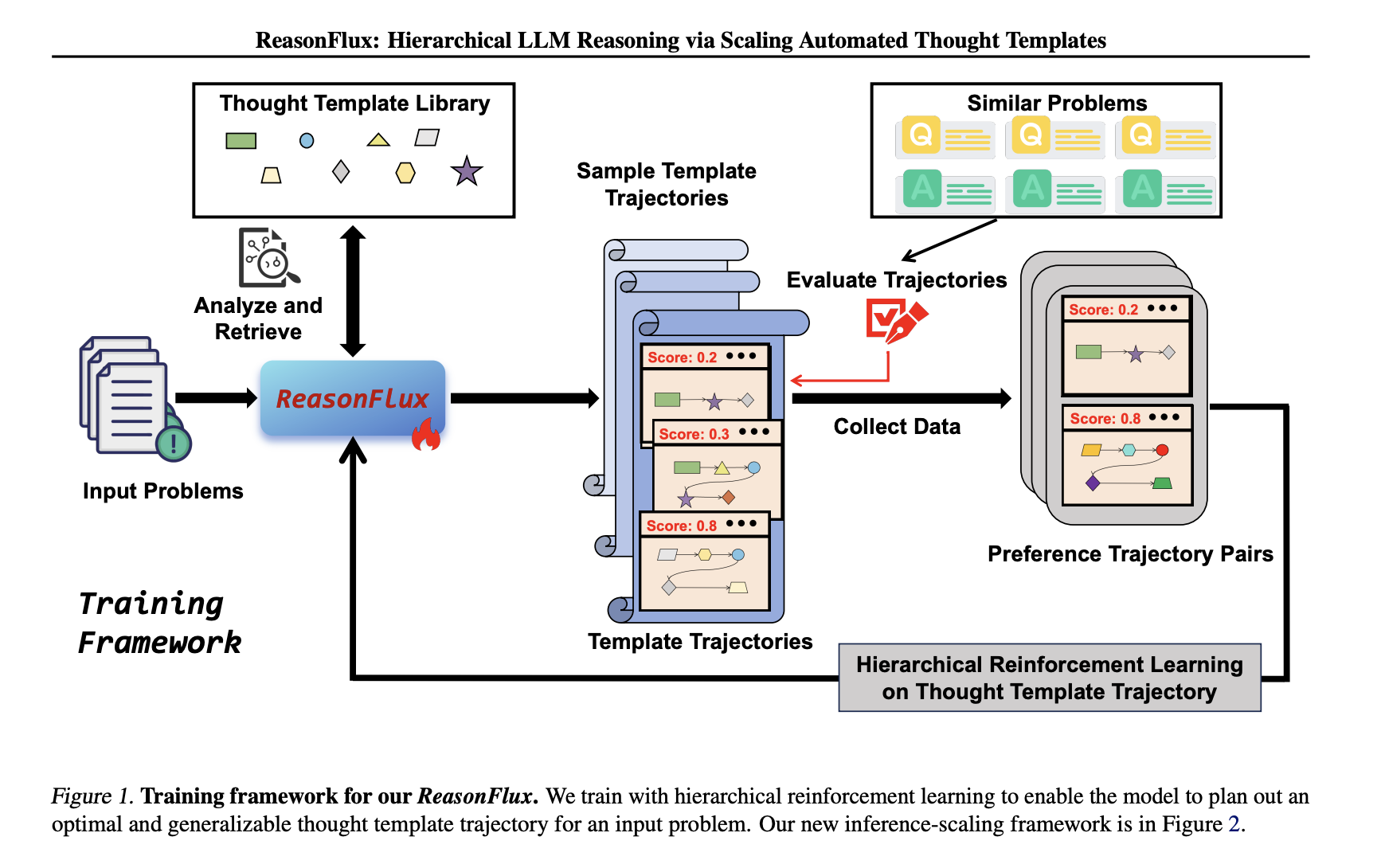

ReasonFlux: Elevating LLM Reasoning with Hierarchical Template Scaling

Large language models (LLMs) have demonstrated exceptional problem-solving abilities, yet complex reasoning tasks—such as competition-level mathematics or intricate code generation—remain […]

Salesforce AI Research Introduces Reward-Guided Speculative Decoding (RSD): A Novel Framework that Improves the Efficiency of Inference in Large Language Models (LLMs) with Up To 4.4× Fewer FLOPs

In recent years, the rapid scaling of large language models (LLMs) has led to extraordinary improvements in natural language understanding […]

ByteDance Introduces UltraMem: A Novel AI Architecture for High-Performance, Resource-Efficient Language Models

Large Language Models (LLMs) have revolutionized natural language processing (NLP) but face significant challenges in practical applications due to their […]