Creating songs from text is difficult because it involves generating vocals and instrumental music together. Songs are unique as they […]

Category: Large Language Model

Allen Institute for AI Released olmOCR: A High-Performance Open Source Toolkit Designed to Convert PDFs and Document Images into Clean and Structured Plain Text

Access to high-quality textual data is crucial for advancing language models in the digital age. Modern AI systems rely on […]

LongPO: Enhancing Long-Context Alignment in LLMs Through Self-Optimized Short-to-Long Preference Learning

LLMs have exhibited impressive capabilities through extensive pretraining and alignment techniques. However, while they excel in short-context tasks, their performance […]

Convergence Releases Proxy Lite: A Mini, Open-Weights Version of Proxy Assistant Performing Pretty Well on UI Navigation Tasks

In today’s digital landscape, automating interactions with web content remains a nuanced challenge. Many existing solutions are resource-intensive and tailored […]

DeepSeek AI Releases DeepEP: An Open-Source EP Communication Library for MoE Model Training and Inference

Large language models that use the Mixture-of-Experts (MoE) architecture have enabled significant increases in model capacity without a corresponding rise […]

This AI Paper from Menlo Research Introduces AlphaMaze: A Two-Stage Training Framework for Enhancing Spatial Reasoning in Large Language Models

Artificial intelligence continues to advance in natural language processing but still faces challenges in spatial reasoning tasks. Visual-spatial reasoning is […]

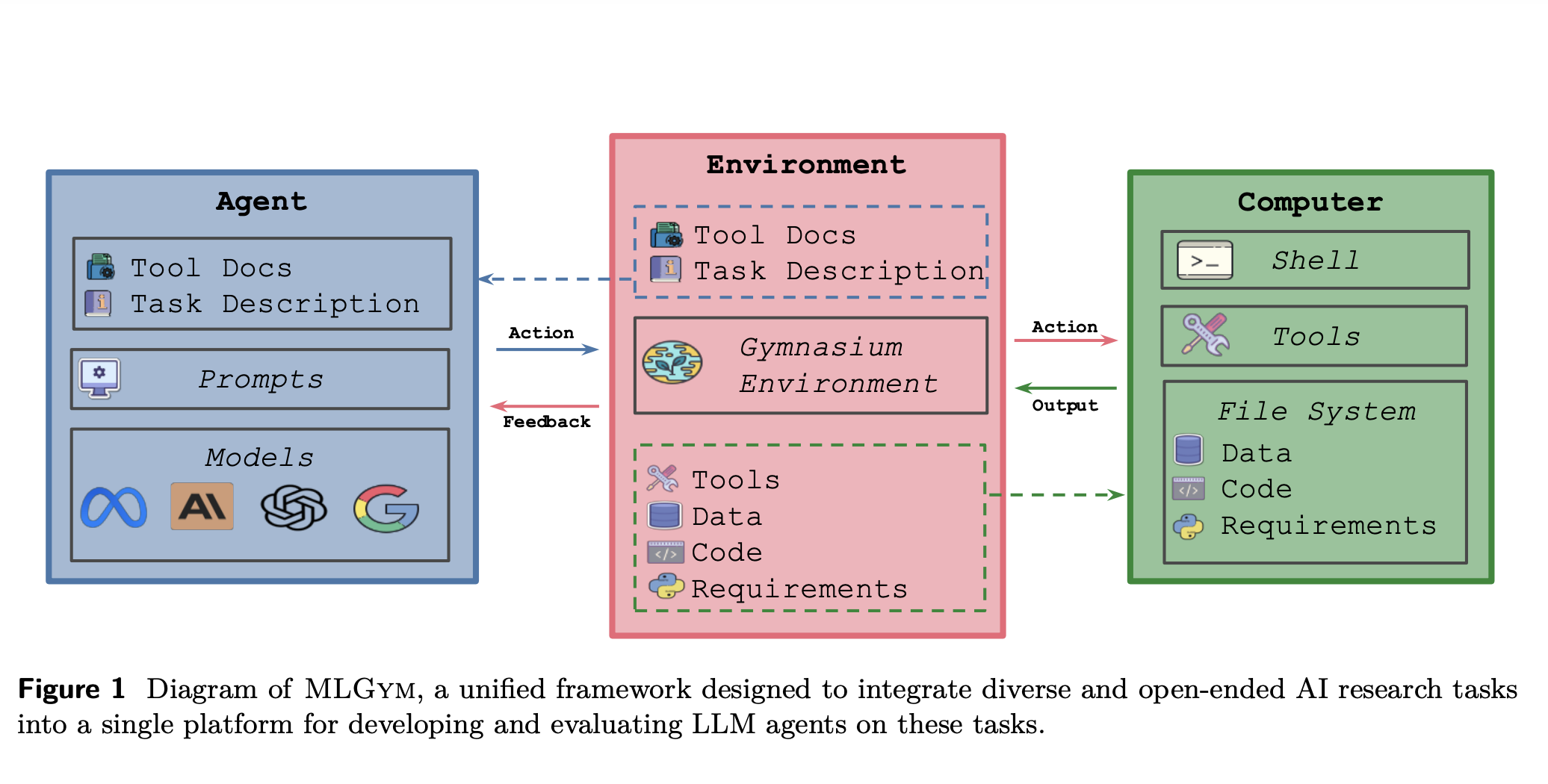

Meta AI Introduces MLGym: A New AI Framework and Benchmark for Advancing AI Research Agents

The ambition to accelerate scientific discovery through AI has been longstanding, with early efforts such as the Oak Ridge Applied […]

Moonshot AI and UCLA Researchers Release Moonlight: A 3B/16B-Parameter Mixture-of-Experts (MoE) Model Trained with 5.7T Tokens Using Muon Optimizer

Training large language models (LLMs) has become central to advancing artificial intelligence, yet it is not without its challenges. As […]

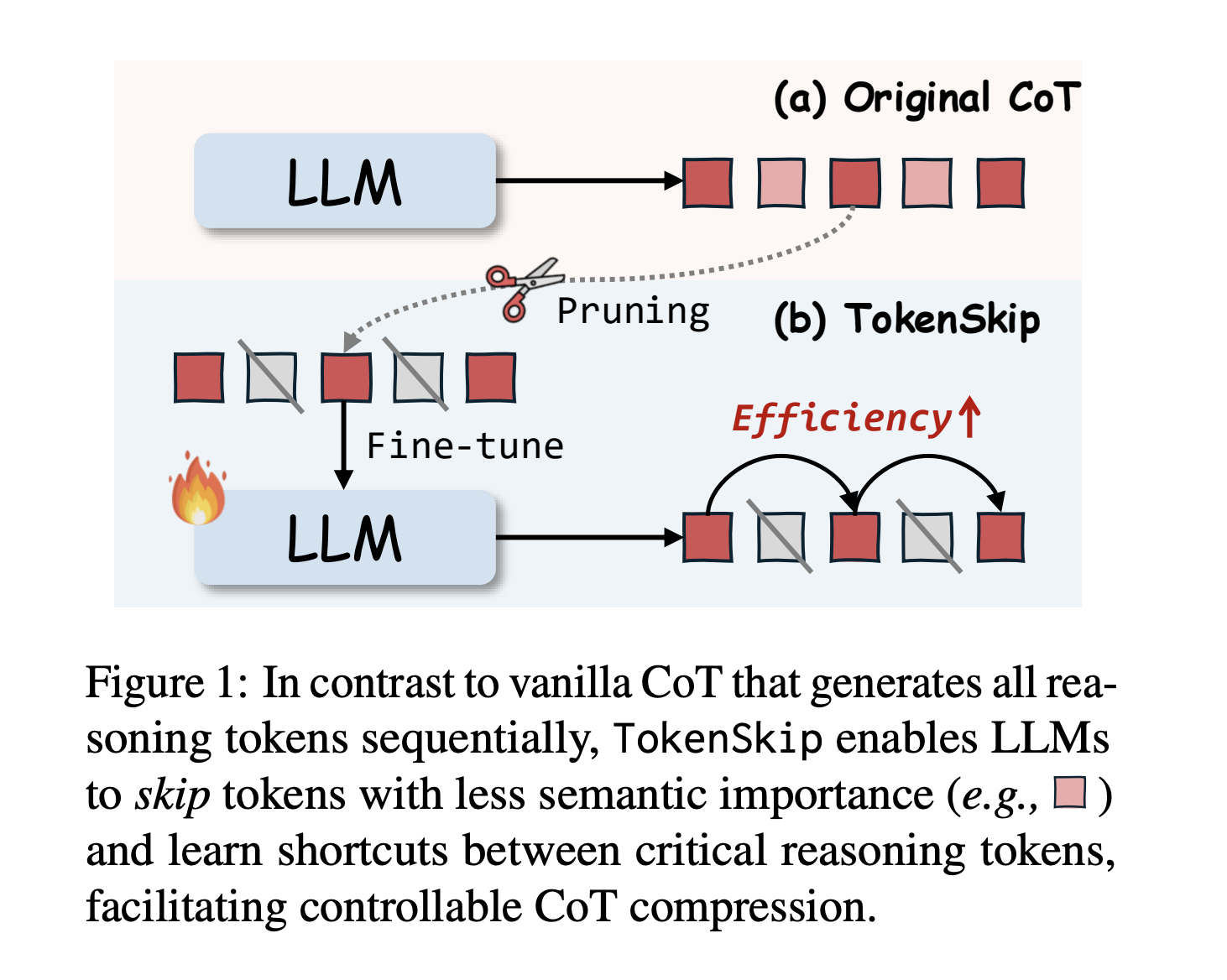

TokenSkip: Optimizing Chain-of-Thought Reasoning in LLMs Through Controllable Token Compression

Large Language Models (LLMs) face significant challenges in complex reasoning tasks, despite the breakthrough advances achieved through Chain-of-Thought (CoT) prompting. […]

Stanford Researchers Introduce OctoTools: A Training-Free Open-Source Agentic AI Framework Designed to Tackle Complex Reasoning Across Diverse Domains

Large language models (LLMs) struggle with complex reasoning tasks that require multiple steps, domain-specific knowledge, or external tool integration. […]