In today’s rapidly evolving digital landscape, the need for accessible, efficient language models is increasingly evident. Traditional large-scale models have […]

Category: Large Language Model

Alibaba Released Babel: An Open Multilingual Large Language Model (LLM) Serving Over 90% of Global Speakers

Most existing LLMs prioritize languages with abundant training resources, such as English, French, and German, while widely spoken but underrepresented […]

Qwen Releases QwQ-32B: A 32B Reasoning Model that Achieves Significantly Enhanced Performance in Downstream Tasks

Despite significant progress in natural language processing, many AI systems continue to encounter difficulties with advanced reasoning, especially when faced […]

Researchers at Stanford Introduce LLM-Lasso: A Novel Machine Learning Framework that Leverages Large Language Models (LLMs) to Guide Feature Selection in Lasso (ℓ1) Regression

Feature selection plays a crucial role in statistical learning by helping models focus on the most relevant predictors while reducing […]

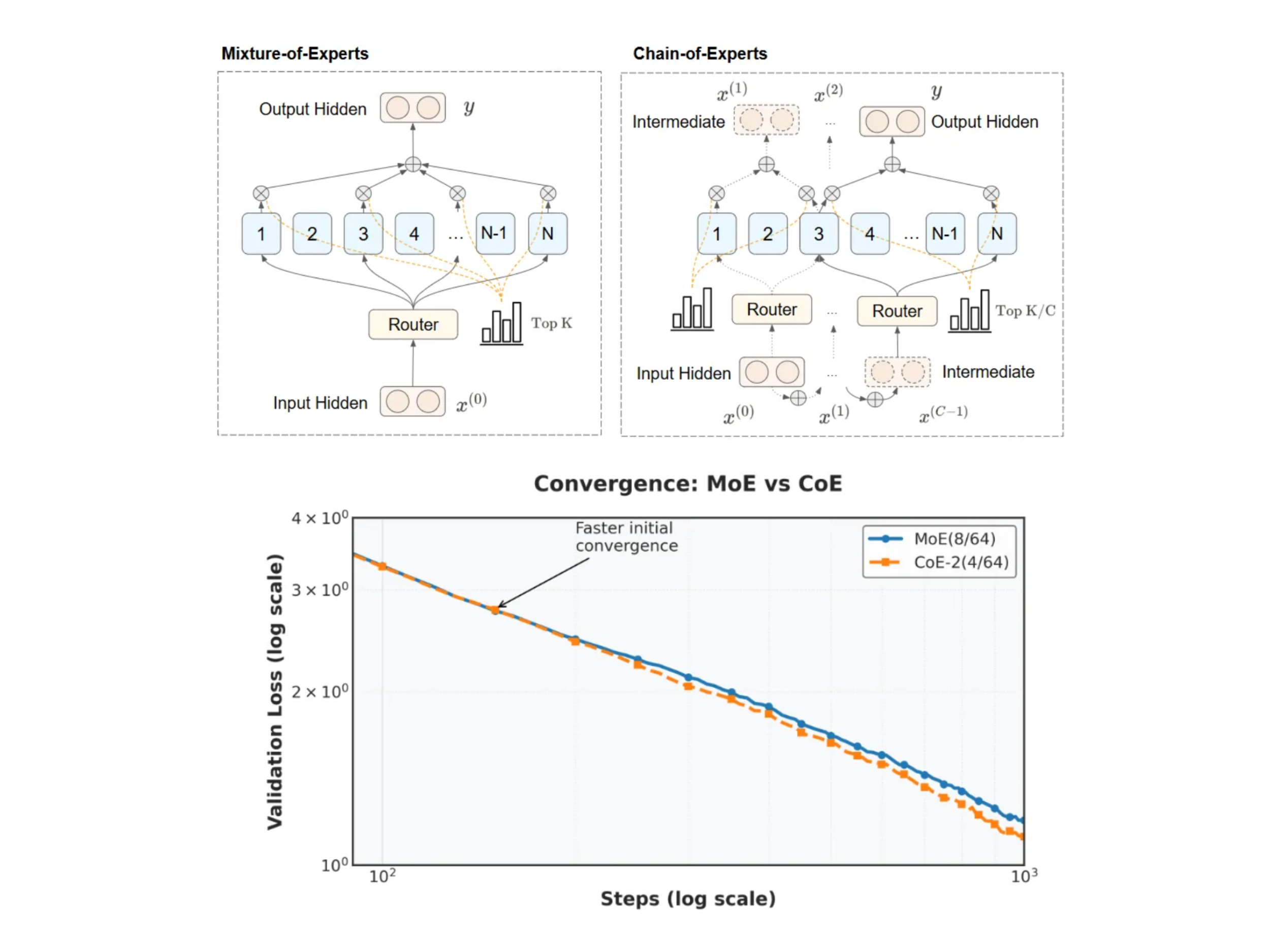

Rethinking MoE Architectures: A Measured Look at the Chain-of-Experts Approach

Large language models have significantly advanced our understanding of artificial intelligence, yet scaling these models efficiently remains challenging. Traditional Mixture-of-Experts […]

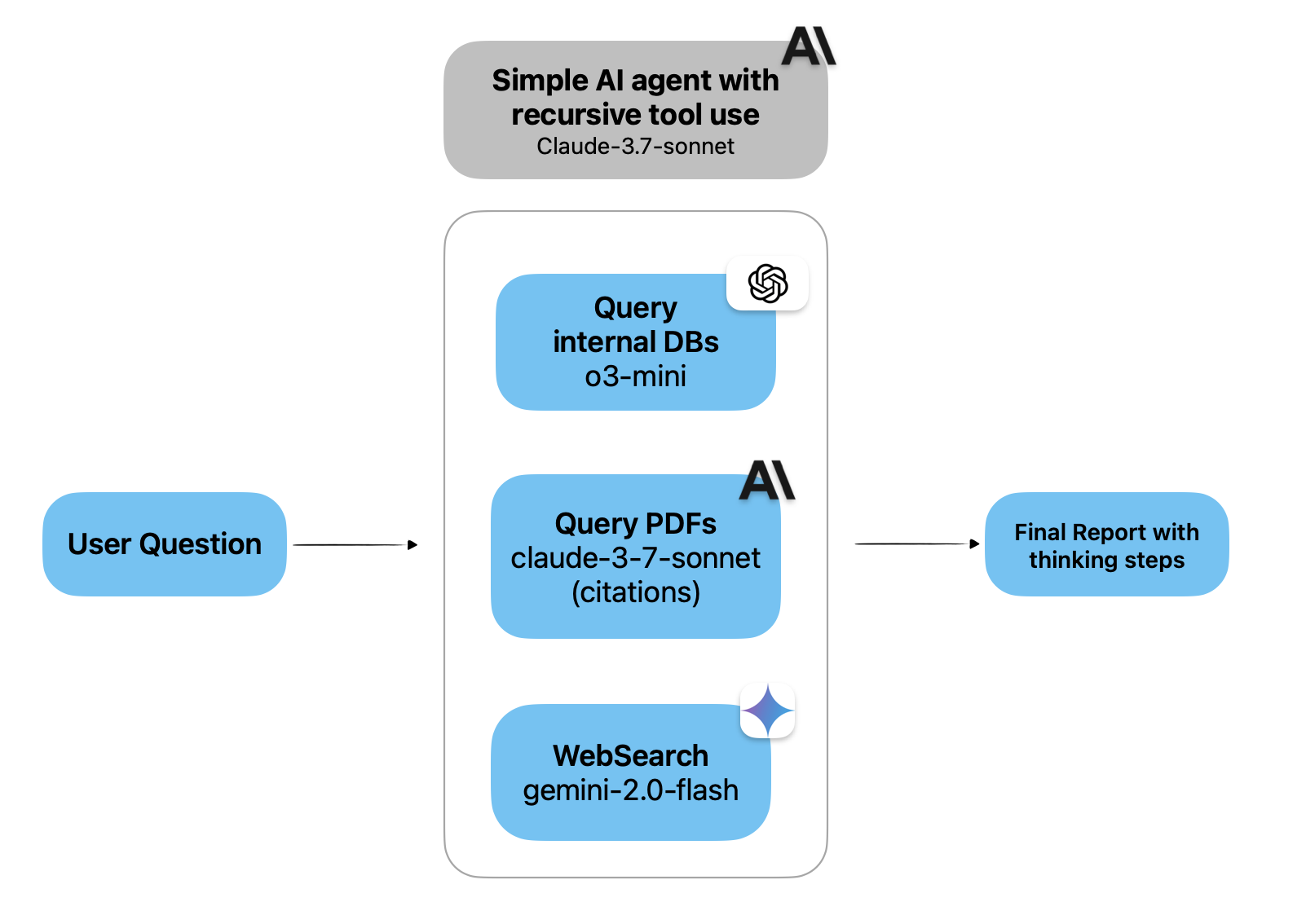

Defog AI Open Sources Introspect: MIT-Licensed Deep-Research for Your Internal Data

Modern enterprises face a myriad of challenges when it comes to internal data research. Data today is scattered across various […]

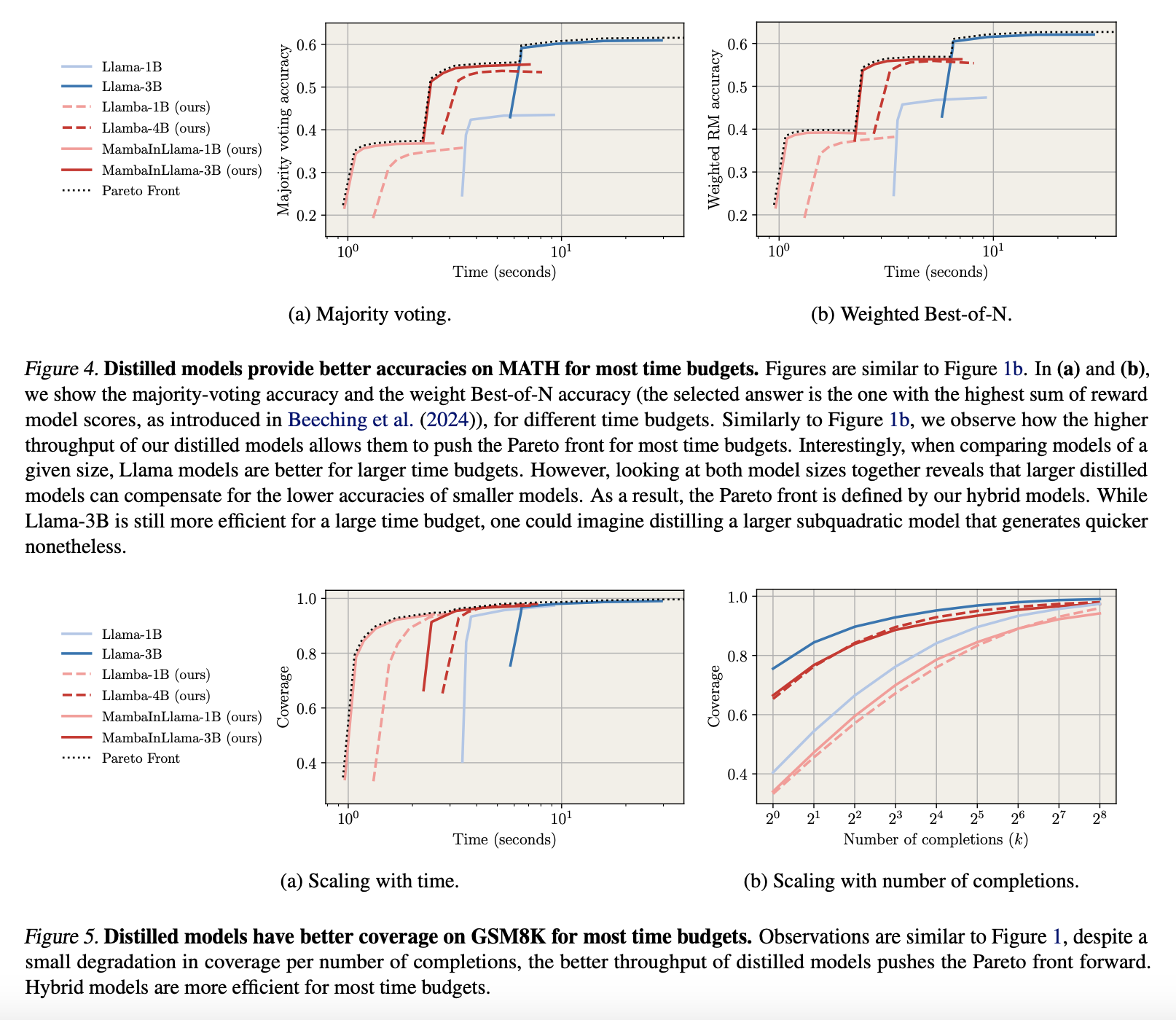

Accelerating AI: How Distilled Reasoners Scale Inference Compute for Faster, Smarter LLMs

Improving how large language models (LLMs) handle complex reasoning tasks while keeping computational costs low is a challenge. Generating multiple […]

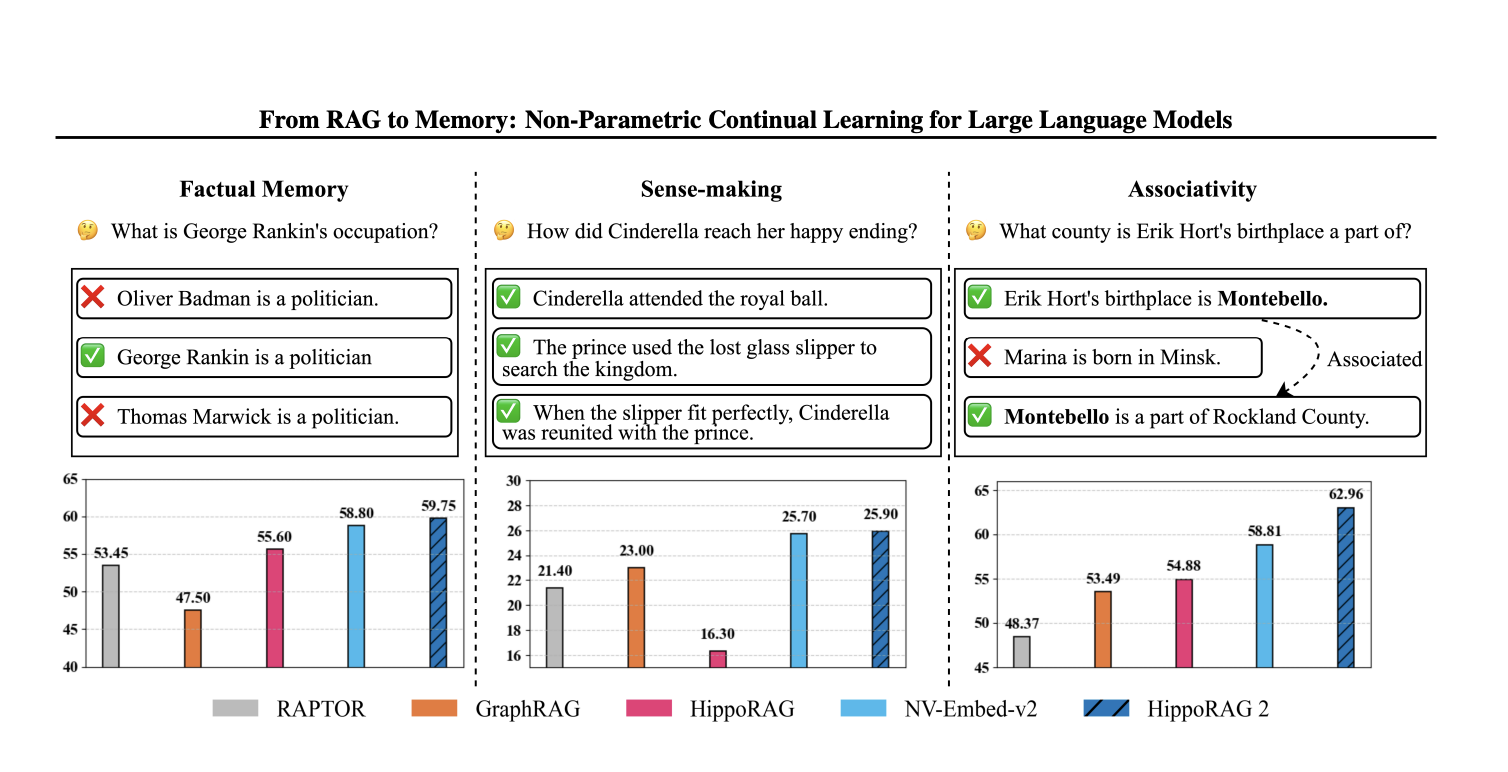

HippoRAG 2: Advancing Long-Term Memory and Contextual Retrieval in Large Language Models

LLMs face challenges in continual learning due to the limitations of parametric knowledge retention, leading to the widespread adoption of […]

Researchers from UCLA, UC Merced and Adobe Propose METAL: A Multi-Agent Framework that Divides the Task of Chart Generation into the Iterative Collaboration among Specialized Agents

Creating charts that accurately reflect complex data remains a nuanced challenge in today’s data visualization landscape. Often, the task involves […]

LightThinker: Dynamic Compression of Intermediate Thoughts for More Efficient LLM Reasoning

Methods like Chain-of-Thought (CoT) prompting have enhanced reasoning by breaking complex problems into sequential sub-steps. More recent advances, such as […]