The project developer for one of the Internet’s most popular networking tools is scrapping its vulnerability reward program after being […]

Tag: LLMs

A single click mounted a covert, multistage attack against Copilot

Microsoft has fixed a vulnerability in its Copilot AI assistant that allowed hackers to pluck a host of sensitive user […]

Signal creator Moxie Marlinspike wants to do for AI what he did for messaging

On Confer, remote attestation allows anyone to reproduce the bit-by-bit outputs that confirm that the publicly available proxy and image […]

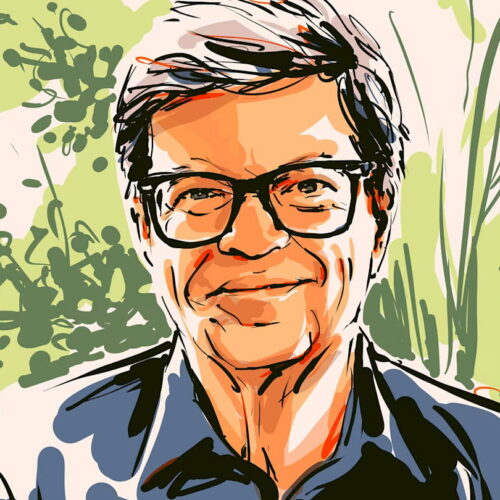

Computer scientist Yann LeCun: “Intelligence really is about learning”

The AI pioneer talks about stepping down from Meta, limits of large language models. I arrive 10 minutes ahead of […]

No, Grok can’t really “apologize” for posting non-consensual sexual images

Despite reporting to the contrary, there’s evidence to suggest that Grok isn’t sorry at all about reports that it generated […]

World’s largest shadow library made a 300TB copy of Spotify’s most streamed songs

But Anna’s Archive is clearly working to support AI developers, another noted, pointing out that Anna’s Archive promotes selling “high-speed […]

LLMs’ impact on science: Booming publications, stagnating quality

Once researchers turn to LLMs, paper counts go up, quality does not. There have been a number […]

Google releases VaultGemma, its first privacy-preserving LLM

The companies seeking to build larger AI models have been increasingly stymied by a lack of high-quality training data. As […]

Study: Social media probably can’t be fixed

“The [structural] mechanism producing these problematic outcomes is really robust and hard to resolve.” […]

AI industry horrified to face largest copyright class action ever certified

According to the groups, allowing copyright class actions in AI training cases will result in a future where copyright questions […]