Category: Guardrail

Safeguarding Agentic AI Systems: NVIDIA’s Open-Source Safety Recipe

As large language models (LLMs) evolve from simple text generators to agentic systems that can plan, reason, and act autonomously, there […]

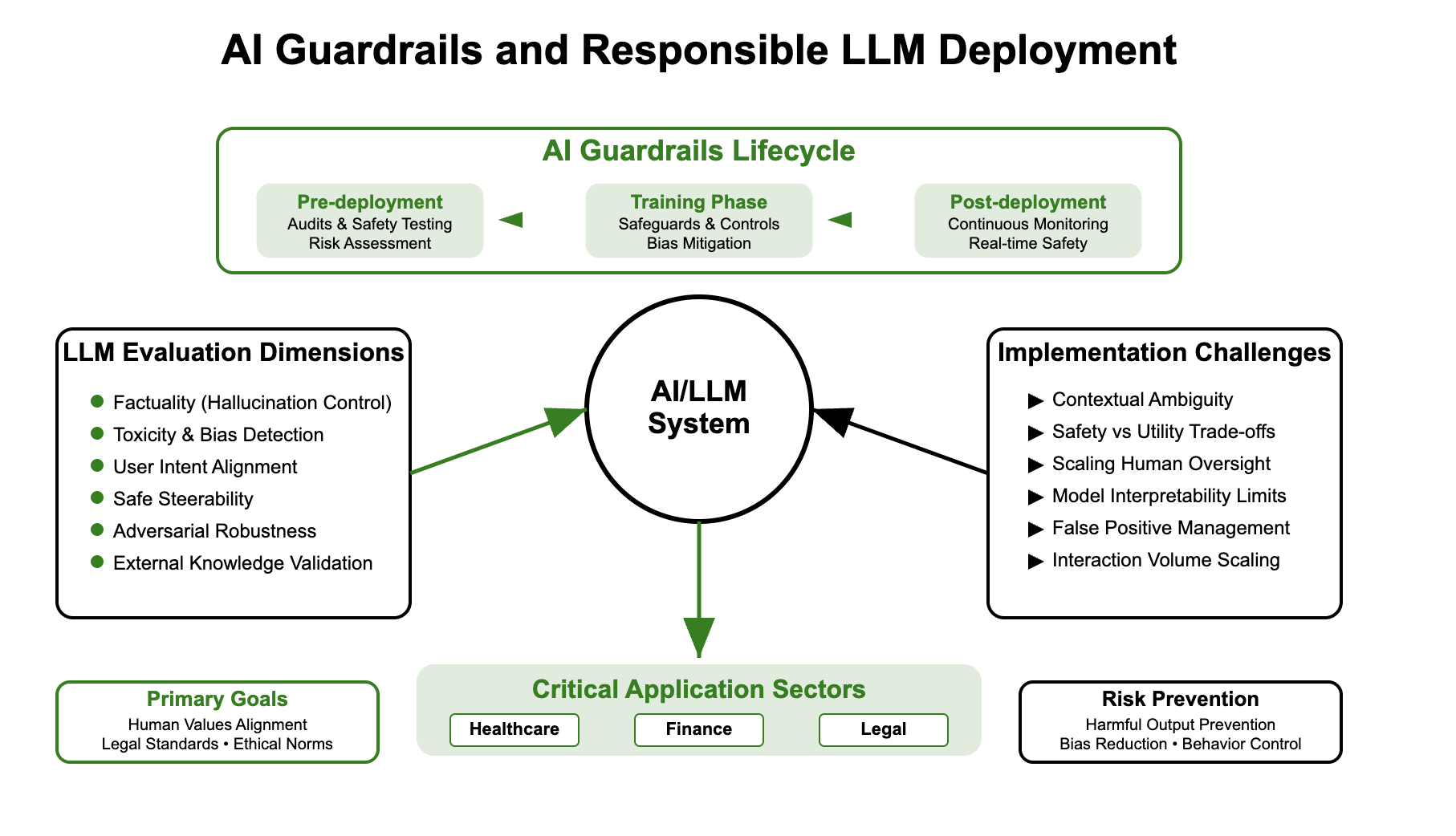

AI Guardrails and Trustworthy LLM Evaluation: Building Responsible AI Systems

Table of contents Introduction: The Rising Need for AI Guardrails What Are AI Guardrails? Trustworthy AI: Principles and Pillars LLM […]

Anthropic Proposes Targeted Transparency Framework for Frontier AI Systems

As the development of large-scale AI systems accelerates, concerns about safety, oversight, and risk management are becoming increasingly critical. In […]

VERINA: Evaluating LLMs on End-to-End Verifiable Code Generation with Formal Proofs

LLM-Based Code Generation Faces a Verification Gap: LLMs have shown strong performance in programming and are widely adopted in tools […]

Meta AI Open-Sources LlamaFirewall: A Security Guardrail Tool to Help Build Secure AI Agents

As AI agents become more autonomous—capable of writing production code, managing workflows, and interacting with untrusted data sources—their exposure to […]

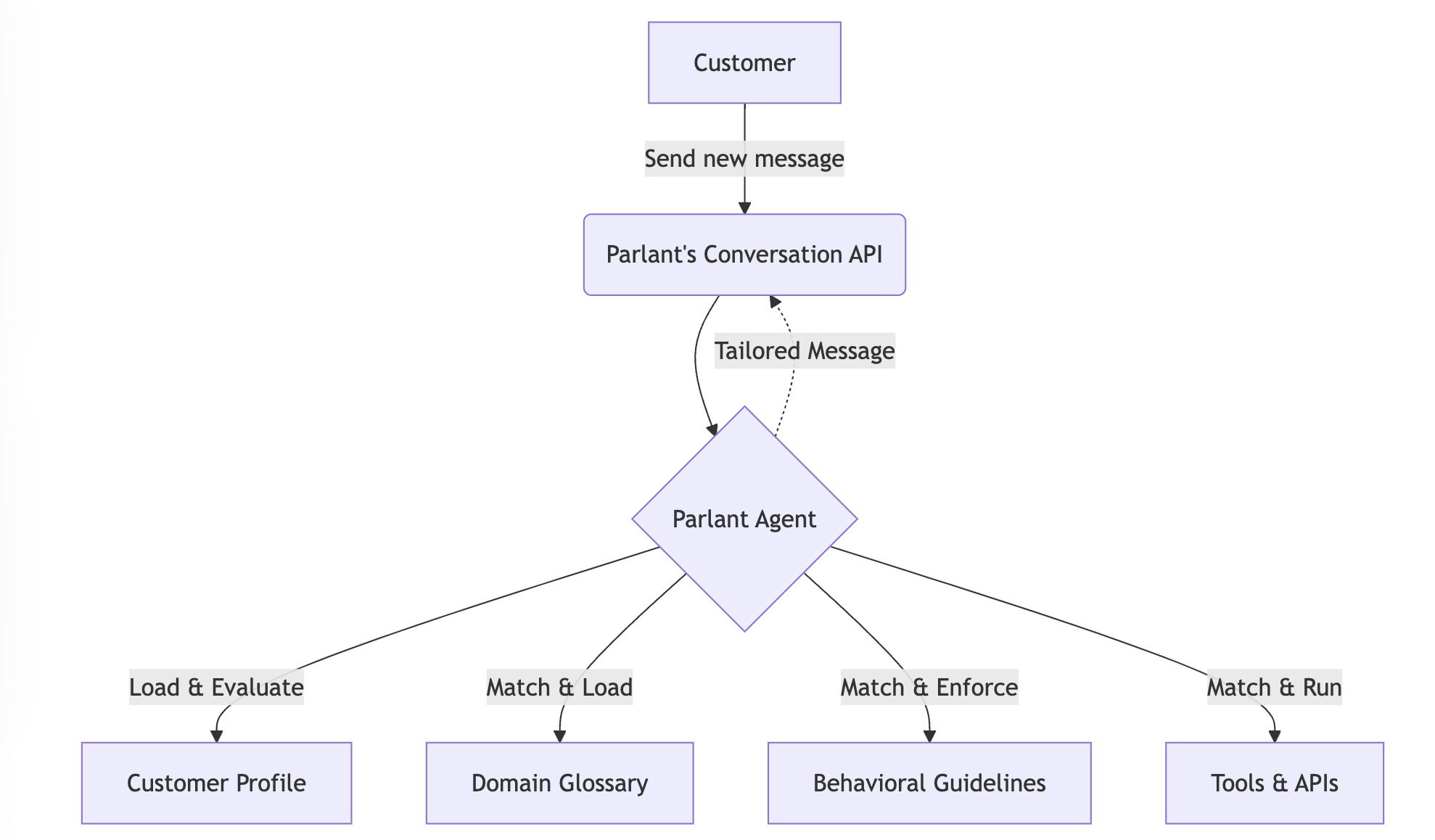

Introducing Parlant: The Open-Source Framework for Reliable AI Agents

The Problem: Why Current AI Agent Approaches Fail. If you have ever designed and implemented an LLM-based chatbot in […]
