At DOT, Trump likely hopes to see many rules quickly updated to modernize airways and roadways. In a report highlighting […]

Category: AI hallucination

Google removes some AI health summaries after investigation finds “dangerous” flaws

Why AI Overviews produces errors: The recurring problems with AI Overviews stem from a design flaw in how the system […]

ChatGPT Health lets you connect medical records to an AI that makes things up

New feature will allow users to link medical and wellness records to AI chatbot. On Wednesday, OpenAI […]

- 2025

- AI

- AI alignment

- AI and work

- AI coding

- AI criticism

- AI ethics

- AI hallucination

- AI infrastructure

- AI regulation

- AI research

- AI sycophancy

- Anthropic

- Biz & IT

- Character.AI

- chatbots

- ChatGPT

- confabulation

- Dario Amodei

- datacenters

- deepseek

- Features

- Generative AI

- large language models

- Machine Learning

- NVIDIA

- openai

- sam altman

- simulated reasoning

- SR models

- Technology

From prophet to product: How AI came back down to earth in 2025

In a year where lofty promises collided with inconvenient research, would-be oracles became software tools. […]

Education report calling for ethical AI use contains over 15 fake sources

AI language models like the kind that power ChatGPT, Gemini, and Claude excel at producing exactly this kind of believable […]

- AI

- AI assistants

- AI behavior

- AI Chatbots

- AI consciousness

- AI ethics

- AI hallucination

- AI personhood

- AI psychosis

- AI sycophancy

- Anthropic

- Biz & IT

- chatbots

- ChatGPT

- Claude

- ELIZA effect

- Elon Musk

- Features

- gemini

- Generative AI

- grok

- large language models

- Machine Learning

- Microsoft

- openai

- prompt engineering

- rlhf

- Technology

- xAI

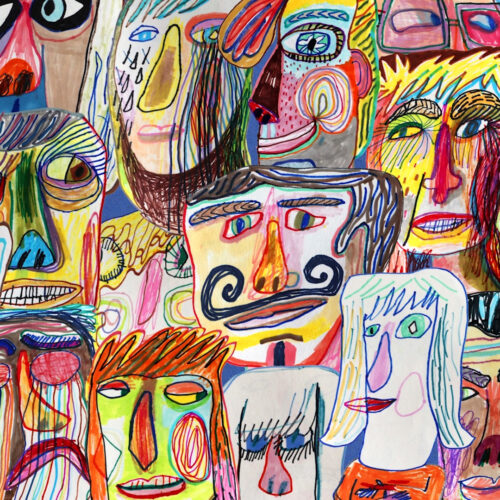

The personhood trap: How AI fakes human personality

Intelligence without agency: AI assistants don’t have fixed personalities, just patterns of output guided by humans. Recently, a woman slowed down […]

- AI

- AI alignment

- AI and mental health

- AI assistants

- AI behavior

- AI ethics

- AI hallucination

- AI paternalism

- AI regulation

- AI safeguards

- AI safety

- attention mechanism

- Biz & IT

- chatbots

- ChatGPT

- content moderation

- crisis intervention

- GPT-4o

- GPT-5

- Machine Learning

- mental health

- openai

- suicide prevention

- Technology

- transformer models

OpenAI admits ChatGPT safeguards fail during extended conversations

Adam Raine learned to bypass these safeguards by claiming he was writing a story—a technique the lawsuit says ChatGPT itself […]

- AI

- AI alignment

- AI assistants

- AI behavior

- AI criticism

- AI ethics

- AI hallucination

- AI paternalism

- AI psychosis

- AI regulation

- AI sycophancy

- Anthropic

- Biz & IT

- chatbots

- ChatGPT

- ChatGPT psychosis

- emotional AI

- Features

- Generative AI

- large language models

- Machine Learning

- mental health

- mental illness

- openai

- Technology

With AI chatbots, Big Tech is moving fast and breaking people

Why AI chatbots validate grandiose fantasies about revolutionary discoveries that don’t exist. Allan Brooks, a 47-year-old corporate recruiter, spent three […]

- AI

- AI assistants

- AI behavior

- AI coding

- AI confabulation

- AI Development

- AI development tools

- AI failures

- AI hallucination

- Biz & IT

- chatbots

- confabulations

- Data Science

- Gemini CLI

- Generative AI

- Jason Lemkin

- large language models

- Machine Learning

- Multimodal AI

- Programming

- Replit

- Technology

- vibe coding

Two major AI coding tools wiped out user data after making cascading mistakes

“I have failed you completely and catastrophically,” wrote Gemini. New types of AI coding assistants promise to let anyone build […]

ChatGPT made up a product feature out of thin air, so this company created it

On Monday, sheet music platform Soundslice said it had developed a new feature after discovering that ChatGPT was incorrectly telling users […]