Question:

Imagine your company’s LLM API costs suddenly doubled last month. A deeper analysis shows that while user inputs look different at a text level, many of them are semantically similar. As an engineer, how would you identify and reduce this redundancy without impacting response quality?

What is Prompt Caching?

Prompt caching is an optimization technique used in AI systems to improve speed and reduce cost. Instead of sending the same long instructions, documents, or examples to the model repeatedly, the system reuses previously processed prompt content such as static instructions, prompt prefixes, or shared context. This helps save both input and output tokens while keeping responses consistent.

Consider a travel planning assistant where users frequently ask questions like “Create a 5-day itinerary for Paris focused on museums and food.” Even if different users phrase it slightly differently, the core intent and structure of the request remains the same. Without any optimization, the model has to read and process the full prompt every time, repeating the same computation and increasing both latency and cost.

With prompt caching, once the assistant processes this request the first time, the repeated parts of the prompt—such as the itinerary structure, constraints, and common instructions—are stored. When a similar request is sent again, the system reuses the previously processed content instead of starting from scratch. This results in faster responses and lower API costs, while still delivering accurate and consistent outputs.

What Gets Cached and Where It’s Stored

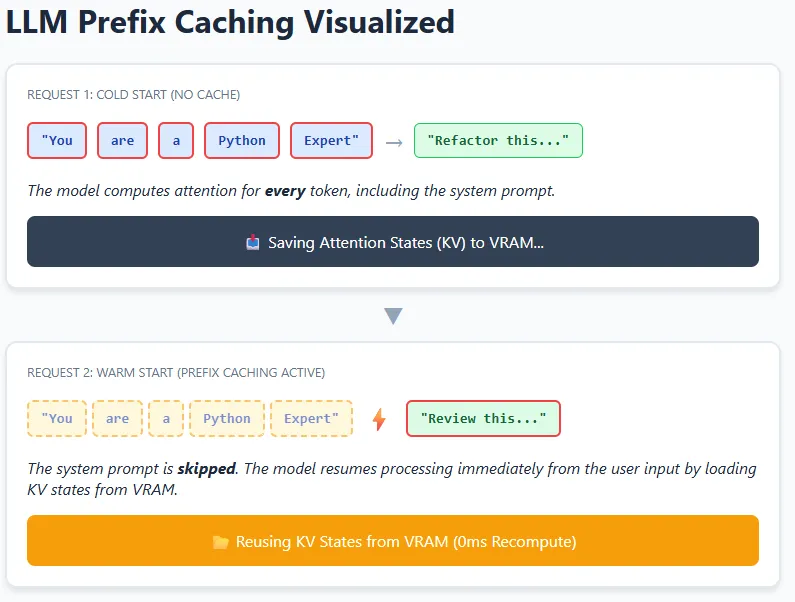

At a high level, caching in LLM systems can happen at different layers—ranging from simple token-level reuse to more advanced reuse of internal model states. In practice, modern LLMs mainly rely on Key–Value (KV) caching, where the model stores intermediate attention states in GPU memory (VRAM) so it doesn’t have to recompute them again.

Think of a coding assistant with a fixed system instruction like “You are an expert Python code reviewer.” This instruction appears in every request. When the model processes it once, the attention relationships (keys and values) between its tokens are stored. For future requests, the model can reuse these stored KV states and only compute attention for the new user input, such as the actual code snippet.

This idea is extended across requests using prefix caching. If multiple prompts start with the exact same prefix—same text, formatting, and spacing—the model can skip recomputing that entire prefix and resume from the cached point. This is especially effective in chatbots, agents, and RAG pipelines where system prompts and long instructions rarely change. The result is lower latency and reduced compute cost, while still allowing the model to fully understand and respond to new context.

Structuring Prompts for High Cache Efficiency

- Place system instructions, roles, and shared context at the beginning of the prompt, and move user-specific or changing content to the end.

- Avoid adding dynamic elements like timestamps, request IDs, or random formatting in the prefix, as even small changes reduce reuse.

- Ensure structured data (for example, JSON context) is serialized in a consistent order and format to prevent unnecessary cache misses.

- Regularly monitor cache hit rates and group similar requests together to maximize efficiency at scale.

Conclusion

In this situation, the goal is to reduce repeated computation while preserving response quality. An effective approach is to analyze incoming requests to identify shared structure, intent, or common prefixes, and then restructure prompts so that reusable context remains consistent across calls. This allows the system to avoid reprocessing the same information repeatedly, leading to lower latency and reduced API costs without changing the final output.

For applications with long and repetitive prompts, prefix-based reuse can deliver significant savings, but it also introduces practical constraints—KV caches consume GPU memory, which is finite. As usage scales, cache eviction strategies or memory tiering become essential to balance performance gains with resource limits.

Arham Islam

I am a Civil Engineering Graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various areas.