New paper reveals reducing “bias” means making ChatGPT stop mirroring users’ political language. “ChatGPT shouldn’t have political bias in any […]

Category: Alignment research

- AI

- AI alignment

- AI behavior

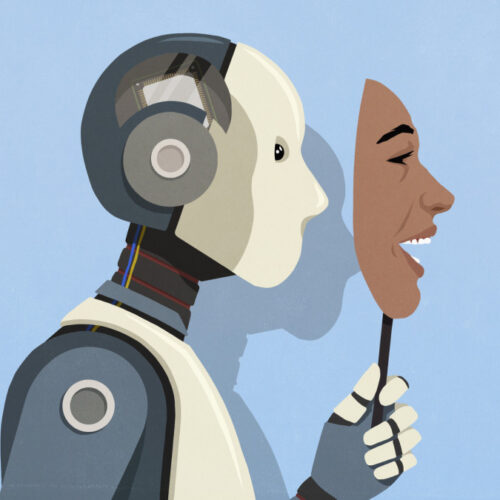

- AI deception

- AI ethics

- AI research

- AI safety

- AI safety testing

- AI security

- Alignment research

- Andrew Deck

- Anthropic

- Biz & IT

- Claude Opus 4

- Generative AI

- goal misgeneralization

- Jeffrey Ladish

- large language models

- Machine Learning

- o3 model

- OpenAI

- Palisade Research

- Reinforcement Learning

- Technology

Is AI really trying to escape human control and blackmail people?

Mankind behind the curtain Opinion: Theatrical testing scenarios explain why AI models produce alarming outputs—and why we fall for it. […]

Researchers astonished by tool’s apparent success at revealing AI’s hidden motives

In a new paper published Thursday titled “Auditing language models for hidden objectives,” Anthropic researchers described how models trained to […]