Large language models (LLMs) have shown impressive results in many areas, but when it comes to playing classic text adventure games, they often struggle to make it past even the simplest of puzzles.

A recent experiment by Entropic Thoughts tested how well various models could navigate and solve interactive fiction, using a structured benchmark to compare results across multiple games. The takeaway was that while some models can make reasonable progress, even the best require guidance and struggle with the skills these classic problem-solving games demand.


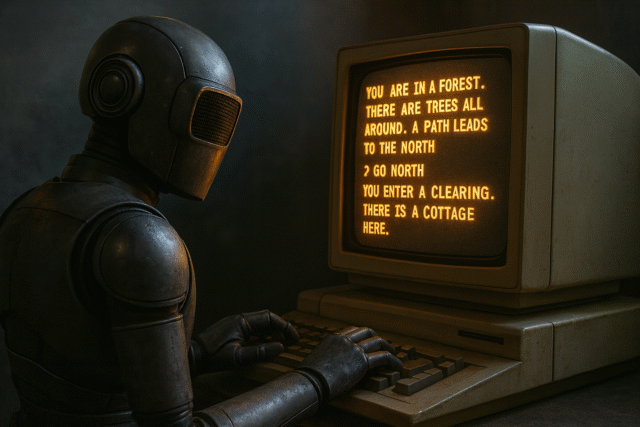

The experiment started with a Perl script that linked a text adventure interpreter to a large language model. The script passed game output to the model, kept a short-term memory of recent events, and had the model choose its next command based on a fixed prompt. That prompt encouraged the model to focus on important clues, track its progress, and maintain consistent goals, reducing the risk of aimless wandering.
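The loop described above can be sketched roughly as follows. The original script was written in Perl and its prompt is not reproduced here, so this is only a minimal Python sketch under stated assumptions: `game_step` and `ask_model` are hypothetical stand-ins for the interpreter and the model API, `SYSTEM_PROMPT` is invented wording, and a bounded `deque` is just one simple way to model short-term memory.

```python
from collections import deque
from typing import Callable, Deque, List

# Hypothetical prompt text; the experiment's real prompt is not reproduced here.
SYSTEM_PROMPT = (
    "You are playing a text adventure. Focus on important clues, "
    "track your progress, and keep your goals consistent.\n"
    "Reply with a single game command."
)


def play(
    game_step: Callable[[str], str],  # hypothetical: sends a command, returns game output
    ask_model: Callable[[str], str],  # hypothetical: sends a prompt, returns model reply
    opening_text: str,
    max_turns: int = 20,
    memory_size: int = 8,
) -> List[str]:
    """Alternate between model and interpreter; return the commands issued."""
    # Short-term memory: once full, the oldest transcript lines are dropped.
    memory: Deque[str] = deque([opening_text], maxlen=memory_size)
    commands: List[str] = []
    for _ in range(max_turns):
        prompt = SYSTEM_PROMPT + "\n\nRecent transcript:\n" + "\n".join(memory)
        reply = ask_model(prompt).strip()
        # Use only the first line of the reply as the command; fall back to "look".
        command = reply.splitlines()[0] if reply else "look"
        output = game_step(command)
        memory.append("> " + command)
        memory.append(output)
        commands.append(command)
    return commands


# Toy stand-ins so the sketch runs without a real interpreter or model.
_scripted = iter(["answer phone", "open dresser"])
demo_prompts: List[str] = []


def fake_model(prompt: str) -> str:
    demo_prompts.append(prompt)
    return next(_scripted, "wait")


def fake_game(command: str) -> str:
    return "You try to " + command + "."


demo_commands = play(fake_game, fake_model, "The phone is ringing.", max_turns=3)
print(demo_commands)  # ['answer phone', 'open dresser', 'wait']
```

Because the memory is bounded, older game output drops out of the prompt automatically, which keeps prompts short but also explains how a model can "forget" an item it picked up many turns earlier. A real harness would also need error handling and interpreter startup, which this sketch omits.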

One of the early lessons was that without guidance, models quickly lost track of objectives. They could loop endlessly on unimportant tasks, misinterpret puzzles, or become fixated on unnecessary details.

Even with structured prompting, they often failed to remember inventory items or to follow the game’s exact object names, leading to wasted turns.

Tracking AI performance

To measure performance in a repeatable way, Entropic Thoughts designed an achievement-based evaluation system. Each game was assigned a list of small, early-game milestones, such as answering the phone or opening a dresser in the first game, 9:05.

Models were given a fixed number of turns to collect as many achievements as possible. This made results comparable without requiring any model to reach a distant game ending.
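A minimal sketch of this kind of scoring follows, assuming each achievement can be detected from the game's text output. The names `ACHIEVEMENTS_905` and `score_run` and the trigger phrases are hypothetical; the benchmark's actual achievement definitions may work quite differently.

```python
from typing import Callable, Dict, List

# Hypothetical achievement checks for 9:05. The trigger phrases are invented for
# illustration; a real benchmark would key off the game's actual responses or state.
ACHIEVEMENTS_905: Dict[str, Callable[[str], bool]] = {
    "answer the phone": lambda out: "pick up the phone" in out.lower(),
    "open the dresser": lambda out: "open the dresser" in out.lower(),
}


def score_run(
    outputs: List[str],
    achievements: Dict[str, Callable[[str], bool]],
    turn_budget: int,
) -> float:
    """Fraction of achievements unlocked within the first `turn_budget` turns."""
    unlocked = set()
    for out in outputs[:turn_budget]:  # turns past the budget are ignored
        for name, check in achievements.items():
            if check(out):
                unlocked.add(name)
    return len(unlocked) / len(achievements)


# Toy transcript: one game response per turn.
transcript = [
    "You pick up the phone.",
    "Nothing happens.",
    "You open the dresser.",
]
print(score_run(transcript, ACHIEVEMENTS_905, turn_budget=2))  # 0.5
print(score_run(transcript, ACHIEVEMENTS_905, turn_budget=3))  # 1.0
```

Scoring against a fixed turn budget rather than game completion gives every model the same number of chances, and a partial score is still informative.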

The first tests covered 9:05, Lockout, Dreamhold, and Lost Pig, and the results varied widely. Grok 4, Claude 4 Sonnet, and Gemini 2.5 Flash all achieved strong scores in the easier games, while smaller models such as Mistral Small 3 and gpt-4o-mini struggled to earn even basic achievements.

Costs ranged from under a dollar per million tokens for some models to over $70 per million tokens for top-end models like Claude 4 Opus.

Adjusting the scores for game difficulty revealed that the cheaper Gemini 2.5 Flash performed on par with much more expensive models, and sometimes exceeded them, when guided by the same structured prompt.
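The exact adjustment method isn't described here, so the following sketch assumes one plausible approach, not necessarily the one Entropic Thoughts used: treat the average score all models achieved on a game as a rough proxy for its difficulty, and express each model's score as a ratio to that average. The function name `difficulty_adjusted`, the model and game names, and the numbers are all placeholders, not results from the experiment.

```python
from statistics import mean
from typing import Dict

# model -> game -> raw score (fraction of achievements earned)
Scores = Dict[str, Dict[str, float]]


def difficulty_adjusted(scores: Scores) -> Dict[str, float]:
    """Average of each model's score relative to the all-model mean per game."""
    games = {game for per_game in scores.values() for game in per_game}
    # Mean score per game across the models that played it: a rough difficulty proxy.
    game_mean = {
        game: mean(s[game] for s in scores.values() if game in s) for game in games
    }
    adjusted: Dict[str, float] = {}
    for model, per_game in scores.items():
        # Skip games nobody scored on, since the ratio would be undefined.
        ratios = [per_game[g] / game_mean[g] for g in per_game if game_mean[g] > 0]
        adjusted[model] = mean(ratios) if ratios else 0.0
    return adjusted


# Toy values only: 1.0 means "average for these games", above 1.0 is better.
toy = {
    "model_a": {"easy_game": 0.8, "hard_game": 0.2},
    "model_b": {"easy_game": 0.4, "hard_game": 0.2},
}
print(difficulty_adjusted(toy))
```

A ratio like this rewards doing well on games where other models struggled, which is the intuition behind adjusting for difficulty; a regression or rank-based method would be reasonable alternatives.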

The tests were then expanded to three more games: For a Change, Plundered Hearts, and So Far.

Across different runs of So Far, scores ranged from 0 percent to over 70 percent, while Lost Pig and Plundered Hearts, which start in a more linear fashion, proved more reliable for testing.

Repeated runs with Gemini 2.5 Flash showed very high score variability, particularly in open-ended games with many distractions. This suggests that model evaluations should avoid games that allow large amounts of aimless exploration early on, unless the goal is to study that behavior directly.
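Measuring this kind of variability only takes repeated runs per game and a spread statistic. The sketch below summarizes runs per game and flags games whose scores swing too much to serve as reliable benchmarks. The function names and the `max_stdev` cutoff are hypothetical, and the run scores are toy numbers, not the experiment's data.

```python
from statistics import mean, stdev
from typing import Dict, List


def summarize_runs(runs: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Per-game mean, sample standard deviation, and min-to-max range of scores."""
    summary: Dict[str, Dict[str, float]] = {}
    for game, scores in runs.items():
        summary[game] = {
            "mean": mean(scores),
            # stdev needs at least two runs; report 0.0 for a single run.
            "stdev": stdev(scores) if len(scores) > 1 else 0.0,
            "range": max(scores) - min(scores),
        }
    return summary


def unstable_games(runs: Dict[str, List[float]], max_stdev: float = 0.15) -> List[str]:
    """Games whose run-to-run spread exceeds a (hypothetical) stability cutoff."""
    summary = summarize_runs(runs)
    return sorted(g for g, s in summary.items() if s["stdev"] > max_stdev)


# Toy scores (fraction of achievements) from repeated runs of the same model.
toy_runs = {
    "linear_game": [0.5, 0.5, 0.6],
    "open_ended_game": [0.0, 0.7, 0.3],
}
print(unstable_games(toy_runs))  # ['open_ended_game']
```

Reporting the range alongside the standard deviation helps when runs are few: a game that scores 0 percent in one run and over 70 percent in another, as So Far did, is a poor yardstick no matter which model is playing.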

From this work, Entropic Thoughts drew several conclusions. Firstly, while LLMs can be guided to make better choices in text adventures, their baseline problem-solving ability in these environments is still poor. They do, however, benefit greatly from structured prompts that keep them focused on relevant information and clear objectives.

Secondly, hints have surprisingly little impact, as the models tend to revert to prior unproductive patterns.

Finally, cost-effective models like Gemini 2.5 Flash can perform just as well as high-priced models in this context, making them more appealing for budget-conscious experiments.

LLMs will no doubt improve over time, but for now, while they may have the patience for text adventures, they still lack the skill to consistently beat them.

What do you think about using LLMs to play and benchmark classic text adventure games? Let us know in the comments.