As TechRadar’s Senior Health and Fitness Editor, I’ve used a lot of movement-tracking tools, from the best smartwatches to gait analysis and even AI tools to calculate movement efficiency.

I’ve also spoken to people responsible for making some of the best running shoes on the planet, who have made it their lives’ missions to understand the complex biomechanics of the human foot and body – how the foot splays as it falls, how the position of the knee affects the efficiency of the stride, what kind of adjustments to make to the shoes to mitigate issues, and so on.

Walk, Run, Crawl, RL Fun | Boston Dynamics | Atlas – YouTube

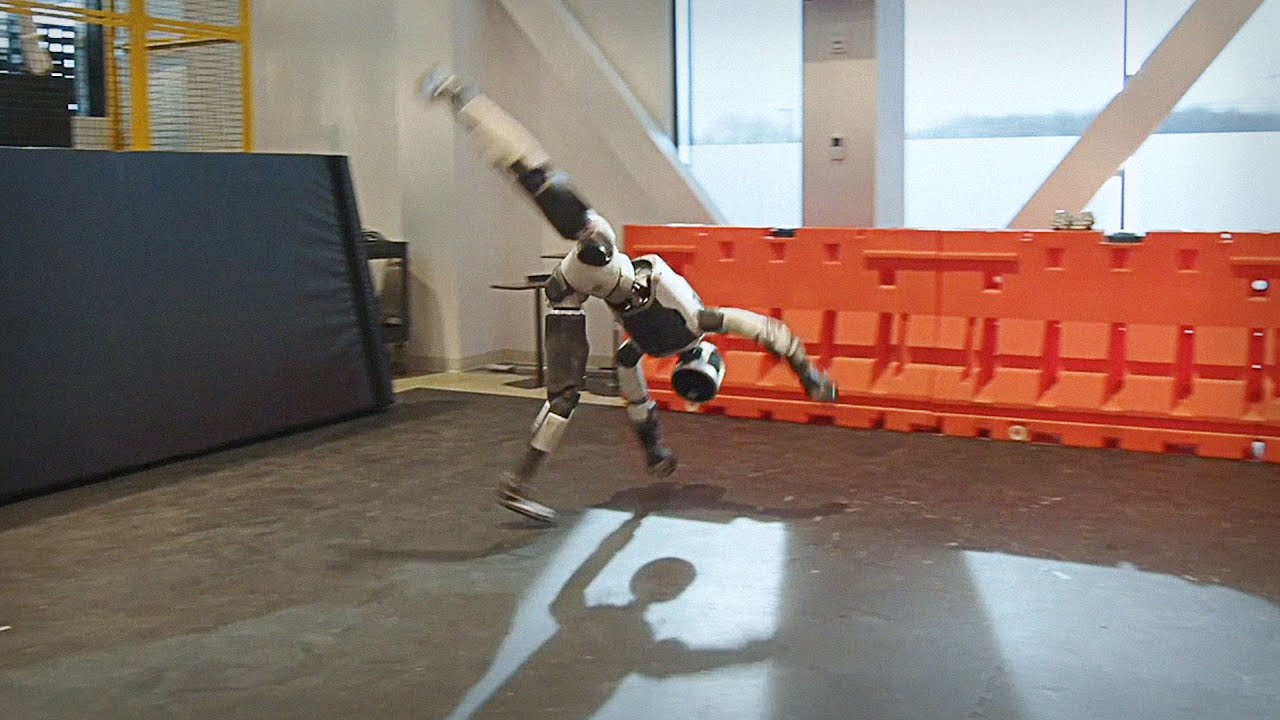

I was fascinated. As someone who looks at the intersection of human performance and technology on a regular basis, that little run felt like a landmark moment. Atlas’ walking demonstration at CES, which you can see below in a video taken by our own Editor-at-Large Lance Ulanoff at his Atlas experience, still looked a little gawky: a shuffling, half-squat walk with small steps in case it fell over, rather than a confident stride.

However, watching the majority of the demonstration both thrilled and unnerved me. Atlas was so lifelike while it was moving, and yet the moment the robot came to a halt I was left cold by how unnaturally still it became. The contrast between this statue-like assemblage of metal and plastic and a living, breathing person was jarring.

How on earth did Boston Dynamics get its Atlas robot to copy a movement in this degree of detail?

Motion capture and VR

The CBS news show 60 Minutes aired a segment – you can see the video below – on Boston Dynamics and Atlas, which featured a visit to the Hyundai factory in which the robot is being tested, and included a look at the way new movements are taught to the robot.

My use of ‘taught’ rather than ‘programmed’ is deliberate: the development team uses AI and repetition to encourage the robot to learn a movement, rather than follow a pattern precisely. The reason for this is that the robot’s body is very different to a human body, despite its similar size and proportions, and must find the most efficient pathway on its own. Boston Dynamics dubs this method ‘kinematics’.

I’ve embedded the video below, but the long and the short of it is that Boston Dynamics records patterns of human motion by having a human model perform the movement. The model wears either an Xsens motion-tracking bodysuit for full-body movements, or a virtual-reality headset and handsets for more dexterity-based tasks, such as tying a knot.

This turns physical motion into digital data, which can be ‘retargeted’ or mapped from a human body onto a robot body to account for the different proportions and joints of the robot.
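To make the idea of retargeting a little more concrete, here is a minimal sketch in Python. It is not Boston Dynamics’ or Xsens’ code: the joint names, the joint limits and the two helper functions are all hypothetical, chosen only to illustrate the two adjustments described above, which are fitting human joint angles into the robot’s range of motion and rescaling positions for differently sized limbs.

```python
from dataclasses import dataclass


@dataclass
class JointLimit:
    lower: float  # minimum joint angle, in radians
    upper: float  # maximum joint angle, in radians


# Hypothetical limits for an imaginary robot; real values would come
# from the robot's own specification.
ROBOT_LIMITS = {
    "knee": JointLimit(0.0, 2.0),
    "elbow": JointLimit(0.0, 2.4),
}


def retarget_frame(human_angles, limits=ROBOT_LIMITS):
    """Map one frame of captured human joint angles onto the robot.

    Joints the robot does not have are dropped, and every remaining
    angle is clamped into the robot's allowed range.
    """
    robot_angles = {}
    for joint, angle in human_angles.items():
        if joint not in limits:
            continue  # the robot has no matching joint
        limit = limits[joint]
        robot_angles[joint] = min(max(angle, limit.lower), limit.upper)
    return robot_angles


def scale_position(human_pos, human_limb_len, robot_limb_len):
    """Rescale a captured 3D position by the ratio of limb lengths."""
    ratio = robot_limb_len / human_limb_len
    return tuple(coord * ratio for coord in human_pos)


# One captured frame: the knee bends further than the robot allows,
# and the "toe" joint has no robot equivalent.
frame = {"knee": 2.5, "elbow": 1.0, "toe": 0.3}
print(retarget_frame(frame))
print(scale_position((1.0, 2.0, 0.5), human_limb_len=0.5, robot_limb_len=0.25))
```

A production pipeline would work on whole skeletons and solve for poses that respect balance and contact with the ground, but the principle is the same: the human’s motion is the starting point, and the robot’s body sets the constraints.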

Progress made on AI-powered humanoid robots | 60 Minutes – YouTube

Once that’s done, the robot learns how to perform the movement in a simulation environment. In the 60 Minutes video you can see thousands of virtual robots performing a basic jumping jack. Some of them fall, stumble or perform the move incorrectly, but many of them get it right. Each simulated movement creates more data.
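As a rough illustration of why running thousands of virtual robots is useful, the toy Python sketch below tries many slightly different versions of a movement, discards the attempts that “fall”, and keeps the one that scores best. Everything in it is an assumption made for illustration: the `simulate` function stands in for a real physics simulator, the single `step_length` parameter stands in for a full movement, and the simple random search is a much cruder technique than the AI training Boston Dynamics actually uses.

```python
import random


def simulate(step_length, rng):
    """Toy stand-in for one simulated robot attempting a movement.

    Returns a score (higher is better), or None if the robot 'falls'.
    The target value of 0.6 is arbitrary and purely illustrative.
    """
    effective = step_length + rng.gauss(0.0, 0.05)  # simulated wobble
    if effective < 0.0 or effective > 1.0:
        return None  # the virtual robot fell over
    return -abs(effective - 0.6)


def train(num_robots=1000, seed=0):
    """Try many candidate movements and keep the best-scoring one."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    best_param, best_score = None, float("-inf")
    falls = 0
    for _ in range(num_robots):
        candidate = rng.uniform(0.0, 1.2)
        score = simulate(candidate, rng)
        if score is None:
            falls += 1
            continue
        if score > best_score:
            best_param, best_score = candidate, score
    return best_param, falls


best, falls = train()
print(f"best step length: {best:.3f}, falls: {falls} of 1000")
```

Even in this simplified form, the failed attempts are not wasted: each one rules out a version of the movement, which is part of why simulating at scale produces useful data so quickly.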

Once the robot’s collective hive mind (also terrifying) has figured out the best way of doing the movement, this data is rolled out to a whole fleet of robots, so the robotics team can train new movements at scale with massive efficiency. In this way, a whole army of robots can be trained to perform a new movement pattern in an afternoon, such as operating a new production line – but hopefully not more complex patterns, such as moving in sync to rise up against their former masters.

Xsens, in a blog post, describes this pipeline in far simpler terms: “Capture human motion → Retarget to the robot → Train at scale in simulation → Deploy to hardware → Repeat.”

Despite the fact that robots can move in unnatural ways, they aren’t yet anywhere close to moving as efficiently as humans can, or with as much complexity. However, they’re certainly getting there, and it looks like we’re only a few short years from seeing genuinely humanoid robots in the home, the workplace and all sorts of other arenas.
